Automating Triage Using External Monitoring Signals

When incidents strike, every second counts. Yet many teams still rely on manual processes to determine which alerts need immediate attention and which can wait. By automating triage using external monitoring signals, you can dramatically reduce the time between detection and resolution while ensuring the right people work on the right problems.

External monitoring signals provide an unbiased view of your system's health from the user's perspective. Unlike internal metrics that might show everything running smoothly, external monitors catch issues that directly impact customer experience. When combined with intelligent automation, these signals transform chaotic incident response into a streamlined process.

Understanding External Monitoring Signals

External monitoring signals come from sources outside your infrastructure: synthetic checks and real user monitoring that observe your services the way users experience them, and third-party status pages that report on the health of your dependencies. These signals offer unique insights:

Synthetic monitoring: Automated scripts that simulate user interactions from various geographic locations

Real user monitoring (RUM): Actual user experience data collected from browsers and mobile apps

Third-party status pages: Health information from your vendors and dependencies

API availability checks: External verification of your API endpoints

DNS and SSL certificate monitoring: Critical infrastructure components visible from outside

Each signal type provides different context for triage decisions. Synthetic monitoring might detect regional outages, while RUM data reveals performance degradation affecting specific user segments.

The Challenge of Manual Triage

Manual triage creates several problems that compound during incidents:

Alert fatigue: Engineers become desensitized to constant notifications, potentially missing critical issues among the noise.

Inconsistent prioritization: Different team members may assess severity differently based on their experience and understanding.

Context switching: Manually gathering information from multiple sources delays response time.

Knowledge gaps: Not everyone knows which services are business-critical or how they interconnect.

These challenges multiply in complex architectures that span multiple vendors and microservices. Building a knowledge base from past incidents helps, but automation goes further by applying that knowledge consistently every time an alert fires.

Building an Automated Triage System

Successful automation requires thoughtful design and clear business rules. Here's how to build a system that actually helps:

1. Define Clear Severity Levels

Create objective criteria for each severity level based on business impact:

Critical (P1): Customer-facing services down, revenue impact, data loss risk

High (P2): Degraded performance affecting many users, key features unavailable

Medium (P3): Limited impact, workarounds available, internal tools affected

Low (P4): Cosmetic issues, minor bugs, optimization opportunities
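The criteria above can be encoded as ordered rules checked from most to least severe, so an alert that matches several levels lands at the highest one. This is a minimal sketch: the `ImpactSummary` fields and the 20% cut-off for "many users" are hypothetical placeholders for whatever your signals actually report.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    P1 = "Critical"
    P2 = "High"
    P3 = "Medium"
    P4 = "Low"


@dataclass
class ImpactSummary:
    # Hypothetical fields, derived upstream from external monitoring signals.
    customer_facing_down: bool = False
    revenue_impact: bool = False
    data_loss_risk: bool = False
    affected_user_pct: float = 0.0      # share of active users affected, 0-100
    key_feature_unavailable: bool = False
    workaround_available: bool = False
    internal_tools_affected: bool = False


# Assumed cut-off for "many users"; tune it to your own traffic.
MANY_USERS_PCT = 20.0


def classify(impact: ImpactSummary) -> Severity:
    """Return the highest severity whose criteria match, checking P1 first."""
    if impact.customer_facing_down or impact.revenue_impact or impact.data_loss_risk:
        return Severity.P1
    if impact.affected_user_pct >= MANY_USERS_PCT or impact.key_feature_unavailable:
        return Severity.P2
    if impact.workaround_available or impact.internal_tools_affected:
        return Severity.P3
    # Anything left is treated as cosmetic or minor.
    return Severity.P4
```

For example, `classify(ImpactSummary(affected_user_pct=35.0))` returns `Severity.P2`.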

2. Map External Signals to Business Impact

Connect monitoring data to specific business outcomes:

Payment gateway down = Direct revenue loss

Login service degraded = Customer frustration and support tickets

CDN issues = Slow page loads affecting conversion rates

Third-party API failures = Feature degradation

This mapping enables automated systems to make intelligent triage decisions based on actual business priorities.
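A lookup table is the simplest form of this mapping. In this sketch the service keys are hypothetical; the payment gateway's P1 follows from the P1 definition above, while the other priorities are illustrative assumptions, since the mapping describes impact without assigning a level. Unmapped services fall back to human review instead of silently defaulting to low priority.

```python
from typing import NamedTuple


class BusinessImpact(NamedTuple):
    description: str
    default_priority: str   # "P1" to "P4"


# Hypothetical service keys; use the names your monitors actually report.
# Priorities other than the payment gateway's are illustrative assumptions.
IMPACT_MAP: dict[str, BusinessImpact] = {
    "payment-gateway": BusinessImpact("Direct revenue loss", "P1"),
    "login": BusinessImpact("Customer frustration and support tickets", "P2"),
    "cdn": BusinessImpact("Slow page loads affecting conversion rates", "P2"),
    "third-party-api": BusinessImpact("Feature degradation", "P3"),
}

# Unmapped services go to a person rather than silently getting low priority.
NEEDS_REVIEW = BusinessImpact("Unknown impact: needs human review", "P3")


def impact_for(service: str) -> BusinessImpact:
    """Look up the business impact of a failing service."""
    return IMPACT_MAP.get(service, NEEDS_REVIEW)
```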

3. Implement Smart Routing Rules

Configure your automation to route incidents based on:

Service ownership: Direct alerts to the team responsible for the affected service.

Time of day: Route to on-call engineers during off-hours and to the full team during business hours.

Geographic impact: Escalate region-wide issues faster than isolated problems.

Customer tier: Prioritize issues affecting enterprise customers or high-value segments.
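The four routing dimensions can be combined in one function. This sketch assumes a hypothetical service-to-team ownership table, business hours of 09:00 to 18:00 on weekdays, and alerts that already carry a `region_wide` flag and a customer tier; none of these come from a specific incident management platform.

```python
from dataclasses import dataclass
from datetime import datetime, time

# Assumed business hours (local time, Monday to Friday).
BUSINESS_START = time(9, 0)
BUSINESS_END = time(18, 0)

# Hypothetical ownership table: service name -> owning team.
SERVICE_OWNERS = {"payment-gateway": "payments", "api": "infrastructure"}


@dataclass
class Alert:
    service: str
    region_wide: bool       # True when every probe in a region fails, not just one
    customer_tier: str      # e.g. "enterprise" or "standard"
    fired_at: datetime


@dataclass
class Route:
    team: str
    audience: str           # "on-call" or "full-team"
    escalate_fast: bool


def route(alert: Alert) -> Route:
    # Service ownership: unknown services fall back to a default triage queue.
    team = SERVICE_OWNERS.get(alert.service, "triage-default")
    # Time of day: the full team during business hours, on-call otherwise.
    in_hours = (alert.fired_at.weekday() < 5
                and BUSINESS_START <= alert.fired_at.time() < BUSINESS_END)
    audience = "full-team" if in_hours else "on-call"
    # Geographic impact and customer tier both shorten the escalation path.
    escalate_fast = alert.region_wide or alert.customer_tier == "enterprise"
    return Route(team, audience, escalate_fast)
```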

4. Enrich Alerts with Context

Automated triage works best when alerts include relevant context:

Recent deployments or configuration changes

Current traffic levels and patterns

Related incidents or ongoing maintenance

Historical data about similar issues

Dependency status from external services
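Here is a sketch of enrichment for three of the five context types above (recent deployments, ongoing maintenance, and dependency status), assuming you can already fetch deployment records, maintenance windows, and vendor statuses as plain Python data. The two-hour lookback and treating the string "operational" as healthy are assumptions; traffic levels and historical data would be attached the same way.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Deployment:
    service: str
    version: str
    deployed_at: datetime


@dataclass
class EnrichedAlert:
    service: str
    fired_at: datetime
    recent_deploys: list[Deployment] = field(default_factory=list)
    degraded_dependencies: dict[str, str] = field(default_factory=dict)
    in_maintenance: bool = False


def enrich(service: str,
           fired_at: datetime,
           deploys: list[Deployment],
           vendor_status: dict[str, str],
           maintenance_windows: list[tuple[datetime, datetime]],
           lookback: timedelta = timedelta(hours=2)) -> EnrichedAlert:
    """Attach recent changes, maintenance windows, and dependency status to an alert."""
    # Recent deployments: same service, within the lookback window before the alert.
    recent = [d for d in deploys
              if d.service == service and fired_at - lookback <= d.deployed_at <= fired_at]
    # Ongoing maintenance: the alert fired inside a scheduled window.
    in_maintenance = any(start <= fired_at <= end for start, end in maintenance_windows)
    # Dependency status: keep only vendors that are not reporting "operational".
    degraded = {name: status for name, status in vendor_status.items()
                if status != "operational"}
    return EnrichedAlert(service, fired_at, recent, degraded, in_maintenance)
```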

Integrating External Monitoring Tools

Modern monitoring platforms offer APIs and webhooks that enable automation. Key integration points include:

Synthetic monitoring platforms: Connect tools like Pingdom, Datadog Synthetics, or New Relic to feed external perspective into your triage logic.

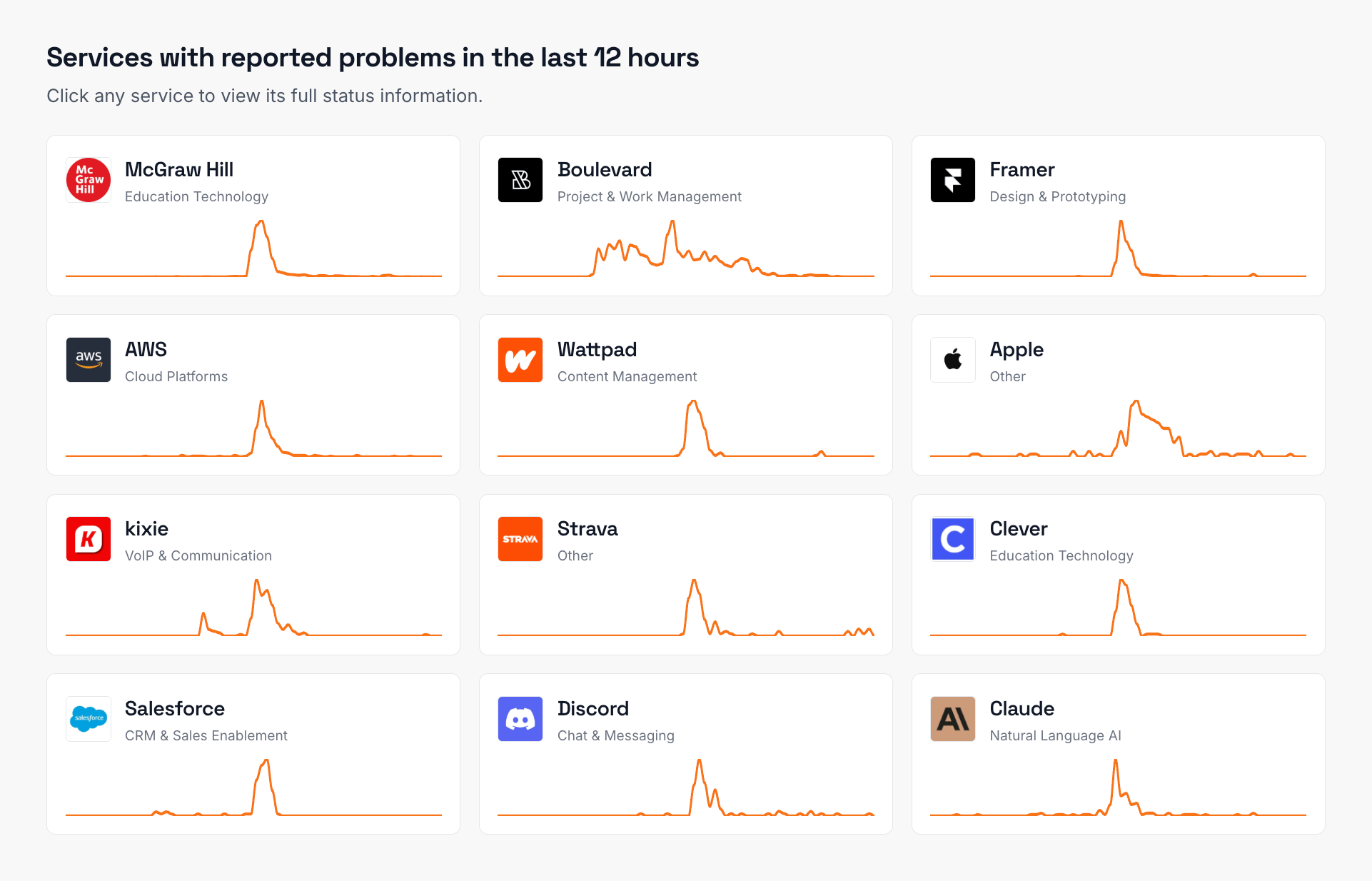

Status page aggregators: Services like IsDown's status page aggregator consolidate vendor health information, providing crucial context about third-party dependencies.

Incident management platforms: Tools like PagerDuty, Opsgenie, or Incident.io can execute your triage rules and manage escalations.

Collaboration tools: Automatically create focused incident channels in Slack or Teams with relevant stakeholders.

Practical Implementation Examples

Let's examine real-world scenarios where automating triage using external monitoring signals makes a difference:

E-commerce Platform

An online retailer implements automated triage that:

Monitors the checkout process via synthetic transactions every minute

Detects a payment gateway timeout from external monitoring

Automatically creates a P1 incident with a revenue impact calculation

Pages the payment team lead and creates a war room channel

Pulls recent status updates from the payment provider

Notifies customer service about potential order issues

Result: 15-minute reduction in detection-to-resolution time during Black Friday.
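The scenario does not specify how the revenue impact is calculated. One plausible sketch scales expected revenue by the share of synthetic checkouts that fail. The function name and parameters are hypothetical, as is the assumption that the synthetic failure rate approximates the real checkout failure rate.

```python
def estimated_revenue_at_risk(orders_per_minute: float,
                              avg_order_value: float,
                              checkout_failure_rate: float,
                              minutes_elapsed: float) -> float:
    """Rough revenue at risk: expected revenue during the incident times the failing share.

    checkout_failure_rate is the fraction (0 to 1) of synthetic checkouts failing,
    used here as a proxy for the fraction of real checkouts failing.
    """
    if not 0.0 <= checkout_failure_rate <= 1.0:
        raise ValueError("checkout_failure_rate must be between 0 and 1")
    return orders_per_minute * avg_order_value * checkout_failure_rate * minutes_elapsed
```

With 40 orders per minute, an $80 average order, 25% of checkouts failing, and 10 minutes elapsed, `estimated_revenue_at_risk(40, 80.0, 0.25, 10)` returns `8000.0`.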

SaaS Application

A project management SaaS uses external signals to:

Track API response times from multiple regions

Detect performance degradation in the EU region

Automatically assess impact based on active user count

Create a P2 incident and assign it to the infrastructure team

Scale up resources automatically while the team investigates

Update the status page with a targeted regional notification

Result: 70% reduction in customer-reported incidents.
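The detect-and-assess steps could look roughly like this sketch, which flags a region when its median response time exceeds twice its baseline and picks P2 or P3 from the number of active users there. Both thresholds, the region names, and the data shapes are assumptions for illustration.

```python
from statistics import median
from typing import Optional

# Assumed thresholds; tune them to your own baselines and user base.
DEGRADATION_FACTOR = 2.0        # median latency this many times baseline counts as degraded
P2_MIN_ACTIVE_USERS = 1_000     # affected active users needed for P2 instead of P3


def degraded_regions(samples: dict[str, list[float]],
                     baselines: dict[str, float]) -> list[str]:
    """Regions whose median response time (ms) exceeds baseline * DEGRADATION_FACTOR."""
    return sorted(
        region for region, latencies in samples.items()
        if latencies and region in baselines
        and median(latencies) > baselines[region] * DEGRADATION_FACTOR
    )


def assess(samples: dict[str, list[float]],
           baselines: dict[str, float],
           active_users: dict[str, int]) -> Optional[dict]:
    """Return an incident proposal for degraded regions, or None if all look healthy."""
    regions = degraded_regions(samples, baselines)
    if not regions:
        return None
    affected = sum(active_users.get(r, 0) for r in regions)
    priority = "P2" if affected >= P2_MIN_ACTIVE_USERS else "P3"
    return {"regions": regions, "affected_users": affected, "priority": priority}
```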

Financial Services

A fintech company uses automation to:

Monitor third-party KYC provider availability

Detect a vendor outage via status page aggregation and prioritize vendor outages so critical issues escalate first

Automatically switch to a backup provider

Create a P3 incident for tracking and follow-up

Queue affected transactions for reprocessing

Generate a compliance report for regulators

Result: Zero customer impact during vendor outage.
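A minimal sketch of the failover logic, assuming status changes for the primary KYC provider arrive as events (for example from a status page aggregator webhook). The class, provider names, and status values are hypothetical, and compliance reporting is left out.

```python
from enum import Enum


class VendorStatus(Enum):
    OPERATIONAL = "operational"
    DEGRADED = "degraded"
    OUTAGE = "outage"


class KycRouter:
    """Routes KYC checks to the primary provider unless it reports an outage."""

    def __init__(self, primary: str, backup: str):
        self.primary = primary
        self.backup = backup
        self.active = primary
        self.incidents: list[dict] = []
        self.retry_queue: list[str] = []

    def on_status_change(self, vendor: str, status: VendorStatus) -> None:
        if vendor != self.primary:
            return
        if status is VendorStatus.OUTAGE and self.active == self.primary:
            self.active = self.backup
            # Failover contains the customer impact, so a P3 tracking incident is enough.
            self.incidents.append({
                "priority": "P3",
                "summary": f"{vendor} outage; switched to {self.backup}",
            })
        elif status is VendorStatus.OPERATIONAL and self.active == self.backup:
            # Fail back once the primary reports healthy again.
            self.active = self.primary

    def queue_for_reprocessing(self, txn_id: str) -> None:
        """Hold a transaction that failed against the primary so it can be retried."""
        self.retry_queue.append(txn_id)
```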

Best Practices for Automated Triage

Success with automated triage requires ongoing refinement:

Start simple: Begin with clear, high-confidence rules before adding complexity.

Measure effectiveness: Track metrics like false positive rate, mean time to acknowledge, and escalation accuracy.

Regular reviews: Analyze triage decisions monthly to identify patterns and improvement opportunities.

Maintain override capability: Always allow human judgment to supersede automation when needed.

Document everything: Clear documentation ensures everyone understands how and why decisions are made.

Common Pitfalls to Avoid

Learn from others' mistakes:

Over-automation: Not every decision should be automated. Reserve human judgment for complex scenarios.

Rigid rules: Build flexibility into your system to handle edge cases and evolving business needs.

Ignoring feedback: Listen to your team about what's working and what isn't.

Poor signal quality: Ensure external monitors are reliable before using them for critical decisions.

Lack of testing: Regularly test your automation with simulated incidents.
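One common way to address poor signal quality is to act only when several probe locations fail on consecutive runs, so a single flaky probe cannot page anyone. This sketch defaults to two locations and two consecutive failures; both values are illustrative, not recommendations.

```python
from collections import defaultdict, deque


class ConfirmedFailure:
    """Declares an outage only when enough probe locations fail consecutively."""

    def __init__(self, min_locations: int = 2, consecutive: int = 2):
        self.min_locations = min_locations
        self.consecutive = consecutive
        # Per-location history of the most recent check results (True = passed).
        self.history = defaultdict(lambda: deque(maxlen=self.consecutive))

    def record(self, location: str, ok: bool) -> bool:
        """Record one check result and report whether the outage is confirmed."""
        self.history[location].append(ok)
        failing = [
            loc for loc, runs in self.history.items()
            if len(runs) == self.consecutive and not any(runs)
        ]
        return len(failing) >= self.min_locations
```

Feeding it a scripted sequence of probe results, in effect a simulated incident, is also a cheap way to test the rule before relying on it.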

Measuring Success

Track these metrics to validate your automated triage system:

Time to triage: How quickly incidents are categorized and routed

Accuracy rate: Percentage of correctly prioritized incidents

Escalation efficiency: How often incidents reach the right team first

MTTR improvement: Overall reduction in resolution time

Team satisfaction: Survey on-call engineers about their experience
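Most of these metrics can be computed from incident records once you store a few timestamps and decisions. This sketch assumes the priority after human review is the ground truth for accuracy, the team that resolved the incident is the right team for escalation efficiency, and MTTR runs from detection to resolution. Team satisfaction still needs a survey, and MTTR improvement means comparing `mttr_min` against a baseline from before automation.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class IncidentRecord:
    detected_at: datetime
    triaged_at: datetime       # when a priority and owner were assigned
    resolved_at: datetime
    auto_priority: str         # priority chosen by the automation
    final_priority: str        # priority after human review (assumed ground truth)
    first_team: str            # team the incident was routed to first
    resolving_team: str        # team that actually resolved it


def minutes(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 60


def triage_metrics(incidents: list[IncidentRecord]) -> dict[str, float]:
    if not incidents:
        raise ValueError("need at least one incident")
    n = len(incidents)
    return {
        "mean_time_to_triage_min": mean(minutes(i.detected_at, i.triaged_at) for i in incidents),
        "accuracy_rate": sum(i.auto_priority == i.final_priority for i in incidents) / n,
        "escalation_efficiency": sum(i.first_team == i.resolving_team for i in incidents) / n,
        "mttr_min": mean(minutes(i.detected_at, i.resolved_at) for i in incidents),
    }
```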

When properly implemented, automating triage using external monitoring signals transforms incident response from reactive scrambling to proactive problem-solving. Teams spend less time figuring out what's wrong and more time fixing it.

Frequently Asked Questions

What's the difference between internal and external monitoring signals for triage?

Internal monitoring signals come from within your infrastructure (server metrics, application logs), while external monitoring signals observe your services from the outside, as a user would. External signals are crucial for automated triage because they catch issues that internal monitoring might miss, such as DNS problems, CDN failures, or regional network issues.

How do I prevent automated triage from creating alert fatigue?

Implement smart deduplication rules, aggregate related alerts into single incidents, and continuously tune your thresholds based on actual impact. Set up escalation policies that only notify additional people when truly necessary, and use automation to suppress low-priority alerts during major incidents.
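A minimal sketch of deduplication, assuming alerts are keyed by service and check name and that a repeat within 30 minutes of the previous matching alert belongs to the same incident. The key, window, and incident fields are assumptions; many incident management platforms also support a deduplication key natively.

```python
from datetime import datetime, timedelta


class Deduplicator:
    """Folds repeat alerts for the same service and check into one open incident."""

    def __init__(self, window: timedelta = timedelta(minutes=30)):
        self.window = window
        self.open: dict[tuple[str, str], dict] = {}   # (service, check) -> incident

    def ingest(self, service: str, check: str, location: str,
               at: datetime) -> tuple[dict, bool]:
        """Return (incident, is_new). Only a new incident should notify anyone."""
        # Location is deliberately left out of the key so the same failure seen
        # from several probe regions collapses into a single incident.
        key = (service, check)
        incident = self.open.get(key)
        if incident is not None and at - incident["last_seen"] <= self.window:
            incident["last_seen"] = at
            incident["locations"].add(location)
            incident["count"] += 1
            return incident, False
        incident = {"service": service, "check": check, "locations": {location},
                    "count": 1, "first_seen": at, "last_seen": at}
        self.open[key] = incident
        return incident, True
```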

Can automated triage work with multiple monitoring tools?

Yes, modern incident management platforms can ingest signals from various monitoring tools through APIs and webhooks. The key is normalizing data from different sources into a common format your triage rules can process. Many teams use middleware or integration platforms to coordinate between tools.
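Here is a sketch of that normalization layer: each source gets a small adapter that converts its payload into one shared `Signal` type. The payload shapes are hypothetical stand-ins, not the actual webhook formats of any particular tool; check each vendor's documentation for the real field names.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Signal:
    source: str
    service: str
    location: str
    healthy: bool
    observed_at: datetime


def from_synthetic_webhook(payload: dict) -> Signal:
    # Hypothetical shape: {"check": ..., "region": ..., "state": "up" | "down", "ts": epoch seconds}
    return Signal(
        source="synthetic",
        service=payload["check"],
        location=payload["region"],
        healthy=payload["state"] == "up",
        observed_at=datetime.fromtimestamp(payload["ts"], tz=timezone.utc),
    )


def from_status_page(payload: dict) -> Signal:
    # Hypothetical shape: {"vendor": ..., "status": ..., "updated_at": ISO 8601 with offset}
    return Signal(
        source="status-page",
        service=payload["vendor"],
        location="global",
        healthy=payload["status"] == "operational",
        observed_at=datetime.fromisoformat(payload["updated_at"]),
    )


NORMALIZERS = {"synthetic": from_synthetic_webhook, "status-page": from_status_page}


def normalize(kind: str, payload: dict) -> Signal:
    """Convert a tool-specific payload into the common Signal format."""
    normalizer = NORMALIZERS.get(kind)
    if normalizer is None:
        raise ValueError(f"no normalizer registered for {kind!r}")
    return normalizer(payload)
```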

What external monitoring signals are most important for automated triage?

The most critical signals typically include synthetic transaction success rates, real user experience metrics, third-party service availability, and geographic availability patterns. The specific mix depends on your architecture and business model. E-commerce sites might prioritize checkout flow monitoring, while API-first companies focus on endpoint availability.

How often should I update my automated triage rules?

Review your triage rules monthly at minimum, and after every major incident or architectural change. Look for patterns in false positives, missed escalations, or changing business priorities. Some teams implement A/B testing for triage rules to validate changes before full deployment.
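Shadow evaluation is a lighter alternative to a full A/B test: the candidate rules see every alert but never act, and disagreements with the live rules are logged for review. This is a minimal sketch with hypothetical rule functions that take an alert dict and return a priority string.

```python
from typing import Callable, Iterable

Rule = Callable[[dict], str]   # takes an alert, returns a priority such as "P2"


def shadow_compare(alerts: Iterable[dict], current: Rule, candidate: Rule) -> dict:
    """Run a candidate rule set next to the live one without letting it act.

    Only the current rule's decision would be used for paging; the candidate's
    decisions are recorded so disagreements can be reviewed before rollout.
    """
    total = 0
    disagreements = []
    for alert in alerts:
        total += 1
        live, proposed = current(alert), candidate(alert)
        if live != proposed:
            disagreements.append({"alert": alert, "current": live, "candidate": proposed})
    rate = len(disagreements) / total if total else 0.0
    return {"total": total, "disagreement_rate": rate, "disagreements": disagreements}
```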

What skills does my team need to implement automated triage effectively?

Your team needs a mix of technical and business understanding. Technical skills include API integration, scripting, and monitoring tool configuration. Business skills involve understanding service dependencies, customer impact, and priority trade-offs. Most importantly, they need strong communication skills to document and explain triage decisions.

Nuno Tomas

Founder of IsDown
