How to Reduce Downtime by 90% with Proactive Monitoring Strategies

Downtime costs businesses an average of $5,600 per minute according to Gartner research. For many organizations, even a few hours of unplanned outages can mean lost revenue, damaged reputation, and frustrated customers. The good news? You can reduce downtime by up to 90% by implementing the right proactive monitoring strategies.

This guide walks you through practical, proven methods to minimize downtime through early detection, automated responses, and strategic prevention measures that actually work in production environments.

Understanding the Real Cost of Reactive vs. Proactive Approaches

Most teams operate reactively: they wait for something to break, then scramble to fix it. This approach tends to produce longer outages because:

- Detection takes longer (often relying on customer complaints)

- Root cause identification happens under pressure

- Solutions are rushed and may introduce new problems

- Team morale suffers from constant firefighting

Proactive monitoring flips this model. By catching issues before they escalate, you can reduce unplanned downtime dramatically while maintaining team sanity.

The 5 Pillars of Proactive Monitoring

1. Comprehensive Service Coverage

You can't prevent what you can't see. Start by mapping every critical service, including:

- Internal applications and infrastructure

- Third-party APIs and SaaS dependencies

- Network components and CDNs

- Database and storage systems

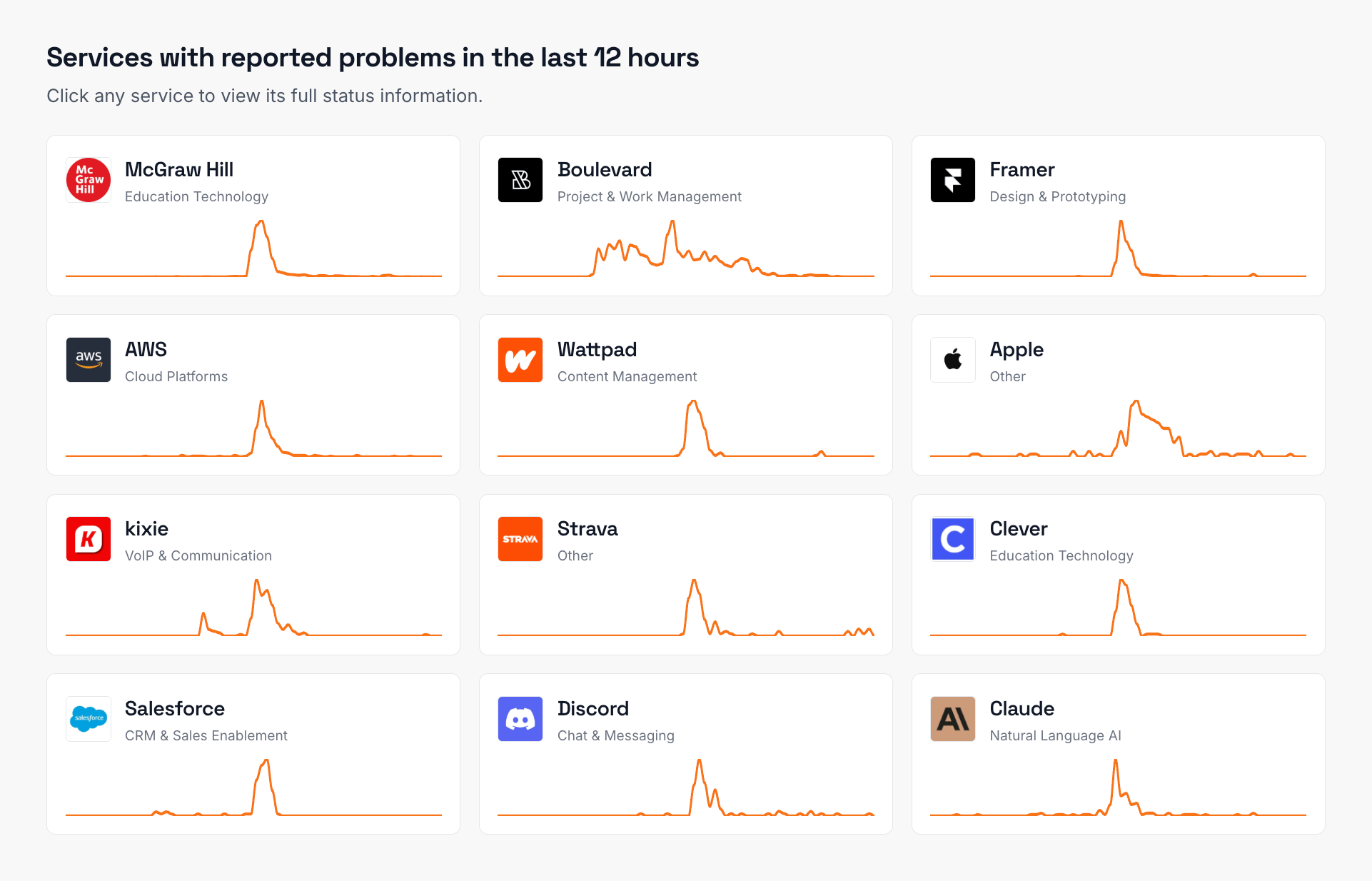

Many teams forget about external dependencies until they fail. Tools like status page aggregators can monitor all your third-party services from one dashboard, ensuring nothing slips through the cracks.
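Many vendors host their public status pages on Atlassian Statuspage, which exposes a machine-readable summary at `/api/v2/status.json` whose `status.indicator` field is one of `none`, `minor`, `major`, or `critical`. The sketch below shows the core of what an aggregator does with that data: fetch each vendor's status, normalize it to a comparable severity, and surface the worst one. It assumes every vendor uses that Statuspage format; the vendor names and URLs are hypothetical placeholders, and a real tool would also need to handle other status page formats, retries, and rate limits.

```python
import json
import urllib.request

# Statuspage "indicator" values mapped to a comparable severity number.
SEVERITY = {"none": 0, "minor": 1, "major": 2, "critical": 3}

# Hypothetical vendors; replace with the status pages you actually depend on.
VENDORS = {
    "payments": "https://status.example-payments.com/api/v2/status.json",
    "email": "https://status.example-email.com/api/v2/status.json",
}


def parse_statuspage(payload: str) -> tuple[str, int]:
    """Return (description, severity) from a Statuspage status.json body."""
    status = json.loads(payload)["status"]
    # Unknown indicators are treated as "minor" so they surface without paging anyone.
    return status["description"], SEVERITY.get(status["indicator"], 1)


def fetch_status(url: str, timeout: float = 5.0) -> tuple[str, int]:
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_statuspage(resp.read().decode("utf-8"))


def check_vendors(vendors: dict[str, str]) -> dict[str, tuple[str, int]]:
    results = {}
    for name, url in vendors.items():
        try:
            results[name] = fetch_status(url)
        except (OSError, ValueError, KeyError) as exc:
            # An unreachable or malformed status page is itself worth a look.
            results[name] = (f"status check failed: {exc}", 1)
    return results


def worst(results: dict[str, tuple[str, int]]) -> tuple[str, str, int]:
    """Return (vendor, description, severity) for the most severe vendor."""
    name, (description, severity) = max(results.items(), key=lambda kv: kv[1][1])
    return name, description, severity
```

Calling `worst(check_vendors(VENDORS))` on a schedule gives a single "is anything upstream broken?" answer; parsing is kept separate from fetching so it can be tested without network access.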

2. Intelligent Threshold Setting

Static thresholds often fire on normal fluctuations in load, which leads to alert fatigue. Instead, implement:

- Dynamic baselines that adjust to normal usage patterns

- Anomaly detection for unusual behavior

- Composite alerts that trigger only when multiple conditions align

- Escalating severity based on duration and impact

For example, a CPU spike during scheduled backups is normal. The same spike at 2 PM on a Tuesday warrants investigation.
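One simple way to get that behavior is a seasonal baseline: keep separate history for each (weekday, hour) slot and flag values far outside that slot's normal range. The sketch below assumes a plain mean plus or minus 3 standard deviations per slot, which is an illustrative choice rather than what any particular monitoring product does, and pairs it with a composite rule that fires only when at least two signals agree. The metric and signal names are hypothetical.

```python
import statistics
from collections import defaultdict
from datetime import datetime, timedelta


class SeasonalBaseline:
    """Per-slot baseline: one sample list for each (weekday, hour) of the week.

    Recurring load, such as a weekly backup, becomes part of its slot's "normal",
    so the same value can be fine in one slot and anomalous in another.
    """

    def __init__(self, k: float = 3.0, min_samples: int = 5, min_stdev: float = 1.0):
        self.k = k                      # standard deviations that count as anomalous
        self.min_samples = min_samples  # don't judge a slot until it has enough history
        self.min_stdev = min_stdev      # floor so a very flat history doesn't flag tiny changes
        self.samples: dict[tuple[int, int], list[float]] = defaultdict(list)

    @staticmethod
    def slot(ts: datetime) -> tuple[int, int]:
        return ts.weekday(), ts.hour

    def observe(self, ts: datetime, value: float) -> None:
        self.samples[self.slot(ts)].append(value)

    def is_anomalous(self, ts: datetime, value: float) -> bool:
        history = self.samples.get(self.slot(ts), [])
        if len(history) < self.min_samples:
            return False
        mean = statistics.fmean(history)
        stdev = max(statistics.stdev(history), self.min_stdev)
        return abs(value - mean) > self.k * stdev


def composite_alert(conditions: dict[str, bool], min_true: int = 2) -> bool:
    """Fire only when at least `min_true` independent signals agree."""
    return sum(conditions.values()) >= min_true


# Five weeks of history: a weekly backup pushes CPU high early on Sunday mornings.
baseline = SeasonalBaseline()
sunday_2am = datetime(2024, 1, 7, 2)    # 2024-01-07 was a Sunday
tuesday_2pm = datetime(2024, 1, 2, 14)  # 2024-01-02 was a Tuesday
for week, (backup_cpu, normal_cpu) in enumerate(zip([85, 88, 90, 87, 86], [30, 32, 35, 31, 33])):
    baseline.observe(sunday_2am + timedelta(weeks=week), backup_cpu)
    baseline.observe(tuesday_2pm + timedelta(weeks=week), normal_cpu)

print(baseline.is_anomalous(sunday_2am + timedelta(weeks=5), 88))   # False
print(baseline.is_anomalous(tuesday_2pm + timedelta(weeks=5), 88))  # True
print(composite_alert({"cpu_anomalous": True, "error_rate_high": True, "p95_latency_high": False}))  # True
```

The same 88% CPU reading is ignored during the backup window and flagged on Tuesday afternoon, which is the distinction a single static threshold cannot make.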

3. Automated Response Workflows

When issues are detected, every second counts. Automation can:

- Scale resources automatically during traffic spikes

- Restart hung services

- Failover to backup systems

- Roll back problematic deployments

- Create incident tickets with relevant context

These automated responses can resolve many issues before they impact users, helping you minimize downtime without manual intervention.
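A common pattern for this is a runbook table that maps alert types to actions, with a cap on how many times automation may retry before a human takes over. The sketch below assumes hypothetical alert kinds and stub actions that only describe what they would do; real versions would call your orchestrator, cloud API, or deploy tool.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Alert:
    kind: str      # hypothetical alert types, e.g. "service_hung"
    service: str
    context: dict = field(default_factory=dict)


# Hypothetical actions: they return a description instead of touching real systems.
def restart_service(alert: Alert) -> str:
    return f"restart {alert.service}"


def scale_out(alert: Alert) -> str:
    return f"scale {alert.service} by +{alert.context.get('extra_replicas', 1)}"


def roll_back(alert: Alert) -> str:
    return f"roll back {alert.service} to {alert.context.get('previous_version', 'previous release')}"


RUNBOOK: dict[str, Callable[[Alert], str]] = {
    "service_hung": restart_service,
    "traffic_spike": scale_out,
    "bad_deploy": roll_back,
}


class Remediator:
    """Runs the matching runbook action, but only a bounded number of times per issue."""

    def __init__(self, max_attempts: int = 2):
        self.max_attempts = max_attempts
        self.attempts: dict[tuple[str, str], int] = {}

    def handle(self, alert: Alert) -> str:
        key = (alert.kind, alert.service)
        tried = self.attempts.get(key, 0)
        action = RUNBOOK.get(alert.kind)
        if action is None or tried >= self.max_attempts:
            # No known fix, or automation already failed: open a ticket for a human.
            return f"ticket: {alert.kind} on {alert.service} after {tried} automated attempt(s)"
        self.attempts[key] = tried + 1
        return action(alert)


remediator = Remediator(max_attempts=2)
hung = Alert("service_hung", "checkout-api")
print(remediator.handle(hung))  # restart checkout-api
print(remediator.handle(hung))  # restart checkout-api
print(remediator.handle(hung))  # ticket: service_hung on checkout-api after 2 automated attempt(s)
```

The attempt cap matters: a restart loop that never fixes the problem just hides it, so escalation to a ticket is part of the automation, not a fallback to it.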

4. Predictive Analytics and Trend Analysis

Historical data reveals patterns that predict future failures:

- Disk space consumption trends

- Memory leak patterns

- API response time degradation

- Error rate increases

By analyzing these trends, you can schedule preventive maintenance during low-impact windows rather than dealing with emergency outages.
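For disk space, the simplest form of trend analysis is to fit a straight line to recent usage and extrapolate to capacity. The sketch below assumes roughly linear growth (real usage can jump or grow non-linearly) and a hypothetical two-week lead time for scheduling maintenance.

```python
from typing import Optional


def hours_until_full(samples: list[tuple[float, float]], capacity_gb: float) -> Optional[float]:
    """Fit a least-squares line to (hour, used_gb) samples and extrapolate to capacity.

    Returns hours remaining after the latest sample, or None if usage is not growing.
    """
    n = len(samples)
    if n < 2:
        return None
    mean_t = sum(t for t, _ in samples) / n
    mean_u = sum(u for _, u in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        return None
    slope = sum((t - mean_t) * (u - mean_u) for t, u in samples) / var_t  # GB per hour
    if slope <= 0:
        return None
    intercept = mean_u - slope * mean_t
    hours_at_full = (capacity_gb - intercept) / slope
    latest_t = max(t for t, _ in samples)
    return max(hours_at_full - latest_t, 0.0)


def needs_maintenance(samples, capacity_gb, lead_time_hours=14 * 24):
    """True when the disk is projected to fill within the lead time (two weeks by default)."""
    remaining = hours_until_full(samples, capacity_gb)
    return remaining is not None and remaining <= lead_time_hours


# Daily samples: 10 GB/day growth on a 500 GB volume.
daily = [(0, 400.0), (24, 410.0), (48, 420.0), (72, 430.0)]
print(round(hours_until_full(daily, 500.0), 1))  # 168.0 (about 7 days)
print(needs_maintenance(daily, 500.0))           # True
```

Seven days of headroom is enough time to expand the volume or clean up during a quiet window instead of at 3 AM when writes start failing.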

5. Cross-Team Visibility and Communication

Silos kill response times. Ensure:

- All teams have access to monitoring dashboards

- Alerts route to the right people immediately

- Status updates flow automatically to stakeholders

- Post-incident reviews involve all relevant parties
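Routing alerts "to the right people immediately" usually comes down to an ownership map plus severity rules. The sketch below assumes a hypothetical service-to-team map, a catch-all on-call team, and string targets such as `page:` and `chat:` standing in for whatever paging and chat tools you actually use.

```python
# Hypothetical ownership map; in practice this often lives in a service catalog.
OWNERS = {
    "checkout-api": "payments-team",
    "search": "discovery-team",
}
FALLBACK_TEAM = "platform-oncall"  # hypothetical catch-all so no alert goes unrouted


def route(service: str, severity: str) -> list[str]:
    """Return notification targets as 'channel:recipient' strings."""
    team = OWNERS.get(service, FALLBACK_TEAM)
    targets = []
    if severity in ("critical", "high"):
        targets.append(f"page:{team}")         # interrupt the owning team
    else:
        targets.append(f"chat:{team}")         # visible, but not urgent
    if severity == "critical":
        targets.append("status:stakeholders")  # keep non-engineering teams informed
    targets.append("dashboard:all-teams")      # every alert is visible to everyone
    return targets


print(route("checkout-api", "critical"))
# ['page:payments-team', 'status:stakeholders', 'dashboard:all-teams']
```

The fallback team is the important design choice: an alert for a service nobody owns should still reach a person rather than disappear.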

Implementing Your Proactive Monitoring Strategy

Phase 1: Audit and Assessment (Weeks 1-2)

Start by documenting:

- All services and dependencies

- Current monitoring coverage

- Recent incident patterns

- Team response procedures

Identify the biggest gaps first. If you're missing visibility into critical third-party services, that's often the easiest win.
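Even a small script over your service inventory can turn the audit into a prioritized list. The sketch below assumes a hypothetical inventory format with `critical`, `third_party`, and `monitored` flags, and puts unmonitored third-party services first, following the "easiest win" advice above.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    critical: bool
    third_party: bool
    monitored: bool


def coverage_gaps(inventory: list[Service]) -> list[str]:
    """Unmonitored critical services, third-party ones first."""
    gaps = [s for s in inventory if s.critical and not s.monitored]
    # sorted() is stable, so services keep inventory order within each group.
    return [s.name for s in sorted(gaps, key=lambda s: not s.third_party)]


# Hypothetical inventory.
inventory = [
    Service("orders-db", critical=True, third_party=False, monitored=False),
    Service("payment-gateway", critical=True, third_party=True, monitored=False),
    Service("checkout-api", critical=True, third_party=False, monitored=True),
    Service("email-provider", critical=False, third_party=True, monitored=False),
]
print(coverage_gaps(inventory))  # ['payment-gateway', 'orders-db']
```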

Phase 2: Tool Selection and Setup (Weeks 3-4)

Choose monitoring tools that:

- Integrate with your existing stack

- Support your specific service types

- Provide flexible alerting options

- Scale with your growth

For comprehensive coverage, you'll likely need a combination of infrastructure monitoring, APM, and external service monitoring solutions.

Phase 3: Alert Tuning and Automation (Weeks 5-8)

This is where most teams stumble. Start conservatively:

- Set up basic health checks

- Monitor for one week

- Adjust thresholds based on false positives

- Add automation for clear-cut scenarios

- Gradually expand coverage
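After the observation week, "adjust thresholds based on false positives" is easier when you know which rules are noisy. The sketch below assumes you record, for each alert that fired, whether it needed action; the 10% target mirrors the false positive goal suggested later in this guide, and the rule names and minimum-fires cutoff are hypothetical.

```python
from collections import Counter


def rules_to_tune(outcomes, max_fp_rate=0.10, min_fires=5):
    """outcomes: iterable of (rule_name, was_actionable) pairs from the observation week.

    Returns {rule: false_positive_rate} for rules that fired often enough to judge
    and whose false-positive rate exceeds the target.
    """
    fired = Counter()
    false_positives = Counter()
    for rule, actionable in outcomes:
        fired[rule] += 1
        if not actionable:
            false_positives[rule] += 1
    return {
        rule: false_positives[rule] / count
        for rule, count in fired.items()
        if count >= min_fires and false_positives[rule] / count > max_fp_rate
    }


week = (
    [("disk_above_80pct", False)] * 6 + [("disk_above_80pct", True)] * 4
    + [("http_5xx_rate", True)] * 8
    + [("new_latency_rule", False)] * 2
)
print(rules_to_tune(week))  # {'disk_above_80pct': 0.6}
```

`new_latency_rule` is skipped because two firings are too few to judge; it stays in place for another week rather than being tuned on anecdote.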

Phase 4: Process Integration (Ongoing)

Make monitoring part of your development lifecycle:

- Add monitoring requirements to your definition of done

- Include alert setup in deployment checklists

- Review monitoring data in sprint retrospectives

- Update thresholds as systems evolve

Measuring Success: Key Metrics to Track

To verify your strategy works, monitor:

- Mean Time to Detection (MTTD): Target a 50-70% decrease

- Mean Time to Resolution (MTTR): Target a 40-60% reduction

- Incident frequency: Expect an initial increase as better coverage surfaces previously unnoticed issues, then a steady decline

- False positive rate: Keep below 10%

- Automation success rate: Track how often automated responses work
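MTTD and MTTR are just averages over incident timestamps, so they are easy to compute from an incident log. The sketch below assumes each incident records when it started, was detected, and was resolved, and measures MTTR from the start of the incident; some teams measure it from detection instead, so pick one definition and keep it consistent. The incident data is made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    started: datetime   # when the problem actually began (often known only after the fact)
    detected: datetime  # when an alert or a human noticed it
    resolved: datetime  # when service was restored


def mttd_minutes(incidents: list[Incident]) -> float:
    return mean((i.detected - i.started).total_seconds() / 60 for i in incidents)


def mttr_minutes(incidents: list[Incident]) -> float:
    # Measured from start to resolution here; some teams measure from detection instead.
    return mean((i.resolved - i.started).total_seconds() / 60 for i in incidents)


def pct_reduction(before: float, after: float) -> float:
    return 100 * (before - after) / before


def incident(detect_after_min: int, resolve_after_min: int) -> Incident:
    start = datetime(2024, 1, 1, 9, 0)
    return Incident(start, start + timedelta(minutes=detect_after_min),
                    start + timedelta(minutes=resolve_after_min))


before = [incident(30, 120), incident(50, 180)]
after = [incident(10, 60), incident(14, 80)]
print(pct_reduction(mttd_minutes(before), mttd_minutes(after)))            # 70.0
print(round(pct_reduction(mttr_minutes(before), mttr_minutes(after)), 1))  # 53.3
```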

Common Pitfalls and How to Avoid Them

Alert Fatigue

Too many alerts lead to ignored alerts. Combat this by:

- Grouping related alerts

- Setting appropriate severity levels

- Using intelligent routing

- Regularly reviewing and pruning alerts
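Grouping related alerts can be as simple as merging alerts for the same service that arrive close together. The sketch below assumes alerts arrive as hypothetical `(timestamp, service, alert_name)` tuples and uses a sliding 5-minute window; note that a steady stream of alerts keeps extending the same group, which is usually what you want during a single incident.

```python
from datetime import datetime, timedelta


def group_alerts(alerts, window=timedelta(minutes=5)):
    """Group (timestamp, service, alert_name) tuples per service.

    An alert joins the service's open group if it arrives within `window` of the
    group's most recent alert; otherwise it starts a new group.
    """
    groups = []
    open_group = {}  # service -> the group currently collecting alerts
    for ts, service, name in sorted(alerts):
        group = open_group.get(service)
        if group is not None and ts - group["last"] <= window:
            group["alerts"].append(name)
            group["last"] = ts
        else:
            group = {"service": service, "first": ts, "last": ts, "alerts": [name]}
            groups.append(group)
            open_group[service] = group
    return groups


t0 = datetime(2024, 1, 1, 10, 0)
raw = [
    (t0, "checkout-api", "high_latency"),
    (t0 + timedelta(minutes=2), "checkout-api", "error_rate"),
    (t0 + timedelta(minutes=1), "search", "high_latency"),
    (t0 + timedelta(minutes=4), "checkout-api", "pod_restarts"),
    (t0 + timedelta(minutes=30), "checkout-api", "high_latency"),
]
for g in group_alerts(raw):
    print(g["service"], g["first"].strftime("%H:%M"), g["alerts"])
# checkout-api 10:00 ['high_latency', 'error_rate', 'pod_restarts']
# search 10:01 ['high_latency']
# checkout-api 10:30 ['high_latency']
```

Five raw alerts become three notifications, and the first one already tells the responder that latency, errors, and restarts are the same problem.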

Incomplete Coverage

Teams often monitor infrastructure but miss:

- Third-party API dependencies

- Client-side performance

- Business logic errors

- Data pipeline health

Lack of Context

Alerts without context waste precious time. Include:

- Recent changes or deployments

- Current traffic levels

- Related service status

- Suggested remediation steps
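Attaching that context can happen automatically when the alert is created. The sketch below assumes plain in-memory inputs standing in for your deploy tool, metrics store, dependency checks, and runbook wiki (all hypothetical sources), and a 2-hour lookback for "recent" deploys.

```python
from datetime import datetime, timedelta


def enrich_alert(alert, deploys, traffic_rps, dependency_status, runbooks,
                 lookback=timedelta(hours=2)):
    """Attach the context an on-call engineer usually has to look up by hand."""
    service, at = alert["service"], alert["at"]
    recent_changes = [
        d["version"] for d in deploys
        if d["service"] == service and at - lookback <= d["at"] <= at
    ]
    degraded_deps = {dep: state for dep, state in dependency_status.items()
                     if state != "operational"}
    return {
        **alert,
        "recent_changes": recent_changes,
        "traffic_rps": traffic_rps.get(service),
        "degraded_dependencies": degraded_deps,
        "suggested_steps": runbooks.get(alert["name"], ["No runbook yet: add one after this incident"]),
    }


now = datetime(2024, 1, 1, 14, 0)
enriched = enrich_alert(
    {"service": "checkout-api", "name": "error_rate", "at": now},
    deploys=[{"service": "checkout-api", "version": "v42", "at": now - timedelta(minutes=20)},
             {"service": "checkout-api", "version": "v41", "at": now - timedelta(days=1)}],
    traffic_rps={"checkout-api": 850},
    dependency_status={"payment-gateway": "degraded", "orders-db": "operational"},
    runbooks={"error_rate": ["Check recent deploys", "Roll back if errors started after a deploy"]},
)
print(enriched["recent_changes"], enriched["degraded_dependencies"])
# ['v42'] {'payment-gateway': 'degraded'}
```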

Real-World Results

Organizations implementing comprehensive proactive monitoring typically see:

- 70-90% reduction in unplanned downtime

- 50% faster incident resolution

- 80% fewer customer-reported issues

- 60% reduction in on-call burden

The key is consistency. Downtime prevention isn't a one-time project—it's an ongoing practice that improves with iteration.

Getting Started Today

You don't need to implement everything at once. Start with:

- Monitor your most critical service end-to-end

- Set up one automated response for a common issue

- Create a simple dashboard visible to all teams

- Run one post-incident review using monitoring data

Each small improvement compounds. Within 90 days, you should see measurable reductions in both the frequency and the duration of outages.

Proactive monitoring isn't about predicting the future—it's about being prepared for it. By implementing these strategies systematically, you can transform from a reactive firefighting mode to a proactive stance that keeps your services running smoothly and your team sleeping soundly.

Frequently Asked Questions

What's the fastest way to reduce downtime in my organization?

The fastest way to reduce downtime is to implement comprehensive monitoring for both internal systems and external dependencies, combined with automated response workflows for common issues. Start with your most critical services and expand coverage gradually.

How much should we invest in monitoring tools?

A good rule of thumb is to invest 5-10% of your potential downtime cost in monitoring and prevention. Calculate your hourly downtime cost, estimate how many hours of downtime you would expect per year without proper monitoring, multiply the two to get your expected annual downtime cost, and use that figure to justify investment in tools and processes.
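The arithmetic behind this rule of thumb is short enough to sketch. The example below uses the $5,600-per-minute Gartner average from the introduction and a hypothetical 10 hours of annual downtime; substitute your own figures, since both inputs vary widely between organizations.

```python
def monitoring_budget(cost_per_hour: float, downtime_hours_per_year: float,
                      low_pct: int = 5, high_pct: int = 10) -> tuple[float, float, float]:
    """Return (expected annual downtime cost, low budget, high budget)."""
    annual_cost = cost_per_hour * downtime_hours_per_year
    return annual_cost, annual_cost * low_pct / 100, annual_cost * high_pct / 100


# $5,600 per minute is $336,000 per hour.
# The 10 hours of yearly downtime is a hypothetical input, not a benchmark.
annual, low, high = monitoring_budget(5_600 * 60, 10)
print(f"${annual:,.0f} expected cost -> budget ${low:,.0f} to ${high:,.0f}")
# $3,360,000 expected cost -> budget $168,000 to $336,000
```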

Can proactive monitoring completely eliminate downtime?

While proactive monitoring dramatically reduces unplanned downtime, it cannot eliminate it entirely. The goal is to minimize both frequency and duration of incidents while ensuring rapid detection and response when issues do occur.

How do we monitor third-party services we don't control?

Use status page aggregators or monitoring tools that track external service health. These solutions monitor vendor status pages, API endpoints, and performance metrics to alert you about third-party issues before they impact your users.

What's the difference between monitoring and observability?

Monitoring tells you when something is wrong based on predefined metrics and thresholds. Observability provides deep insights into system behavior, allowing you to understand why issues occur and discover problems you didn't know to look for.

How often should we review and update our monitoring strategy?

Review your monitoring strategy quarterly at minimum, and after any major incident or system change. Regular reviews ensure your monitoring evolves with your infrastructure and catches new failure modes as they emerge.

Nuno Tomas

Founder of IsDown
