MTTD vs MTTR: Master Key Incident Response Metrics

When system failures strike, every second counts. The difference between a minor hiccup and a major outage often comes down to how quickly you detect and respond to incidents. That's where MTTD (Mean Time to Detect) and MTTR (Mean Time to Respond or Resolve) become your most critical performance indicators.

Understanding MTTD: Your Early Warning System

Mean Time to Detect (MTTD) measures the average time it takes to identify that an incident has occurred. This metric starts when a problem begins and ends when your team receives an alert or otherwise becomes aware of the issue.

Calculating MTTD is straightforward:

- Total time to detect all incidents ÷ Total number of incidents = MTTD

For example, if you had 5 incidents last month with detection times of 2, 5, 3, 8, and 7 minutes, your MTTD would be (2+5+3+8+7) ÷ 5 = 5 minutes.
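The same arithmetic in a few lines of Python, using the five detection times from the example. The helper name `mean_time` is illustrative only.

```python
# Detection times (minutes) for the five incidents in the example:
# time elapsed between each problem starting and the team becoming aware of it.
detection_minutes = [2, 5, 3, 8, 7]

def mean_time(durations):
    """Total time divided by the number of incidents."""
    if not durations:
        raise ValueError("need at least one incident")
    return sum(durations) / len(durations)

mttd = mean_time(detection_minutes)
print(f"MTTD = {mttd} minutes")  # MTTD = 5.0 minutes
```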

Decoding MTTR: Multiple Meanings, One Goal

MTTR can stand for different things depending on your organization:

Mean Time to Respond: The time between detection and when work begins

Mean Time to Resolve: The time from detection to full resolution

Mean Time to Repair: The time spent actively fixing the issue

Mean Time to Recovery: The time until normal operations resume

Regardless of which definition you use, the calculation is the same; only the start and end points change:

- Total time (to respond/resolve/repair/recover) ÷ Number of incidents = MTTR

The Relationship Between Detection and Response

MTTD and MTTR work together to paint a complete picture of your incident management capabilities. MTTD measures awareness; MTTR measures action. When MTTR is measured from detection to resolution, the two add up to the total time from when an incident occurs to when it's resolved.

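Consider this scenario: A database goes down at 2:00 PM. Your monitoring detects it at 2:03 PM (time to detect: 3 minutes). Your team starts working on it at 2:05 PM (time to respond: 2 minutes) and resolves it by 2:20 PM (time to resolve: 17 minutes from detection). The total downtime is 20 minutes. Averaged across many incidents, these per-incident times become your MTTD and MTTR.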
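The database scenario can be checked with Python's `datetime`. The calendar date below is arbitrary; only the clock times come from the scenario. For a single incident each value is just that incident's time; averaging them over many incidents gives MTTD and MTTR.

```python
from datetime import datetime

def minutes_between(start, end):
    """Elapsed minutes between two timestamps."""
    return (end - start).total_seconds() / 60

# Timeline from the scenario (the date is an arbitrary placeholder).
started = datetime(2024, 1, 1, 14, 0)     # database goes down
detected = datetime(2024, 1, 1, 14, 3)    # monitoring detects it
work_began = datetime(2024, 1, 1, 14, 5)  # team starts working
resolved = datetime(2024, 1, 1, 14, 20)   # service restored

time_to_detect = minutes_between(started, detected)      # 3.0
time_to_respond = minutes_between(detected, work_began)  # 2.0
time_to_resolve = minutes_between(detected, resolved)    # 17.0
total_downtime = minutes_between(started, resolved)      # 20.0

# Detection time plus resolution time (measured from detection) equals total downtime.
assert time_to_detect + time_to_resolve == total_downtime
```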

Other Critical Reliability Metrics

While MTTD and MTTR are essential, they're part of a larger ecosystem of KPIs:

MTBF (Mean Time Between Failures): Measures the average time between system failures. Calculate by dividing total operational time by the number of failures.

MTTF (Mean Time to Failure): Similar to MTBF but used for non-repairable systems. It measures the expected time until the first failure.

MTTA (Mean Time to Acknowledge): The time between alert generation and team acknowledgment. This bridges the gap between detection and response.
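MTBF and MTTA follow the same "total divided by count" pattern as MTTD. Here is a short sketch with hypothetical numbers (720 operating hours, 4 failures, three alerts). The function names are illustrative, not from any particular tool.

```python
from datetime import datetime, timedelta

def mtbf_hours(operational_hours, failure_count):
    """MTBF = total operational time / number of failures."""
    if failure_count == 0:
        raise ValueError("MTBF is undefined with zero failures")
    return operational_hours / failure_count

def mtta_minutes(alerts):
    """MTTA = mean of (acknowledged_at - alerted_at) across alerts."""
    delays = [(acked - fired).total_seconds() / 60 for fired, acked in alerts]
    return sum(delays) / len(delays)

# Hypothetical figures for illustration only.
print(mtbf_hours(operational_hours=720, failure_count=4))  # 180.0

t0 = datetime(2024, 1, 1, 9, 0)
alerts = [
    (t0, t0 + timedelta(minutes=2)),  # (alert fired, acknowledged)
    (t0, t0 + timedelta(minutes=6)),
    (t0, t0 + timedelta(minutes=1)),
]
print(mtta_minutes(alerts))  # 3.0
```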

Best Practices to Optimize MTTD

Reducing detection time requires proactive strategies:

Implement Real-Time Monitoring: Deploy comprehensive monitoring across all critical systems. This includes application performance monitoring, infrastructure monitoring, and synthetic monitoring for user-facing services.

Set Intelligent Alerts: Configure alerts based on meaningful thresholds. Too sensitive, and you'll face alert fatigue. Too lenient, and you'll miss critical issues. Effective alert management balances sensitivity with actionability.
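A common way to make alerts less twitchy is to require a threshold breach to persist before anyone is notified, similar in spirit to the `for` duration on Prometheus alerting rules. The class below is a hypothetical sketch, not any platform's API. The trade-off is explicit: requiring 3 consecutive samples adds up to two sampling intervals to MTTD in exchange for ignoring one-off spikes.

```python
from collections import deque

class SustainedThresholdAlert:
    """Fire only when `required` consecutive samples exceed `threshold`.

    Hypothetical helper: a single noisy spike does not page anyone,
    but a sustained breach does.
    """

    def __init__(self, threshold, required):
        self.threshold = threshold
        self.required = required
        self.recent = deque(maxlen=required)  # breach flags for the latest samples

    def observe(self, value):
        self.recent.append(value > self.threshold)
        return len(self.recent) == self.required and all(self.recent)

# Error rate (%) sampled once per minute; alert if above 5% for 3 minutes.
rule = SustainedThresholdAlert(threshold=5.0, required=3)
samples = [1.2, 9.0, 2.1, 6.5, 7.0, 8.2, 3.0]
fired = [rule.observe(s) for s in samples]
print(fired)  # the lone 9.0 spike is ignored; only the 6.5, 7.0, 8.2 run fires
```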

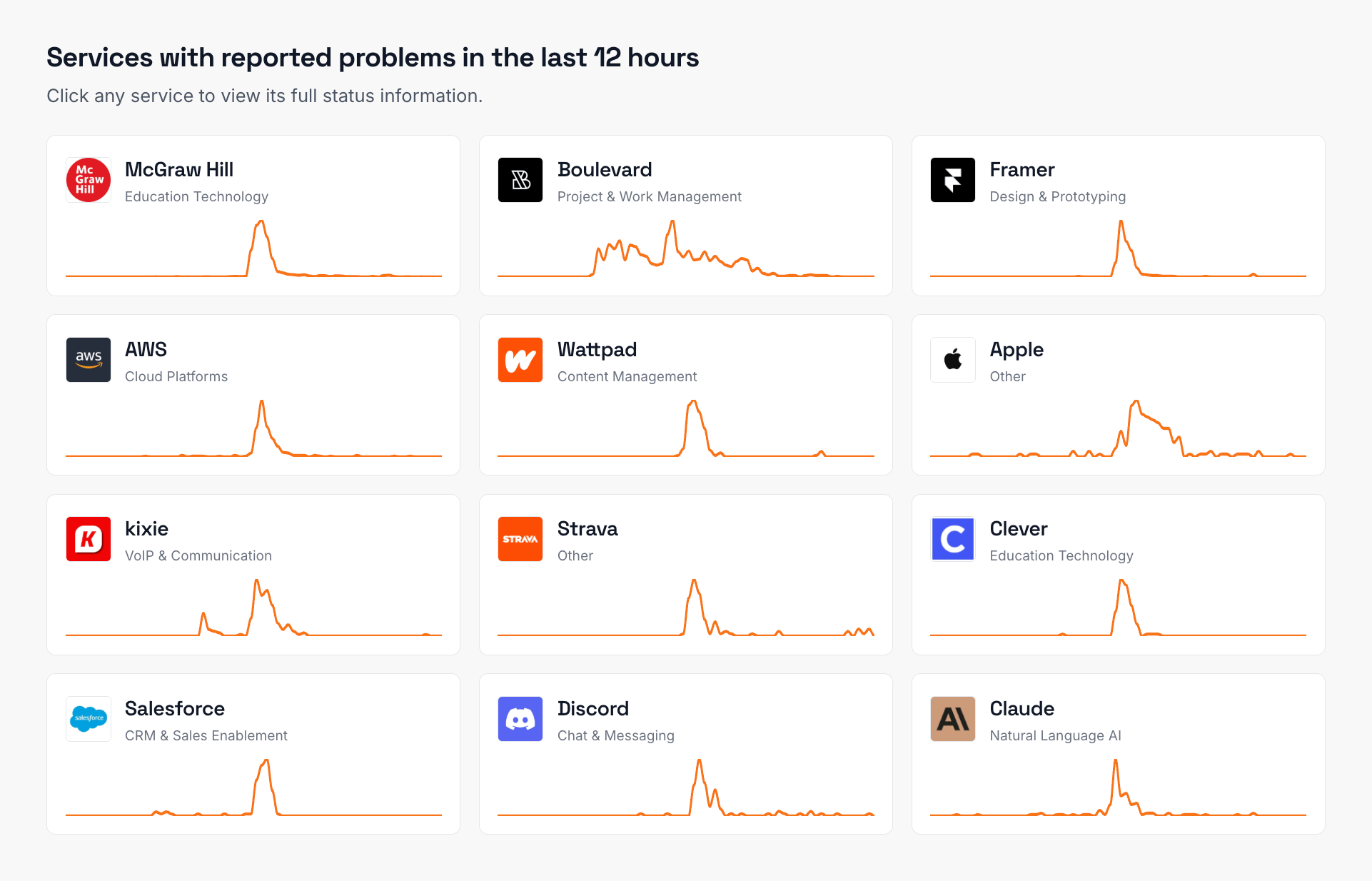

Monitor Dependencies: Track third-party services and APIs that your system relies on. External service failures can cascade into your environment, so early detection is crucial. Using vendor outage monitoring helps teams stay informed about upstream issues before they impact your own services.

Use Anomaly Detection: Machine learning can identify unusual patterns before they become full-blown incidents. This predictive approach can dramatically reduce MTTD.
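Production anomaly detection often relies on machine learning models. As a much simpler statistical stand-in (not an ML model), a rolling z-score flags values that sit far outside the recent baseline. The window size, threshold, and latency series are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev

def rolling_zscore_anomalies(values, window=5, z_threshold=3.0):
    """Return indices whose z-score against the previous `window` values
    exceeds `z_threshold`. Points are skipped until the window is full."""
    history = deque(maxlen=window)
    anomalies = []
    for i, v in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # A flat baseline (sigma == 0) gives no meaningful z-score.
            if sigma > 0 and abs(v - mu) / sigma > z_threshold:
                anomalies.append(i)
        history.append(v)
    return anomalies

latency_ms = [100, 102, 98, 101, 99, 100, 103, 250, 101, 100]
print(rolling_zscore_anomalies(latency_ms))  # [7]
```

Note that the spike stays in the window for the next few samples and inflates the baseline; real detectors usually handle that, for example by excluding flagged points from the history.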

Strategies to Improve MTTR

Once you've detected an issue, swift resolution becomes paramount. Reducing MTTR shortens outages, limits their impact on users, and helps teams maintain higher service reliability:

Prioritize Incidents Effectively: Not all incidents are equal. Establish clear severity levels and ensure your team knows which issues to tackle first based on business impact.

Streamline Communication: Create dedicated incident response channels. Whether using Slack, Microsoft Teams, or specialized incident management platforms, ensure information flows quickly to the right people.

Maintain Updated Runbooks: Document common issues and their solutions. When incidents occur, your team shouldn't waste time figuring out basic troubleshooting steps.

Automate Where Possible: Implement auto-remediation for known issues. If a service can be safely restarted automatically, why wait for human intervention?
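Safe auto-remediation usually needs a bound so that a restart loop cannot hide a real problem. The sketch below assumes three caller-supplied hypothetical callables (`check_healthy`, `restart`, `escalate`). It does not target any specific platform: it restarts a limited number of times, then hands off to a human.

```python
import time

def auto_remediate(check_healthy, restart, escalate, max_restarts=2, wait_seconds=0):
    """Restart a service up to `max_restarts` times, then escalate.

    Returns "healthy" (no action needed), "recovered", or "escalated".
    """
    if check_healthy():
        return "healthy"
    for _ in range(max_restarts):
        restart()
        time.sleep(wait_seconds)  # give the service time to come back
        if check_healthy():
            return "recovered"
    escalate(f"still unhealthy after {max_restarts} restart(s)")
    return "escalated"

# Simulated service that recovers after one restart.
state = {"healthy": False, "restarts": 0}

def fake_restart():
    state["restarts"] += 1
    state["healthy"] = True

result = auto_remediate(lambda: state["healthy"], fake_restart, print)
print(result)  # recovered
```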

Measuring and Tracking Progress

To improve these metrics, you must measure them consistently:

Establish Baselines: Document your current MTTD and MTTR across different incident types

Set Realistic Goals: Aim for incremental improvements rather than dramatic changes

Track Trends: Monitor whether your metrics improve over time

Segment by Severity: High-severity incidents might have different targets than low-severity ones
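Segmenting is a group-by before averaging. With the hypothetical incident records below, the blended MTTR of 160 minutes describes none of the three severity levels. The `SEV1`/`SEV2`/`SEV3` labels are placeholders.

```python
from collections import defaultdict

# Hypothetical records: (severity, minutes from detection to resolution).
incidents = [
    ("SEV1", 25), ("SEV1", 35),
    ("SEV2", 90), ("SEV2", 150), ("SEV2", 60),
    ("SEV3", 600),
]

def mttr_by_severity(records):
    """Average resolution time separately for each severity level."""
    buckets = defaultdict(list)
    for severity, minutes in records:
        buckets[severity].append(minutes)
    return {sev: sum(m) / len(m) for sev, m in sorted(buckets.items())}

overall = sum(m for _, m in incidents) / len(incidents)
print(mttr_by_severity(incidents))  # {'SEV1': 30.0, 'SEV2': 100.0, 'SEV3': 600.0}
print(overall)                      # 160.0
```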

Regular reviews help identify patterns. Maybe your MTTD spikes during certain hours, indicating monitoring gaps. Or perhaps specific incident types consistently have high MTTR, suggesting a need for better documentation or training.

Common Pitfalls to Avoid

While pursuing better metrics, watch out for these mistakes:

Gaming the Numbers: Teams might close incidents prematurely or split them unnecessarily to improve metrics. This defeats the purpose and masks real problems.

Ignoring Root Causes: Fast detection and response are good, but preventing incidents is better. Balance reactive metrics with proactive measures.

One-Size-Fits-All Targets: A critical payment system outage demands faster response than a non-essential reporting delay. Tailor your targets to service importance.

Neglecting Human Factors: Burnout from constant alerts or pressure to meet unrealistic targets can actually worsen performance. Consider team well-being in your optimization efforts.

Building a Culture of Continuous Improvement

Improving MTTD and MTTR isn't just about tools and processes—it's about culture. Foster an environment where:

Teams feel empowered to suggest improvements

Failures become learning opportunities, not blame sessions

Cross-functional collaboration is the norm

Investment in reliability is valued alongside feature development

Regular reviews of incident response metrics help teams understand their performance and identify improvement areas. Make these reviews constructive, focusing on systemic improvements rather than individual performance.

The Technology Stack for Better Metrics

Modern incident management relies on integrated tooling:

Monitoring Platforms: Solutions like Datadog, New Relic, or Prometheus provide the raw data for detection.

Alerting Systems: PagerDuty, Opsgenie, or built-in platform alerting ensure the right people know about issues immediately.

Incident Management Tools: Platforms like Incident.io, FireHydrant, or Rootly help coordinate response efforts and track metrics.

Status Page Aggregators: Services like IsDown consolidate external service statuses, helping teams quickly identify when third-party dependencies cause issues.

Looking Forward: The Evolution of Incident Metrics

As systems grow more complex and user expectations rise, these metrics become increasingly critical. Future trends include:

AI-powered predictive detection that may push MTTD close to zero for some incident types

Automated response playbooks that can resolve common issues without human intervention

More sophisticated metrics that account for business impact, not just technical resolution

Integration of customer experience metrics with traditional reliability indicators

The organizations that master these metrics today position themselves for success in an increasingly digital future. Start by establishing baselines, then work systematically to improve both detection and response capabilities.

Frequently Asked Questions

What's the ideal MTTD and MTTR for most organizations?

There's no universal ideal for MTTD and MTTR, because the right targets depend on your industry, system criticality, and user expectations. Financial services might target MTTD under 1 minute and MTTR under 15 minutes for critical systems. E-commerce platforms might accept a 5-minute MTTD but require faster MTTR during peak shopping periods. Focus on continuous improvement from your baseline rather than arbitrary targets.

How do MTBF and MTTF relate to system reliability?

MTBF (Mean Time Between Failures) and MTTF (Mean Time to Failure) measure how long systems operate without failing. MTBF applies to repairable systems and measures the average time between failures, while MTTF applies to non-repairable components and measures time until the first failure. Higher values indicate better reliability, complementing MTTD and MTTR which focus on failure response.

Should we prioritize improving MTTD or MTTR first?

Start with MTTD improvements since you can't fix what you don't know about. Faster detection often naturally leads to faster resolution as teams aren't playing catch-up. However, if your MTTD is already strong (under 5 minutes) but MTTR is poor (over an hour), shift focus to response optimization through better runbooks, automation, and training.

How do you calculate MTTR when some incidents span multiple days?

When calculating MTTR for long-running incidents, use the same formula: total resolution time divided by number of incidents. Include the full duration even if it spans days. For better insights, segment your metrics by incident severity or type, as mixing minor issues resolved in minutes with major outages lasting days can obscure meaningful patterns.
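To include the full duration of multi-day incidents, subtract complete timestamps rather than clock times, so the days in between are counted. The two incidents below are hypothetical. In practice, timezone-aware UTC timestamps avoid daylight-saving surprises.

```python
from datetime import datetime

def resolution_minutes(detected_at, resolved_at):
    """Full duration, including any days the incident stayed open."""
    return (resolved_at - detected_at).total_seconds() / 60

incidents = [
    (datetime(2024, 3, 1, 10, 0), datetime(2024, 3, 1, 10, 30)),  # 30 minutes
    (datetime(2024, 3, 2, 23, 0), datetime(2024, 3, 4, 1, 0)),    # spans two midnights: 26 hours
]
durations = [resolution_minutes(d, r) for d, r in incidents]
mttr = sum(durations) / len(durations)
print(durations, mttr)  # [30.0, 1560.0] 795.0
```

The 795-minute mean is dominated by the single long outage, which is why segmenting by severity or type gives a clearer picture.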

What's the difference between MTTA and MTTR?

MTTA (Mean Time to Acknowledge) measures the time between when an alert fires and when someone acknowledges they're investigating. MTTR typically measures from detection to resolution. Acknowledgment time is one component of MTTR, so comparing the two helps identify whether delays come from notification issues or from the resolution work itself. High MTTA often indicates alert routing problems or team availability issues.
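One way to see where time goes is to compute the acknowledgment share of each incident's timeline. This sketch assumes that the alert firing time stands in for detection. The incident data is hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical incidents: when the alert fired, was acknowledged, and was resolved.
t0 = datetime(2024, 5, 1, 8, 0)
incidents = [
    {"alerted": t0, "acked": t0 + timedelta(minutes=20), "resolved": t0 + timedelta(minutes=40)},
    {"alerted": t0, "acked": t0 + timedelta(minutes=10), "resolved": t0 + timedelta(minutes=30)},
]

def minutes(delta):
    return delta.total_seconds() / 60

ack = [minutes(i["acked"] - i["alerted"]) for i in incidents]
total = [minutes(i["resolved"] - i["alerted"]) for i in incidents]
mtta = sum(ack) / len(ack)        # 15.0
mttr = sum(total) / len(total)    # 35.0
print(f"MTTA {mtta} min of MTTR {mttr} min ({mtta / mttr:.0%} waiting for acknowledgment)")
```

Here roughly 43% of the resolution time is spent before anyone picks up the alert, which would point at routing or on-call coverage rather than at troubleshooting.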

How can we reduce alert fatigue while maintaining low MTTD?

Balance low MTTD with alert quality by implementing intelligent thresholds, grouping related alerts, and using escalation policies. Set different notification methods for different severities: critical issues might page immediately while warnings go to email. Regular alert audits help eliminate noisy alerts that don't require action, maintaining team responsiveness for real incidents.
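Grouping and severity-based routing can be sketched in a few lines. The `Alert` fields, the `ROUTES` mapping, and the 5-minute grouping window are all hypothetical choices, not a specific tool's behavior. Repeats of the same alert key within the window are folded into the notification already sent.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    key: str          # what is broken, e.g. "db:latency" (hypothetical naming)
    severity: str     # "critical" or "warning" in this sketch
    timestamp: float  # seconds

# Hypothetical mapping from severity to notification method.
ROUTES = {"critical": "page", "warning": "email"}

def route_alerts(alerts, group_window=300):
    """Suppress repeats of a key within `group_window` seconds of its last
    notification, then route each remaining alert by severity."""
    last_sent = {}
    notifications = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        prev = last_sent.get(alert.key)
        if prev is not None and alert.timestamp - prev < group_window:
            continue  # grouped into the notification already sent
        last_sent[alert.key] = alert.timestamp
        notifications.append((ROUTES.get(alert.severity, "email"), alert.key))
    return notifications

alerts = [
    Alert("db:latency", "critical", 0),
    Alert("db:latency", "critical", 60),   # repeat within 5 minutes: grouped
    Alert("disk:usage", "warning", 120),
    Alert("db:latency", "critical", 400),  # outside the window: notifies again
]
print(route_alerts(alerts))
```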

Nuno Tomas

Founder of IsDown
