SRE Observability: Building Visibility Into System Health

SRE observability transforms how teams understand and manage complex systems by providing deep visibility into the internal state of applications and infrastructure. Unlike traditional monitoring, which tracks only predefined metrics, observability lets SRE teams ask new questions about system behavior and quickly identify the root causes of issues.

Understanding the Three Pillars of Observability

The foundation of any observability platform rests on three core data types that work together to provide comprehensive system insights:

Metrics: The Pulse of Your System

Metrics are numerical measurements collected over time that help track system health and performance. These include CPU usage, memory consumption, request rates, and error percentages. SRE teams use metrics to establish baselines, detect anomalies, and measure against service level objectives.
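Request and error counts are usually exported as ever-increasing counters, and the rates you compare against baselines or SLOs are derived from them. The sketch below shows that derivation under simplifying assumptions: samples are (timestamp, value) pairs, and any drop in value is treated as a counter reset such as a process restart. The name `counter_rate` is illustrative; the idea resembles rate functions in tools like Prometheus, but this version omits their extrapolation logic.

```python
from typing import List, Tuple


def counter_rate(samples: List[Tuple[float, float]]) -> float:
    """Per-second increase of a monotonically increasing counter.

    `samples` are (unix_timestamp_seconds, counter_value) pairs in time order.
    A drop in value is treated as a counter reset (for example, a restart), so
    the post-reset value is counted as fresh increase from zero.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    increase = 0.0
    for (_, prev), (_, curr) in zip(samples, samples[1:]):
        # Normal case: add the delta. After a reset: add the new value itself.
        increase += curr - prev if curr >= prev else curr
    elapsed = samples[-1][0] - samples[0][0]
    if elapsed <= 0:
        raise ValueError("samples must span a positive time interval")
    return increase / elapsed
```

For example, samples `[(0, 100), (15, 130), (30, 10), (45, 40)]` contain one reset and give an increase of 70 over 45 seconds, roughly 1.56 per second.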

Logs: The Story Behind the Numbers

Logs capture discrete events and provide context about what happened in your system at specific points in time. They contain detailed information about errors, user actions, and system state changes that metrics alone cannot reveal.
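Logs are far easier to search and correlate when each event is emitted as structured data rather than free text. Here is a minimal sketch using only Python's standard `logging` module; the JSON field names (`ts`, `level`, `logger`, `message`) and the convention of passing extra context under a `context` key are assumptions for illustration, not a standard.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        event = {
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Context passed as extra={"context": {...}} is merged into the event.
        event.update(getattr(record, "context", {}))
        return json.dumps(event)


def build_logger(name: str, stream=sys.stdout) -> logging.Logger:
    """Create a logger that writes JSON lines to `stream`."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = build_logger("checkout")
    log.warning("payment declined",
                extra={"context": {"order_id": "A123", "provider": "example-pay"}})
```

Because every event carries the same keys, a log backend can filter on `order_id` or `level` directly instead of parsing message text.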

Traces: Following the Journey

Traces track requests as they flow through distributed systems, showing how different services interact and where bottlenecks occur. This visibility becomes crucial when troubleshooting performance issues across microservices architectures.
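To make the bottleneck idea concrete, the sketch below models a trace as a list of spans linked by parent IDs, then computes how much time each service spent on its own work rather than waiting on its children. The `Span` fields are a hypothetical simplification loosely modeled on common tracing data (span and parent IDs, as in OpenTelemetry), not any library's API, and the calculation assumes a span's child calls do not overlap.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Span:
    span_id: str
    parent_id: Optional[str]   # None for the root span
    service: str
    start_ms: float
    end_ms: float


def self_time_by_service(spans: List[Span]) -> Dict[str, float]:
    """Time each service spent in its own spans, excluding time spent in child
    spans. Assumes a span's children run sequentially (no overlap)."""
    child_time: Dict[str, float] = {}
    for s in spans:
        if s.parent_id is not None:
            child_time[s.parent_id] = child_time.get(s.parent_id, 0.0) + (s.end_ms - s.start_ms)
    totals: Dict[str, float] = {}
    for s in spans:
        own = (s.end_ms - s.start_ms) - child_time.get(s.span_id, 0.0)
        totals[s.service] = totals.get(s.service, 0.0) + own
    return totals
```

For a trace where `gateway` (250 ms) calls `auth` (20 ms) and `orders` (200 ms), and `orders` calls `db` (180 ms), the result attributes 180 ms to `db`, 30 ms to `gateway`, and 20 ms each to `auth` and `orders`, pointing at the database call.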

How Observability Differs from Traditional Monitoring

While monitoring tools alert you when something goes wrong, observability solutions help you understand why. Traditional monitoring systems track known issues and predefined thresholds. Observability platforms enable exploration of unknown problems by allowing teams to correlate data across multiple dimensions.

This distinction becomes critical as systems grow more complex. When an outage occurs, monitoring might tell you that response times increased, but observability reveals which specific service calls caused the slowdown and what internal state changes triggered the issue.
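Asking a new question often amounts to slicing the same request data by a different attribute. This hypothetical helper groups request events (plain dicts with a `latency_ms` field and arbitrary attributes such as `region` or `downstream`) by any one attribute and ranks the groups by mean latency; the field names are assumptions.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def breakdown(requests: List[dict], dimension: str,
              metric: str = "latency_ms") -> List[Tuple[str, int, float]]:
    """Group request events by one attribute and rank groups by mean `metric`.

    Returns (group_value, request_count, mean_metric) tuples, slowest first.
    Events missing the attribute are grouped under "unknown".
    """
    groups: Dict[str, List[float]] = defaultdict(list)
    for r in requests:
        groups[str(r.get(dimension, "unknown"))].append(r[metric])
    rows = [(key, len(vals), mean(vals)) for key, vals in groups.items()]
    return sorted(rows, key=lambda row: row[2], reverse=True)
```

Grouping by `region` might show only a modest difference, while grouping the same events by `downstream` can isolate a single slow dependency. Averages can hide tail latency, so a percentile is often the better aggregate in practice.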

Building an Effective Observability Strategy

Start with Clear Objectives

Define what insights your team needs most. Focus on understanding user experience, system performance, and reliability goals. This clarity helps you choose the right observability tools and avoid collecting unnecessary data.

Instrument Thoughtfully

Add instrumentation that captures meaningful events and state changes. Avoid the temptation to log everything – excessive data creates noise and increases costs. Instead, focus on high-value touchpoints that reveal system behavior.
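One low-noise way to instrument a high-value touchpoint is to wrap it so every call records a count, an error count, and its latency. The decorator below is a sketch; `METRICS` is a hypothetical in-process stand-in for whatever metrics client your stack actually uses, and `charge_card` is an invented example operation.

```python
import functools
import time
from collections import defaultdict

# Hypothetical stand-in for a metrics client: a real service would export
# these values to its metrics backend instead of keeping them in a dict.
METRICS = defaultdict(lambda: {"calls": 0, "errors": 0, "total_seconds": 0.0})


def instrumented(name: str):
    """Record call count, error count and cumulative latency for one operation."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception:
                METRICS[name]["errors"] += 1
                raise  # instrumentation must not swallow failures
            finally:
                METRICS[name]["calls"] += 1
                METRICS[name]["total_seconds"] += time.perf_counter() - start
        return wrapper
    return decorator


@instrumented("charge_card")
def charge_card(amount_cents: int) -> str:
    if amount_cents <= 0:
        raise ValueError("amount must be positive")
    return "ok"
```

Because the counters are updated in `finally`, failed calls still contribute latency, and the exception is re-raised so the wrapper never changes the function's behavior.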

Standardize Data Collection

Create consistent formats for logs, metrics, and traces across all services. This standardization enables better correlation and makes it easier to aggregate data from different sources into unified dashboards.
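When teams already emit telemetry with different field names, a thin normalization step can map them onto one shared schema before aggregation. The alias table and required fields below are hypothetical; the point is that once every source uses `service`, `level`, and `trace_id`, events from different services can be joined on the same keys.

```python
from typing import Dict

# Hypothetical per-team field names mapped onto one shared schema.
FIELD_ALIASES: Dict[str, str] = {
    "svc": "service", "app": "service",
    "traceId": "trace_id", "x_trace": "trace_id",
    "lvl": "level", "severity": "level",
    "msg": "message",
}
REQUIRED_FIELDS = ("service", "level", "message")


def normalize(event: Dict[str, object]) -> Dict[str, object]:
    """Rename known aliases to the shared schema and check required fields."""
    out = {FIELD_ALIASES.get(k, k): v for k, v in event.items()}
    if isinstance(out.get("level"), str):
        out["level"] = out["level"].upper()  # "warn" and "WARN" become one value
    missing = [f for f in REQUIRED_FIELDS if f not in out]
    if missing:
        raise ValueError(f"event missing required fields: {missing}")
    return out
```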

Automate Where Possible

Use automation to reduce manual work in data collection, processing, and analysis. Automated anomaly detection can surface issues before they impact users, while automated correlation helps connect related events across different data streams.
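As a very simple illustration of automated anomaly detection, the sketch below flags a point when it sits more than `threshold` standard deviations from the mean of the preceding `window` points. This rolling z-score is a deliberately basic stand-in, not the method any particular platform uses, and the default window and threshold are arbitrary assumptions.

```python
from statistics import mean, pstdev
from typing import List


def zscore_anomalies(values: List[float], window: int = 10,
                     threshold: float = 3.0) -> List[int]:
    """Return indices whose value lies more than `threshold` standard
    deviations from the mean of the preceding `window` values."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), pstdev(history)
        if sigma == 0:
            # Perfectly flat baseline: treat any change as anomalous.
            if values[i] != mu:
                flagged.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged
```

One known weakness: after a spike, the spike itself inflates the next few baselines, which is one reason production detectors tend to use more robust statistics or seasonality-aware models.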

Choosing the Right Observability Tools

Selecting observability solutions requires evaluating several factors:

Scalability: Can the platform handle your data volume as you grow? Look for tools that scale horizontally and offer flexible data retention policies.

Integration Capabilities: Does it work with your existing stack? The best platforms integrate seamlessly with your current monitoring tools and development workflows.

Query Flexibility: Can you ask arbitrary questions about your data? Powerful query languages enable deep investigation without predefined reports.

Cost Structure: How does pricing scale with usage? Consider both data ingestion and storage costs to avoid surprises as your observability practice matures.

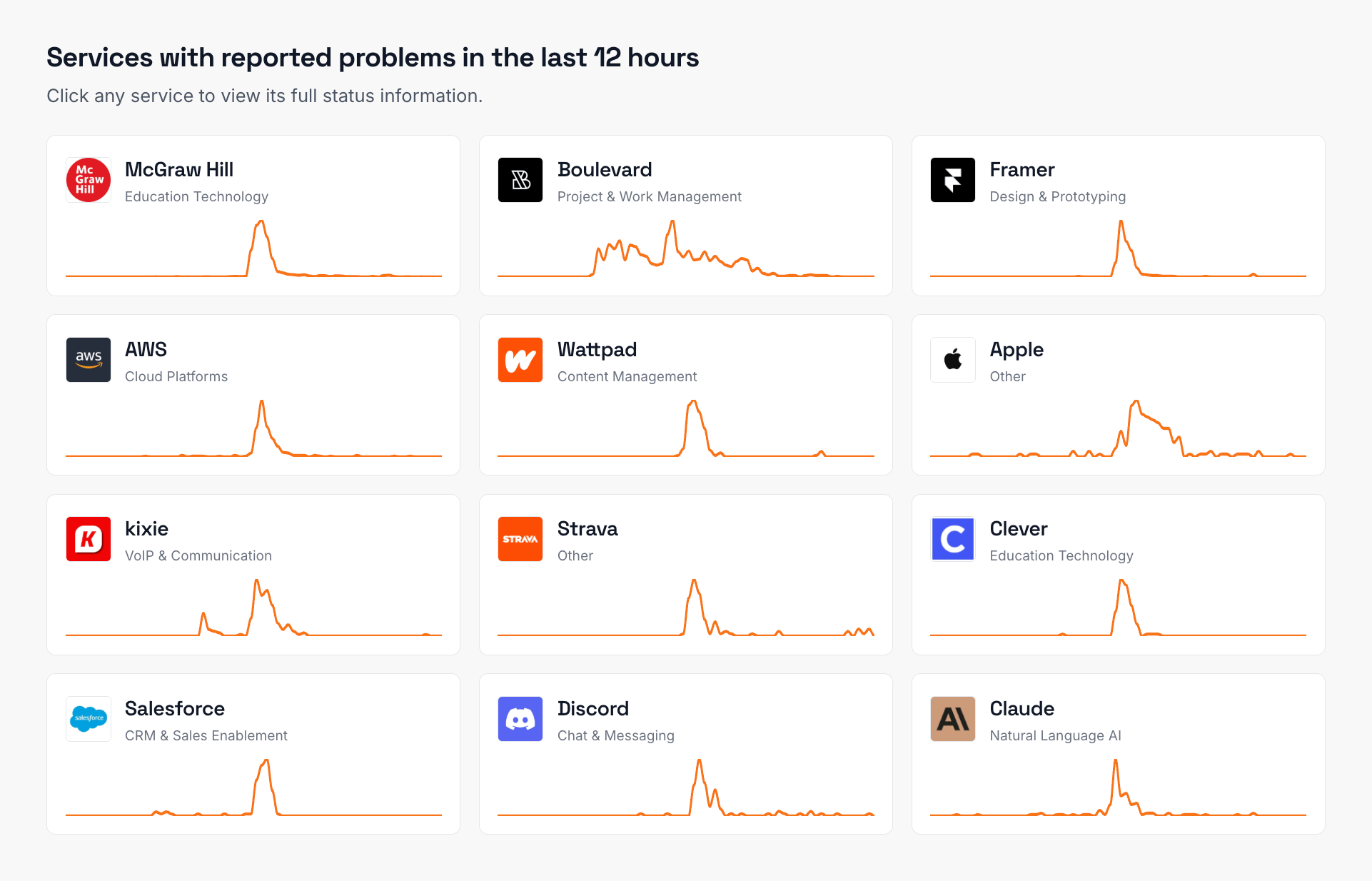

Implementing Observability for External Dependencies

Modern applications rely heavily on third-party services, making external dependency monitoring crucial for comprehensive observability. SRE teams need visibility not just into their own systems but also into the services they depend on.

Understanding the role of external service monitoring in SRE practice is therefore essential: external services can affect your uptime and user experience as much as internal issues, yet they sit outside your direct control.
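A synthetic probe is one way to get your own signal about a third-party service instead of relying only on its status page. This sketch uses only the Python standard library; the URL to probe, the timeout, and treating any status below 400 as healthy are assumptions you would adjust per dependency, and `probe` and `availability` are illustrative names.

```python
import http.client
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import List


@dataclass
class ProbeResult:
    ok: bool
    latency_s: float
    detail: str


def probe(url: str, timeout_s: float = 5.0) -> ProbeResult:
    """Send one GET request to a dependency and record outcome and latency."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            status = resp.status
        return ProbeResult(status < 400, time.monotonic() - start, f"HTTP {status}")
    except urllib.error.HTTPError as exc:  # 4xx/5xx responses raise HTTPError
        return ProbeResult(False, time.monotonic() - start, f"HTTP {exc.code}")
    except (urllib.error.URLError, http.client.HTTPException, OSError) as exc:
        # DNS failures, refused connections, timeouts, malformed responses
        return ProbeResult(False, time.monotonic() - start, str(exc))


def availability(results: List[ProbeResult]) -> float:
    """Fraction of successful probes in a window (1.0 when there are none)."""
    return sum(r.ok for r in results) / len(results) if results else 1.0
```

Running `probe` on a schedule and feeding the results into `availability` over a rolling window gives a dependency-level signal you can chart next to your own indicators.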

Best Practices for SRE Observability

Establish Service Level Objectives First

Before implementing observability tools, define clear SLOs that reflect user expectations. These objectives guide what data to collect and which dashboards to build.
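An SLO becomes actionable once it is translated into an error budget: the amount of unreliability it tolerates over a window. A minimal sketch of the arithmetic, assuming a 30-day window by default and a request-based budget for the second function (both function names are illustrative):

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Minutes of total unavailability a time-based SLO tolerates per window."""
    if not 0 < slo < 1:
        raise ValueError("slo must be a fraction such as 0.999")
    return (1 - slo) * window_days * 24 * 60


def budget_remaining(slo: float, good_events: int, total_events: int) -> float:
    """Fraction of a request-based error budget still unspent.

    1.0 means untouched, 0.0 means exhausted, negative means overspent.
    """
    if not 0 < slo < 1:
        raise ValueError("slo must be a fraction such as 0.999")
    if total_events == 0:
        return 1.0
    allowed_bad = (1 - slo) * total_events
    actual_bad = total_events - good_events
    return 1 - actual_bad / allowed_bad


if __name__ == "__main__":
    print(round(error_budget_minutes(0.999), 2))                   # 43.2
    print(round(budget_remaining(0.999, 999_400, 1_000_000), 2))   # 0.4
```

A 99.9% SLO over 30 days allows about 43.2 minutes of full downtime (0.001 * 43,200 minutes); 99.99% allows about 4.32 minutes.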

Create Actionable Dashboards

Metrics provide value only when teams can easily access and understand them. Pairing dashboards with [public and private status pages](https://isdown.app/blog/public-and-private-status-pages) gives both users and stakeholders a clear view of ongoing incidents and system health. Effective dashboards:

Display metrics in context with targets and trends

Highlight anomalies requiring attention

Enable drill-down for detailed analysis

Support different stakeholder perspectives

Practice Proactive Analysis

Don't wait for incidents to use your observability platform. Regular exploration of system behavior helps teams understand normal patterns and spot emerging issues early.

Foster a Culture of Curiosity

Encourage team members to explore data and ask questions. The most valuable insights often come from unexpected correlations discovered during routine investigation.

Common Observability Challenges and Solutions

Data Overload

Teams often struggle with too much data and not enough insight. Combat this by implementing sampling strategies, using aggregation effectively, and focusing collection on business-critical paths.
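A common sampling strategy for traces is to make the keep-or-drop decision deterministically from the trace ID, so every service reaches the same decision and sampled traces stay complete. This is a sketch of that head-based approach; the SHA-256 bucketing is an assumption chosen for simplicity and is not the exact algorithm used by any particular tracing SDK.

```python
import hashlib


def keep_trace(trace_id: str, sample_rate: float) -> bool:
    """Consistently keep roughly `sample_rate` of traces, keyed on the trace ID.

    Every service that sees the same trace ID reaches the same decision, so
    sampled traces stay complete across service boundaries.
    """
    if sample_rate >= 1.0:
        return True
    if sample_rate <= 0.0:
        return False
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a roughly uniform value in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < sample_rate
```

Head sampling can drop rare error traces; tail-based sampling, which decides after a trace completes, is the usual complement when errors and slow requests must always be kept.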

Tool Sprawl

Multiple disconnected observability tools create silos and complicate troubleshooting. Consider platforms that unify metrics, logs, and traces in a single interface, or invest in correlation tools that connect disparate systems.

Alert Fatigue

Poorly configured observability can generate excessive alerts. Implement intelligent alerting based on compound conditions and business impact rather than simple threshold breaches.
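A widely used form of compound alerting is the multi-window burn-rate alert described in Google's SRE Workbook: page only when the error budget is burning fast over both a long and a short window. In this sketch the error ratios are inputs you would compute from your metrics, and the 99.9% SLO and 14.4 threshold (which corresponds to spending 2% of a 30-day budget in one hour) are starting-point assumptions rather than universal values.

```python
def burn_rate(error_ratio: float, slo: float) -> float:
    """Budget consumption speed: 1.0 spends exactly the whole budget over the
    SLO window; 14.4 spends a 30-day budget in about 50 hours."""
    return error_ratio / (1 - slo)


def should_page(long_window_error_ratio: float, short_window_error_ratio: float,
                slo: float = 0.999, threshold: float = 14.4) -> bool:
    """Compound condition: both a long window (e.g. 1 hour) and a short window
    (e.g. 5 minutes) must burn fast. The long window ignores brief blips; the
    short window lets the alert clear soon after the problem stops."""
    return (burn_rate(long_window_error_ratio, slo) >= threshold and
            burn_rate(short_window_error_ratio, slo) >= threshold)
```

Compared with a fixed "error rate above X" threshold, this ties paging directly to how quickly the SLO is being put at risk.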

Measuring Observability Success

Track these indicators to assess your observability implementation:

Mean Time to Detection (MTTD): How quickly do you identify issues?

Mean Time to Resolution (MTTR): How fast can you fix problems once detected?

Proactive Issue Discovery: What percentage of issues do you find before users report them?

Question Resolution Time: How long does it take to answer new questions about system behavior?

Many teams track these alongside broader incident response metrics, prioritizing those that most directly affect customer experience and system reliability.
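The first three indicators reduce to simple arithmetic over incident records. This sketch assumes a hypothetical `Incident` record with start, detection, and resolution timestamps plus a flag for whether monitoring (rather than a user) caught the issue first. It measures MTTR from detection, matching the definition above, although some teams measure from the start of the incident instead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import List


@dataclass
class Incident:
    started: datetime           # when the problem began (often known only afterwards)
    detected: datetime          # first alert or user report
    resolved: datetime
    found_by_monitoring: bool   # False if a user reported it first


def summarize(incidents: List[Incident]) -> dict:
    """MTTD, MTTR (from detection) and proactive discovery rate."""
    if not incidents:
        raise ValueError("no incidents to summarize")
    mttd = mean((i.detected - i.started).total_seconds() for i in incidents)
    mttr = mean((i.resolved - i.detected).total_seconds() for i in incidents)
    proactive = sum(i.found_by_monitoring for i in incidents) / len(incidents)
    return {"mttd_minutes": mttd / 60, "mttr_minutes": mttr / 60,
            "proactive_pct": 100 * proactive}
```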

The Future of SRE Observability

Observability continues evolving with advances in machine learning and automation. Emerging trends include:

AI-Powered Anomaly Detection: Systems that learn normal behavior patterns and automatically flag deviations

Predictive Analytics: Tools that forecast potential issues based on historical patterns

Automated Root Cause Analysis: Platforms that correlate events across multiple data sources to identify probable causes

Edge Observability: Extending visibility to edge computing environments and IoT devices

These advancements promise to make observability more proactive and reduce the manual effort required to maintain system health.

Conclusion

SRE observability provides the foundation for understanding and optimizing complex systems. By implementing comprehensive observability practices, teams gain the insights needed to maintain reliability, improve performance, and deliver better user experiences. Start with the basics – metrics, logs, and traces – then expand your practice based on specific needs and challenges. Remember that observability is not just about tools but about building a culture of curiosity and continuous improvement.

Frequently Asked Questions

What is SRE observability and why is it important?

SRE observability is the practice of instrumenting systems to understand their internal state through external outputs like metrics, logs, and traces. It's crucial because it enables teams to diagnose issues quickly, optimize performance, and maintain reliability in complex distributed systems. Unlike traditional monitoring, observability allows teams to ask new questions and investigate unknown problems.

How do observability tools differ from monitoring tools?

Monitoring tools track predefined metrics and alert on known issues, while observability tools provide comprehensive visibility into system behavior through correlated data. Observability platforms enable exploration and investigation of unexpected problems, whereas monitoring systems primarily detect when specific thresholds are crossed. This flexibility makes observability essential for troubleshooting complex issues in modern architectures.

What are the essential components of an observability platform?

An effective observability platform includes metric collection for performance data, log aggregation for event details, and distributed tracing for request flow visibility. It should also provide powerful query capabilities, data correlation features, and customizable dashboards. Integration with existing tools and automation capabilities for alerting and analysis are equally important.

How can SRE teams implement observability without overwhelming their systems?

Start by identifying critical user journeys and instrumenting those paths first. Use sampling techniques to reduce data volume while maintaining statistical accuracy. Implement data retention policies that balance cost with investigative needs. Focus on high-value metrics and logs that directly relate to service level objectives rather than collecting everything possible.

What metrics should SRE teams prioritize for observability?

Prioritize the "golden signals": latency, traffic, errors, and saturation. These provide immediate insight into system health and user experience. Additionally, track business-specific metrics that align with your service level objectives. Custom metrics that reflect unique aspects of your architecture or user behavior often provide the most valuable insights for optimization and troubleshooting.
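The four golden signals can be computed from a window of request records. In this sketch, the `Request` fields, counting only 5xx statuses as errors, and using in-flight requests over a fixed capacity as the saturation measure are all simplifying assumptions; real services often measure saturation through queue depth, CPU, or connection-pool usage.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    latency_ms: float
    status: int


def golden_signals(requests: List[Request], window_s: float,
                   in_flight: int, capacity: int) -> dict:
    """Latency, traffic, errors and saturation for one time window."""
    if not requests:
        raise ValueError("no requests in window")
    latencies = sorted(r.latency_ms for r in requests)
    # Nearest-rank p99: rank = ceil(0.99 * n), computed with integer arithmetic.
    rank = (99 * len(latencies) + 99) // 100
    return {
        "latency_p99_ms": latencies[rank - 1],
        "traffic_rps": len(requests) / window_s,
        "error_ratio": sum(r.status >= 500 for r in requests) / len(requests),
        "saturation": in_flight / capacity,
    }
```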

How does observability help with root cause analysis during incidents?

Observability accelerates root cause analysis by providing correlated data across multiple system layers. During incidents, teams can trace problematic requests, examine related logs, and analyze metric anomalies in a unified view. This comprehensive visibility reduces the time spent gathering information and enables faster hypothesis testing, ultimately leading to quicker resolution of issues.

Nuno Tomas

Founder of IsDown
