Incident Alerting: Build a System That Actually Works

When critical systems fail, every second counts. The difference between a minor hiccup and a major outage often comes down to how quickly your team receives and responds to incident alerts. Yet many organizations struggle with incident alerting systems that either overwhelm teams with noise or fail to notify the right people at the right time.

Effective incident alert management isn't just about having monitoring tools in place. It's about building a comprehensive system that detects issues early, routes alerts intelligently, and enables rapid response without burning out your team.

The Foundation of Effective Incident Alerting

Before diving into tools and tactics, it's crucial to understand what makes incident alerting work. At its core, a good alerting system serves three purposes:

Early detection: Catching problems before they impact users

Smart routing: Getting alerts to the right people with the right context

Actionable information: Providing enough detail to enable immediate response

Many teams make the mistake of setting up alerts for everything, then wondering why their on-call engineers are drowning in notifications. The key is building a system that balances comprehensive coverage with intelligent filtering.

Building Your Alert Architecture

A well-designed alert architecture starts with clear definitions. Not all alerts are created equal, and treating them as if they were leads to alert fatigue and missed critical issues.

Alert Severity Levels

Establish clear severity levels that everyone understands:

Critical: Service is down or severely degraded, immediate action required

High: Major functionality impaired, response needed within minutes

Medium: Performance issues or partial outages, response within hours

Low: Minor issues or warnings, can be addressed during business hours

Each severity level should have defined response times, escalation paths, and notification methods. Critical alerts might trigger phone calls and SMS messages, while low-priority alerts could simply create tickets for review.
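One way to keep these definitions unambiguous is to encode them as data the alerting pipeline reads, instead of leaving them as tribal knowledge. The Python sketch below is illustrative only: the channel names are placeholders, and the acknowledgment targets borrow the 5/15/60-minute figures from the FAQ at the end of this article. Treat every value as an assumption to tune against your own SLAs.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Severity(Enum):
    CRITICAL = "critical"
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


@dataclass(frozen=True)
class SeverityPolicy:
    ack_within_minutes: Optional[int]  # None means no paging target; work it as a ticket
    channels: Tuple[str, ...]          # placeholder notification channels
    escalates: bool                    # whether an unacknowledged alert moves up the chain


# Illustrative targets only; align them with your own SLAs.
POLICIES = {
    Severity.CRITICAL: SeverityPolicy(5, ("phone", "sms", "chat"), True),
    Severity.HIGH: SeverityPolicy(15, ("sms", "chat"), True),
    Severity.MEDIUM: SeverityPolicy(60, ("chat",), False),
    Severity.LOW: SeverityPolicy(None, ("ticket",), False),
}
```

Keeping the policy in one table means a change to response expectations is a single reviewed edit rather than a hunt through individual alert rules.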

Alert Routing Rules

Smart routing ensures alerts reach the right people without overwhelming everyone. Consider these routing strategies:

Team-based routing: Different teams handle different services or components. Database alerts go to the database team, API alerts to the backend team.

Time-based routing: Route alerts based on business hours and on-call schedules. After-hours alerts follow the on-call escalation path, while business-hours alerts might go to a broader group.

Skill-based routing: Complex issues require specific expertise. Route Kubernetes alerts to engineers with container experience, security alerts to the security team.

Geographic routing: For global teams, route alerts to the team currently working rather than waking up engineers halfway around the world.
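The routing strategies above can be combined into one small decision function. This is a minimal sketch under assumed data: the team names, tags, and follow-the-sun shift hours are hypothetical, and a real system would pull ownership and schedules from its on-call tool. Skill-based overrides win over component ownership, and the region is chosen from the current UTC hour.

```python
from datetime import datetime, timezone
from typing import List

# Hypothetical ownership map: component -> owning team (team-based routing)
TEAM_BY_COMPONENT = {"database": "db-team", "api": "backend-team", "kubernetes": "platform-team"}
# Hypothetical specialist overrides: alert tag -> team (skill-based routing)
SPECIALIST_BY_TAG = {"security": "security-team", "containers": "platform-team"}
# Hypothetical follow-the-sun shifts: region -> UTC hours [start, end)
SHIFTS_UTC = {"emea": (7, 15), "amer": (15, 23), "apac": (23, 7)}


def region_on_duty(now: datetime) -> str:
    """Geographic/time-based routing: which region's rotation is awake right now."""
    hour = now.astimezone(timezone.utc).hour
    for region, (start, end) in SHIFTS_UTC.items():
        if start < end and start <= hour < end:
            return region
        if start > end and (hour >= start or hour < end):  # shift wraps past midnight
            return region
    raise ValueError(f"no shift covers {hour:02d}:00 UTC")


def route(component: str, tags: List[str], now: datetime) -> str:
    """Return a 'team/region' rotation name for an alert."""
    specialists = [SPECIALIST_BY_TAG[t] for t in tags if t in SPECIALIST_BY_TAG]
    team = specialists[0] if specialists else TEAM_BY_COMPONENT.get(component, "default-oncall")
    return f"{team}/{region_on_duty(now)}"
```

For example, a database alert at 10:00 UTC routes to `db-team/emea`, while a security-tagged API alert at 23:30 UTC goes to `security-team/apac`.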

Reducing Alert Noise

Alert fatigue is real and dangerous. When engineers receive dozens of alerts daily, they start ignoring notifications, and critical issues slip through. Here's how to reduce noise:

Intelligent Deduplication

Multiple monitoring tools often detect the same issue, creating duplicate alerts. Implement deduplication rules that:

Group related alerts into single incidents

Suppress duplicate notifications within time windows

Correlate alerts from different sources
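A simple form of deduplication keys each alert on what broke rather than on which tool noticed it, so the same failure reported by two monitors lands in one incident. The sketch below assumes each alert is a dict with `service` and `check` fields and uses a 5-minute sliding window; both the field names and the window are assumptions, not a standard.

```python
import time
from typing import Dict, Optional, Tuple


class Deduplicator:
    """Groups alerts by fingerprint and suppresses repeats within a time window."""

    def __init__(self, window_seconds: float = 300.0) -> None:
        self.window = window_seconds
        # fingerprint -> (incident id, time the last matching alert was seen)
        self._open: Dict[str, Tuple[int, float]] = {}
        self._next_id = 1

    @staticmethod
    def fingerprint(alert: dict) -> str:
        # Correlate across sources: ignore which tool fired, key on what broke.
        return f"{alert['service']}:{alert['check']}"

    def ingest(self, alert: dict, now: Optional[float] = None) -> Tuple[int, bool]:
        """Return (incident_id, should_notify) for an incoming alert."""
        now = time.time() if now is None else now
        fp = self.fingerprint(alert)
        existing = self._open.get(fp)
        if existing and now - existing[1] <= self.window:
            incident_id = existing[0]
            self._open[fp] = (incident_id, now)  # sliding window: refresh last-seen
            return incident_id, False  # duplicate: attach to incident, stay quiet
        incident_id = self._next_id
        self._next_id += 1
        self._open[fp] = (incident_id, now)
        return incident_id, True
```

Because the window slides, a steady stream of duplicates stays attached to one incident; a gap longer than the window opens a new one.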

Dynamic Thresholds

Static thresholds generate false positives during normal traffic variations. Instead, use:

Baseline learning to understand normal patterns

Percentage-based thresholds rather than absolute values

Time-of-day adjustments for predictable variations
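All three ideas fit in a small baseline model: keep history per hour of day, compare new values with that hour's mean and spread, and use a percentage floor so a very stable metric does not alert on tiny wobbles. The 3-sigma multiplier, 10% floor, and 5-sample minimum below are assumptions for illustration; production systems often use richer seasonal models.

```python
import statistics
from collections import defaultdict
from typing import Dict, List


class HourlyBaseline:
    """Learns a per-hour-of-day baseline and flags values far outside it."""

    def __init__(self, sigmas: float = 3.0, pct_floor: float = 0.10, min_samples: int = 5) -> None:
        self.sigmas = sigmas
        self.pct_floor = pct_floor
        self.min_samples = min_samples
        self.samples: Dict[int, List[float]] = defaultdict(list)  # hour 0-23 -> history

    def observe(self, hour: int, value: float) -> None:
        self.samples[hour].append(value)

    def is_anomalous(self, hour: int, value: float) -> bool:
        history = self.samples[hour]
        if len(history) < self.min_samples:
            return False  # not enough data yet; don't page on a cold baseline
        mean = statistics.fmean(history)
        stdev = statistics.pstdev(history)
        # Tolerance is the larger of N standard deviations and a percentage of the mean.
        tolerance = max(self.sigmas * stdev, self.pct_floor * abs(mean))
        return abs(value - mean) > tolerance
```

With a 9:00 history of 100, 110, 90, 105, and 95 requests per second, a reading of 115 stays within tolerance while 200 is flagged.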

Alert Dependencies

When a core service fails, dependent services generate cascading alerts. Map service dependencies and suppress downstream alerts when upstream services fail. If your authentication service is down, you don't need separate alerts for every service that can't authenticate users.
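Dependency-aware suppression can be sketched as a graph walk: a failing service only alerts if none of its transitive upstream dependencies are also failing. The dependency map here is hypothetical and hard-coded; in practice it might come from a service catalog or tracing data.

```python
from typing import Dict, List, Set

# Hypothetical dependency map: service -> services it depends on
DEPENDS_ON: Dict[str, List[str]] = {
    "checkout": ["auth", "payments"],
    "profile": ["auth"],
    "payments": ["database"],
    "auth": ["database"],
}


def upstream_of(service: str) -> Set[str]:
    """All transitive dependencies of a service."""
    seen: Set[str] = set()
    stack = list(DEPENDS_ON.get(service, []))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(DEPENDS_ON.get(dep, []))
    return seen


def alerts_to_send(failing: Set[str]) -> Set[str]:
    """Keep only failing services whose upstream dependencies are all healthy."""
    return {svc for svc in failing if not (upstream_of(svc) & failing)}
```

With `auth`, `checkout`, and `profile` all failing, only `auth` alerts, which matches the authentication example above.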

Automation and Integration

Modern incident alert management relies heavily on automation to speed response times and reduce manual work.

Automated Escalation

Set up escalation chains that automatically notify backup responders if primary contacts don't acknowledge alerts within defined timeframes. This ensures critical issues never go unaddressed because someone's phone was on silent.
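An escalation chain reduces to a list of responders, each with an acknowledgment timeout. The sketch below computes who should have been paged after an alert has gone unacknowledged for a given number of minutes; the responder names and timeouts are hypothetical. Earlier responders stay on the page as the chain advances, so nobody is silently dropped.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EscalationStep:
    responder: str
    ack_timeout_minutes: int  # how long this step gets before the next one is paged


def responders_paged(chain: List[EscalationStep], minutes_unacked: float) -> List[str]:
    """Everyone who should have been paged after `minutes_unacked` without an ack."""
    paged: List[str] = []
    deadline = 0.0
    for step in chain:
        if minutes_unacked < deadline:
            break  # earlier steps' timeouts have not all elapsed yet
        paged.append(step.responder)
        deadline += step.ack_timeout_minutes
    return paged
```

With a chain of primary (5 minutes), secondary (10 minutes), and manager (15 minutes), an alert unacknowledged for 5 minutes pages primary and secondary, and at 15 minutes the manager is added.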

Self-Healing Actions

For common issues with known fixes, implement automated responses:

Restart crashed services

Scale up resources during traffic spikes

Clear cache when memory usage exceeds thresholds

Failover to backup systems

Always log automated actions and notify teams when self-healing occurs, so they can investigate root causes later.
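A self-healing layer can be as simple as a playbook that maps known alert types to known fixes, wrapped so every run is logged and announced. In this sketch the remediation functions are hypothetical stand-ins that only describe what they would do; real ones would call your orchestrator, cloud API, or cache client. The `notify` callback is likewise an assumed hook for your chat or paging integration.

```python
import logging
from typing import Callable, Dict

log = logging.getLogger("self_healing")


# Hypothetical remediations; each returns a description of what it did.
def restart_service(alert: dict) -> str:
    return f"restarted {alert['service']}"


def scale_up(alert: dict) -> str:
    return f"added capacity to {alert['service']}"


def clear_cache(alert: dict) -> str:
    return f"flushed cache for {alert['service']}"


def fail_over(alert: dict) -> str:
    return f"failed {alert['service']} over to standby"


# Known issue (alert check name) -> known fix
PLAYBOOK: Dict[str, Callable[[dict], str]] = {
    "process_crashed": restart_service,
    "traffic_spike": scale_up,
    "cache_memory_high": clear_cache,
    "primary_unreachable": fail_over,
}


def try_self_heal(alert: dict, notify: Callable[[str], None]) -> bool:
    """Run the known fix if there is one; always log and tell the team."""
    action = PLAYBOOK.get(alert["check"])
    if action is None:
        return False  # no known fix: leave it to a human
    try:
        result = action(alert)
    except Exception:
        log.exception("self-healing failed for %s", alert["service"])
        notify(f"Self-healing FAILED for {alert['service']}; escalate to on-call")
        return False
    log.info("self-healing for %s: %s", alert["service"], result)
    notify(f"Self-healed {alert['service']} ({result}); please investigate root cause")
    return True
```

Returning `False` for both "no known fix" and "fix failed" lets the caller fall through to normal paging in either case.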

Integration with Incident Management

Your alerting system should seamlessly integrate with your broader incident management workflow. When an alert fires, it should automatically:

Create an incident ticket with relevant context

Pull in recent logs and metrics

Notify stakeholders based on impact

Start recording a timeline for the post-mortem
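The four steps above can be sketched as a single function that turns a fired alert into an incident record. Everything here is an assumption for illustration: the alert fields, the severity-to-stakeholder map, and `fetch_recent_logs`, a hypothetical callable standing in for your log store's query API. A real integration would write to your ticketing or incident tool instead of returning an in-memory object.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List, Tuple

# Hypothetical: who hears about an incident, by severity (impact)
STAKEHOLDERS_BY_SEVERITY: Dict[str, List[str]] = {
    "critical": ["oncall", "eng-manager", "support-lead"],
    "high": ["oncall", "eng-manager"],
    "medium": ["oncall"],
    "low": [],
}


@dataclass
class Incident:
    title: str
    context: dict
    stakeholders: List[str]
    timeline: List[Tuple[str, str]] = field(default_factory=list)

    def record(self, event: str) -> None:
        """Append a UTC-timestamped entry for the post-mortem timeline."""
        self.timeline.append((datetime.now(timezone.utc).isoformat(), event))


def open_incident(alert: dict, fetch_recent_logs: Callable[[str], List[str]]) -> Incident:
    """Turn a fired alert into an incident with context attached up front."""
    incident = Incident(
        title=f"[{alert['severity'].upper()}] {alert['service']}: {alert['summary']}",
        context={
            "alert": alert,
            "recent_logs": fetch_recent_logs(alert["service"]),
            "dashboard": alert.get("dashboard_url"),  # link to recent metrics
        },
        stakeholders=STAKEHOLDERS_BY_SEVERITY.get(alert["severity"], ["oncall"]),
    )
    incident.record(f"alert fired: {alert['summary']}")
    incident.record("notified: " + (", ".join(incident.stakeholders) or "nobody"))
    return incident
```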

For teams looking to modernize their approach, exploring next-gen incident management strategies for DevOps can provide valuable insights into building more resilient systems.

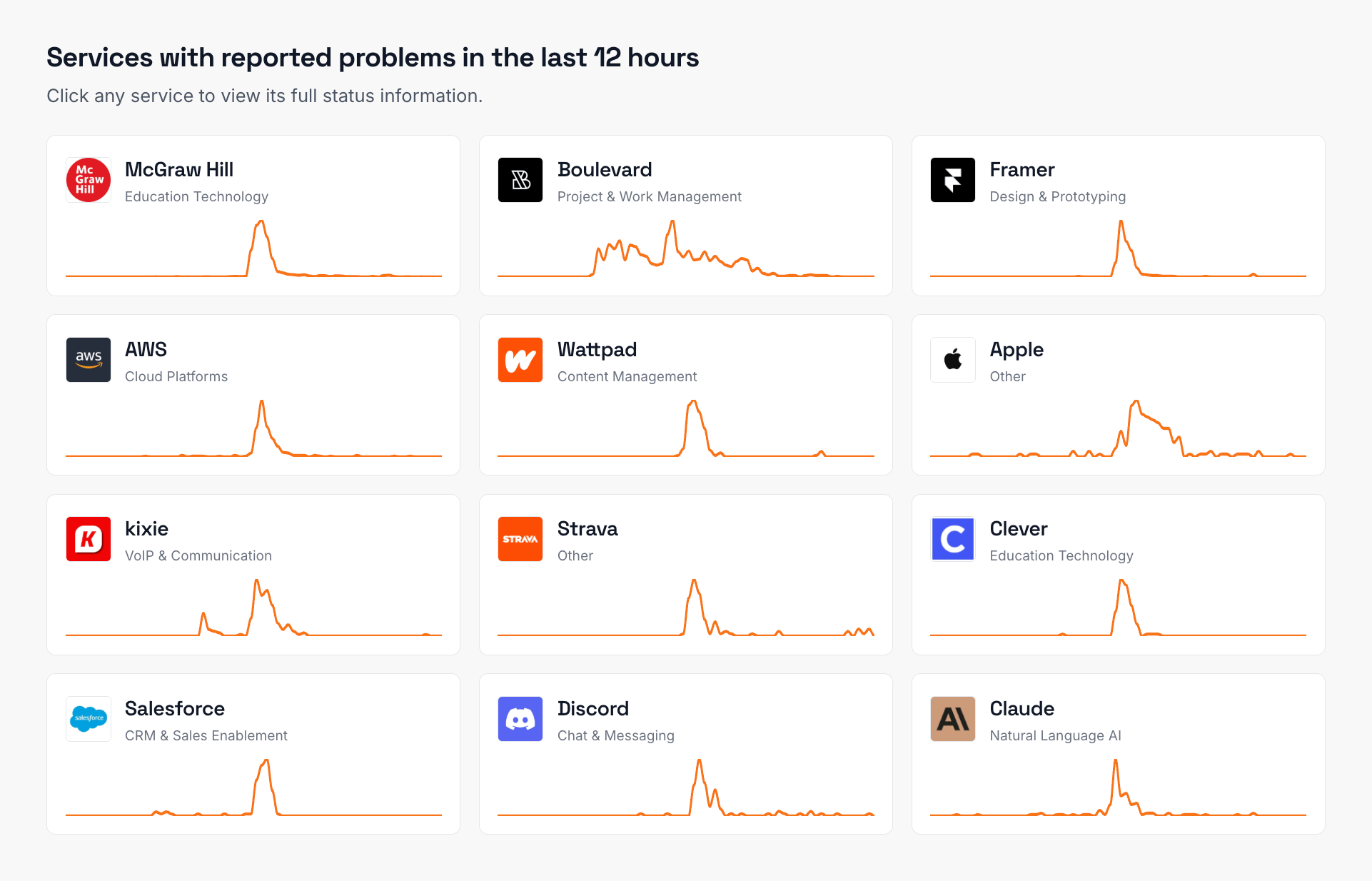

Monitoring External Dependencies

Your incident alerting system isn't complete if it only monitors internal services. Modern applications rely on dozens of external services, from payment processors to CDNs. When these fail, your users suffer even though your systems are running perfectly.

Implement external service monitoring that:

Tracks status pages of critical vendors

Alerts on vendor outages that could impact your services

Provides context about which features might be affected

Enables proactive communication with customers

Tools like IsDown aggregate status information from hundreds of services, allowing you to monitor all your dependencies from a single dashboard and receive alerts when vendors experience issues.
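If you want to see what the underlying check looks like for a single vendor, many vendors host their status page on Atlassian Statuspage, which exposes a JSON summary (commonly at `/api/v2/status.json`) with a `status.indicator` of `none`, `minor`, `major`, or `critical`. The sketch below assumes that format; the vendor name, URL, and feature list are hypothetical, and vendors on other status-page products will need different parsing.

```python
import json
import urllib.request
from typing import Dict, Optional

# Hypothetical map: vendor -> status JSON URL and your features that depend on it
VENDORS: Dict[str, dict] = {
    "payments-vendor": {
        "url": "https://status.example.com/api/v2/status.json",
        "features": ["checkout", "subscriptions"],
    },
}

PAGE_ON = {"major", "critical"}  # indicators worth paging for; others just notify


def fetch_status(url: str, timeout: float = 10.0) -> dict:
    """Download and parse a Statuspage-style status.json document."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def evaluate(vendor: str, payload: dict) -> Optional[str]:
    """Return an alert message for a degraded vendor, or None if all is well."""
    status = payload.get("status", {})
    indicator = status.get("indicator", "unknown")
    if indicator == "none":
        return None
    features = ", ".join(VENDORS[vendor]["features"])
    level = "PAGE" if indicator in PAGE_ON else "NOTIFY"
    description = status.get("description", "no description")
    return f"{level}: {vendor} reports '{description}' ({indicator}); may affect: {features}"
```

Listing the dependent features in the message gives responders and support staff the customer-facing context the list above calls for.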

Alert Context and Runbooks

An alert without context is just noise. Every alert should include:

Essential Information

What service or component is affected

Current status and how it differs from normal

Potential user impact

Recent changes that might be related

Links to relevant dashboards and logs
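One way to enforce this checklist is to make the essential information a required structure and reject or flag alerts that leave fields empty. The field names below are hypothetical and map one-to-one onto the list above; `recent_changes` is allowed to be empty because "no recent changes" is itself useful information.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class AlertContext:
    component: str             # what service or component is affected
    current_value: str         # current status...
    normal_value: str          # ...and what normal looks like
    user_impact: str           # potential user impact
    recent_changes: List[str]  # deploys or config changes in the lookback window
    dashboard_url: str
    logs_url: str

    def missing_fields(self) -> List[str]:
        """Names of required fields left empty (recent_changes may be empty)."""
        return [f.name for f in fields(self)
                if f.name != "recent_changes" and not getattr(self, f.name)]

    def render(self) -> str:
        changes = "; ".join(self.recent_changes) or "none found"
        return (f"{self.component}: {self.current_value} (normal: {self.normal_value})\n"
                f"Impact: {self.user_impact}\n"
                f"Recent changes: {changes}\n"
                f"Dashboard: {self.dashboard_url}\n"
                f"Logs: {self.logs_url}")
```

Running `missing_fields()` in CI against your alert definitions is one way to catch context-free alerts before they page anyone.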

Runbook Integration

Embed runbook links directly in alerts. When an engineer receives an alert at 3 AM, they shouldn't have to search for troubleshooting steps. Include:

Quick diagnostic commands

Common resolution steps

Escalation procedures

Rollback instructions if needed
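Beyond a link, the first few runbook steps can travel inside the notification itself. The sketch below renders a structured runbook into alert text; the wiki URL, namespace, deployment name, and Slack-style channel are hypothetical, while the `kubectl` commands shown in the usage example are standard subcommands.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Runbook:
    url: str
    diagnostics: List[str]  # quick diagnostic commands
    resolution: List[str]   # common resolution steps
    escalation: str         # who to escalate to
    rollback: List[str] = field(default_factory=list)  # rollback commands, if any

    def to_notification(self, alert_title: str) -> str:
        lines = [alert_title, f"Runbook: {self.url}", "Diagnose:"]
        lines += [f"  $ {cmd}" for cmd in self.diagnostics]
        lines.append("Fix:")
        lines += [f"  {i}. {step}" for i, step in enumerate(self.resolution, 1)]
        lines.append(f"Escalate: {self.escalation}")
        if self.rollback:
            lines.append("Rollback:")
            lines += [f"  $ {cmd}" for cmd in self.rollback]
        return "\n".join(lines)


rb = Runbook(
    url="https://wiki.example.com/runbooks/checkout-5xx",
    diagnostics=["kubectl get pods -n checkout", "kubectl logs deployment/checkout -n checkout --tail=100"],
    resolution=["Check whether the latest deploy lines up with the error spike",
                "Roll back if it does"],
    escalation="#payments-oncall",
    rollback=["kubectl rollout undo deployment/checkout -n checkout"],
)
```

`rb.to_notification("[CRITICAL] checkout 5xx")` produces a message an engineer can act on at 3 AM without opening a browser first.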

Measuring Alert Effectiveness

You can't improve what you don't measure. Track these metrics to optimize your alerting system:

Alert accuracy: What percentage of alerts indicate real problems versus false positives?

Response time: How quickly do teams acknowledge and begin investigating alerts?

Resolution time: How long from alert to resolution?

Alert volume: Are certain services generating excessive alerts?

Coverage gaps: What incidents occurred without generating alerts?

These metrics directly influence your team’s Mean Time to Resolution (MTTR). For practical ways to improve it, see Creating an MTTR Reduction Strategy for Your SRE Team.
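The five metrics above can be computed from a plain list of alert records. This sketch assumes you can export, per alert, when it fired, when it was acknowledged and resolved, and whether a human judged it actionable; coverage additionally needs an incident count from your post-mortems. The record shape is hypothetical, and MTTR here is measured from alert to resolution as defined above.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import fmean
from typing import List, Optional


@dataclass
class AlertRecord:
    service: str
    fired_at: float               # epoch seconds
    acked_at: Optional[float]     # None if never acknowledged
    resolved_at: Optional[float]  # None if never resolved (e.g. a false positive)
    actionable: bool              # did it indicate a real problem?


def summarize(records: List[AlertRecord], incidents_total: int, incidents_alerted: int) -> dict:
    acked = [r.acked_at - r.fired_at for r in records if r.acked_at is not None]
    resolved = [r.resolved_at - r.fired_at for r in records
                if r.actionable and r.resolved_at is not None]
    return {
        "accuracy": sum(r.actionable for r in records) / len(records) if records else None,
        "mtta_minutes": fmean(acked) / 60 if acked else None,     # response time
        "mttr_minutes": fmean(resolved) / 60 if resolved else None,  # alert to resolution
        "volume_by_service": dict(Counter(r.service for r in records)),
        # Share of incidents that were caught by an alert; the rest are coverage gaps.
        "coverage": incidents_alerted / incidents_total if incidents_total else None,
    }
```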

Building a Culture of Continuous Improvement

Effective incident alerting requires ongoing refinement. After each incident:

Review whether alerts fired appropriately

Identify any missing alerts that would have helped

Adjust thresholds based on lessons learned

Update runbooks with new troubleshooting steps

Share knowledge across teams

Encourage engineers to suggest alert improvements without fear of criticism. The engineer who gets woken up by a bad alert is best positioned to fix it.

Common Pitfalls to Avoid

As you build your incident alert management system, watch out for these common mistakes:

Over-alerting: Starting with too many alerts leads to fatigue. Begin with critical alerts and add more gradually.

Under-documenting: Alerts without context or runbooks waste precious response time.

Ignoring maintenance: Alert rules need regular updates as systems evolve.

Single points of failure: Ensure your alerting system itself is highly available and monitored.

Lack of testing: Regularly test alerts to ensure they still work as expected.
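A common guard against the last two pitfalls is a "dead man's switch": the alerting pipeline sends a deliberate heartbeat through its normal delivery path on a schedule, and an independent watchdog pages if the heartbeat stops. This exercises the pipeline end to end and detects the alerting system itself going quiet. The sketch below shows only the watchdog logic; the 5-minute silence limit is an assumption, and the watchdog should run on infrastructure separate from the system it watches.

```python
import time
from typing import Optional


class DeadMansSwitch:
    """Trips if the alerting pipeline stops delivering its periodic heartbeat.

    Run it somewhere independent of the main alerting stack (different host,
    provider, or region), otherwise it fails along with the thing it watches.
    """

    def __init__(self, max_silence_seconds: float) -> None:
        self.max_silence = max_silence_seconds
        self.last_beat: Optional[float] = None

    def beat(self, now: Optional[float] = None) -> None:
        """Called whenever a heartbeat alert arrives through the normal pipeline."""
        self.last_beat = time.time() if now is None else now

    def is_tripped(self, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        if self.last_beat is None:
            return True  # never heard from the pipeline: treat it as broken
        return now - self.last_beat > self.max_silence
```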

Looking Forward

Incident alerting continues to evolve with advances in machine learning and automation. Future systems will likely feature:

Predictive alerting that warns before failures occur

Natural language interfaces for querying alert history

Automated root cause analysis

Self-tuning thresholds based on historical data

The goal remains constant: getting the right information to the right people at the right time to minimize impact on users and business operations.

Frequently Asked Questions

What's the difference between incident alerting and monitoring?

Monitoring continuously collects data about system health and performance, while incident alerting specifically notifies teams when that data indicates a problem requiring attention. Monitoring is the constant observation; alerting is the tap on the shoulder when something needs fixing.

How many alerts should trigger for a single incident?

Ideally, just one primary alert should fire per incident, with related issues grouped together. Multiple alerts for the same problem create confusion and slow response times. Good incident alert management includes deduplication and correlation to minimize alert storms.

When should alerts wake someone up versus creating a ticket?

Alerts should only wake people for issues that can't wait until business hours and will significantly impact users or revenue if not addressed immediately. Everything else should create tickets for review during normal working hours. This distinction is crucial for preventing on-call burnout.

How do you handle alert fatigue in 24/7 operations?

Combat alert fatigue by implementing strict alert quality standards, rotating on-call duties regularly, providing adequate rest periods, and continuously tuning alerts to reduce false positives. Also ensure your incident alert management system supports flexible routing and escalation.

What's the ideal alert response time?

Response time varies by severity. Critical alerts should be acknowledged within 5 minutes, high-priority within 15 minutes, and medium-priority within an hour. However, these targets should align with your SLAs and business requirements rather than arbitrary standards.

Should every system component have alerts?

No. Alert only on symptoms that directly impact users or indicate imminent failure. Alerting on every component creates noise and makes it harder to identify real problems. Focus on user-facing symptoms and leading indicators of major issues.

Nuno Tomas

Founder of IsDown
