Microservices Failures and Cascading Outages: Prevention Guide

Microservices architecture offers tremendous benefits for scalability and flexibility, but it also introduces new failure modes that can quickly spiral out of control. When one service fails in a distributed system, the impact can cascade across services like dominoes falling, creating widespread outages that affect your entire application. Understanding how these cascading failures occur and implementing the right defensive patterns is crucial for building resilient microservices.

Understanding Cascading Failures in Microservices

A cascading failure occurs when a problem in one microservice triggers failures in dependent services, creating a chain reaction throughout your distributed system. In a monolith, components call each other in-process. Microservices instead communicate constantly over the network, so latency, timeouts, and partial failures in one service can propagate to every service that depends on it.

Consider a typical e-commerce platform with separate microservices for user authentication, product catalog, inventory, and payment processing. If the inventory service becomes unresponsive because of high latency or database issues, the product catalog service, which calls it, might start queuing requests. As these queues fill up, the product catalog service itself slows down, which slows the frontend service and degrades the user experience across the entire platform.

Common Triggers for Cascade Events

Several scenarios commonly trigger cascading outages in microservice architectures:

Resource exhaustion happens when one service consumes excessive CPU, memory, or network resources, starving other services on the same node. This is particularly dangerous in containerized environments where services share underlying resources.

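Timeout misconfiguration creates problems when services in a call chain have incompatible timeout settings. If Service A has a 30-second timeout calling Service B, but Service B has a 60-second timeout calling Service C, A gives up after 30 seconds while B can keep working on the abandoned request for up to another 30. Those orphaned requests hold threads and connections, so they pile up and exhaust connection pools.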

Retry storms occur when services aggressively retry failed requests without exponential backoff. If 100 instances each retry a failed request 5 times immediately, the failing service suddenly faces up to 600 requests (the 100 originals plus 500 retries) instead of 100, which makes recovery far harder.

Cache failures can trigger cascades when a cache service fails and all requests suddenly hit the database directly, overwhelming it with traffic it wasn't designed to handle. Similarly, a DNS outage can trigger a cascade by stopping services from finding each other.
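The timeout mismatch described above can be caught with a simple check over a call chain. The following is a minimal sketch, not a standard tool: the function name `find_timeout_inversions` and the tuple layout `(caller, callee, timeout_seconds)` are assumptions made for illustration.

```python
def find_timeout_inversions(chain):
    """Find hops where a deeper call may outlive the call above it.

    `chain` is a list of (caller, callee, timeout_seconds) tuples ordered
    from the edge of the system to the deepest dependency (hypothetical
    layout, chosen for this example).
    """
    problems = []
    for upstream, downstream in zip(chain, chain[1:]):
        # The downstream timeout should be strictly shorter than the
        # upstream one, otherwise the caller gives up first and the
        # downstream work is orphaned.
        if downstream[2] >= upstream[2]:
            problems.append((upstream, downstream))
    return problems


if __name__ == "__main__":
    chain = [("A", "B", 30.0), ("B", "C", 60.0)]
    for upstream, downstream in find_timeout_inversions(chain):
        print(f"{downstream[0]}->{downstream[1]} ({downstream[2]}s) can outlive "
              f"{upstream[0]}->{upstream[1]} ({upstream[2]}s)")
```

A check like this could run against service configuration in CI, so that a timeout change which inverts the chain is flagged before it is deployed.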

Essential Patterns to Prevent Cascading Failures

Circuit Breaker Pattern

The circuit breaker pattern acts like an electrical circuit breaker, stopping calls to a failing service to give it time to recover. When error rates exceed a threshold, the circuit "opens" and immediately fails fast on new requests rather than waiting for timeouts.

Implement circuit breakers with three states:

Closed: Normal operation, requests pass through

Open: Service is failing, requests fail immediately

Half-open: Testing if service has recovered with limited requests

Configure your circuit breakers with sensible thresholds based on your service's normal error rates and response times.
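The three states can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a production library: it trips on a count of consecutive failures rather than an error rate, it is not thread-safe, and the names `CircuitBreaker`, `CircuitOpenError`, `failure_threshold`, and `reset_timeout` are invented for this example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without attempting it."""


class CircuitBreaker:
    def __init__(self, failure_threshold=5, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.reset_timeout = reset_timeout          # seconds to stay open before a trial call
        self.clock = clock                          # injectable so tests can control time
        self.state = "closed"
        self.failures = 0
        self.opened_at = 0.0

    def call(self, func, *args, **kwargs):
        if self.state == "open":
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit is open, failing fast")
            self.state = "half-open"  # timeout elapsed: let a trial request through
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._on_failure()
            raise
        self._on_success()
        return result

    def _on_failure(self):
        self.failures += 1
        # A failed trial call reopens the circuit immediately.
        if self.state == "half-open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()

    def _on_success(self):
        self.failures = 0
        self.state = "closed"
```

Callers wrap each downstream call, for example `breaker.call(fetch_inventory, sku)`, where `fetch_inventory` stands in for whatever client function the service already has. A real deployment would more likely use a maintained resilience library or service mesh feature than hand-rolled code.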

Bulkhead Pattern

The bulkhead pattern isolates resources to prevent failures from spreading, similar to compartments in a ship's hull. If one section floods, bulkheads contain the damage.

In microservices, implement bulkheads by:

Dedicating separate thread pools for different service calls

Setting connection pool limits per downstream service

Using separate cache instances for critical vs non-critical data

Deploying services across multiple availability zones
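As a rough illustration of the first two items, a bulkhead can be approximated with one semaphore per downstream dependency. This is a sketch under assumptions: the class names `Bulkhead` and `BulkheadFullError` and the choice to reject rather than queue when the compartment is full are illustrative, not taken from a specific library.

```python
import threading


class BulkheadFullError(Exception):
    """Raised when all slots for a dependency are in use."""


class Bulkhead:
    def __init__(self, name, max_concurrent):
        self.name = name
        # One semaphore per downstream service caps its in-flight calls.
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def call(self, func, *args, **kwargs):
        # Reject immediately instead of blocking, so a slow dependency
        # cannot tie up every worker thread in the calling service.
        if not self._slots.acquire(blocking=False):
            raise BulkheadFullError(f"{self.name} bulkhead is full")
        try:
            return func(*args, **kwargs)
        finally:
            self._slots.release()
```

With one `Bulkhead` instance per dependency (say, one for inventory and one for recommendations), a stalled recommendations service can exhaust only its own slots while inventory calls keep flowing.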

Rate Limiting and Throttling

Rate limiting protects services from being overwhelmed by controlling request flow. Implement rate limiting at multiple levels:

Per-client limits prevent any single consumer from monopolizing resources. Use token bucket or sliding window algorithms to smooth traffic spikes.

Global limits protect the service from total overload regardless of source. Set these based on load testing and capacity planning.

Adaptive throttling dynamically adjusts limits based on system health metrics like CPU usage, queue depth, or response latency.
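The token bucket algorithm mentioned above is small enough to sketch directly. The version below is a single-process illustration with assumed names (`TokenBucket`, `rate`, `capacity`). A per-client limiter would keep one bucket per client key, and a distributed limiter would need shared state, which is not shown here.

```python
import time


class TokenBucket:
    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate              # tokens added per second
        self.capacity = capacity      # maximum burst size
        self.clock = clock            # injectable so tests can control time
        self.tokens = float(capacity)
        self.updated_at = clock()

    def allow(self, cost=1.0):
        """Return True and spend tokens if the request fits, else False."""
        now = self.clock()
        elapsed = now - self.updated_at
        self.updated_at = now
        # Refill for the elapsed time, never beyond the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

The `capacity` parameter controls how large a burst is tolerated, while `rate` sets the sustained throughput. Both would normally come from load testing rather than guesswork.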

Smart Retry Strategies

Retries can either help or hurt during outages. Implement intelligent retry logic:

Exponential backoff increases delay between retries exponentially (1s, 2s, 4s, 8s...) to give failing services breathing room to recover.

Jitter adds randomness to retry timing to prevent synchronized retry storms where all clients retry simultaneously.

Retry budgets limit the total number of retries across all operations to prevent retry amplification.

Selective retries only retry on transient errors (network timeouts, 503 errors), not on permanent failures (404, 401 errors).
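Three of these ideas (exponential backoff, jitter, and selective retries) fit in one short sketch. It assumes a hypothetical `TransientError` exception that the caller raises for retryable conditions such as timeouts or 503 responses, and it uses the "full jitter" variant, where each delay is drawn uniformly between zero and the exponential ceiling. Retry budgets are not shown.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, HTTP 503)."""


def backoff_delays(max_retries, base=1.0, cap=30.0, rng=random.random):
    """Yield one jittered delay per retry: rng() * min(cap, base * 2**attempt)."""
    for attempt in range(max_retries):
        yield rng() * min(cap, base * 2 ** attempt)


def call_with_retries(func, max_retries=4, sleep=time.sleep, **backoff):
    """Call func, retrying only on TransientError with jittered backoff."""
    delays = backoff_delays(max_retries, **backoff)
    while True:
        try:
            return func()
        except TransientError:
            delay = next(delays, None)
            if delay is None:
                raise  # retries exhausted: surface the last transient error
            sleep(delay)
        # Any other exception (a 404 or 401 mapped to a different type)
        # propagates immediately without a retry.
```

The `sleep` and `rng` parameters exist only so the behavior can be tested without waiting. In normal use the defaults apply, for example `call_with_retries(lambda: client.get(url))`, where `client` is whatever HTTP client the service already uses.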

Graceful Degradation Strategies

When failures occur, services should degrade gracefully rather than failing completely:

Feature flags allow you to disable non-critical features during incidents. If the recommendation service fails, show popular products instead of personalized ones.

Fallback responses provide cached or default data when real-time data is unavailable. Return last known good values or simplified responses.

Asynchronous processing moves non-critical operations to background queues. Accept orders even if inventory checks are slow, then validate asynchronously.

Partial responses return what you can rather than failing entirely. If user preferences fail to load, return core user data without customization.
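The fallback and partial-response ideas above can be sketched as a small helper. The function names `with_fallback` and `build_profile` and the shape of the returned dictionary are assumptions for illustration. The point is only that an optional dependency failing should mark the response as degraded rather than fail it.

```python
def with_fallback(primary, fallback):
    """Return (value, degraded): primary's result, or fallback's if primary raises."""
    try:
        return primary(), False
    except Exception:
        return fallback(), True


def build_profile(load_core, load_preferences):
    """Partial response: core user data is required, preferences are optional."""
    profile = {"user": load_core()}  # a failure here still fails the request
    prefs, degraded = with_fallback(load_preferences, lambda: {})
    profile["preferences"] = prefs
    profile["degraded"] = degraded   # lets clients and dashboards see the fallback
    return profile
```

Exposing a `degraded` flag is a design choice worth considering: silent fallbacks can hide an outage from both users and monitoring.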

Monitoring and Observability

You can't prevent what you can't see. Comprehensive monitoring helps detect cascade conditions before they spread:

Service mesh metrics track request rates, error rates, and latencies between all service pairs. Watch for sudden spikes or anomalies.

Dependency mapping visualizes service relationships and data flow. Distributed tracing shows how individual requests propagate through your architecture.

Health checks should test actual functionality, not just process liveness. A service might respond to pings while its database connection is broken.

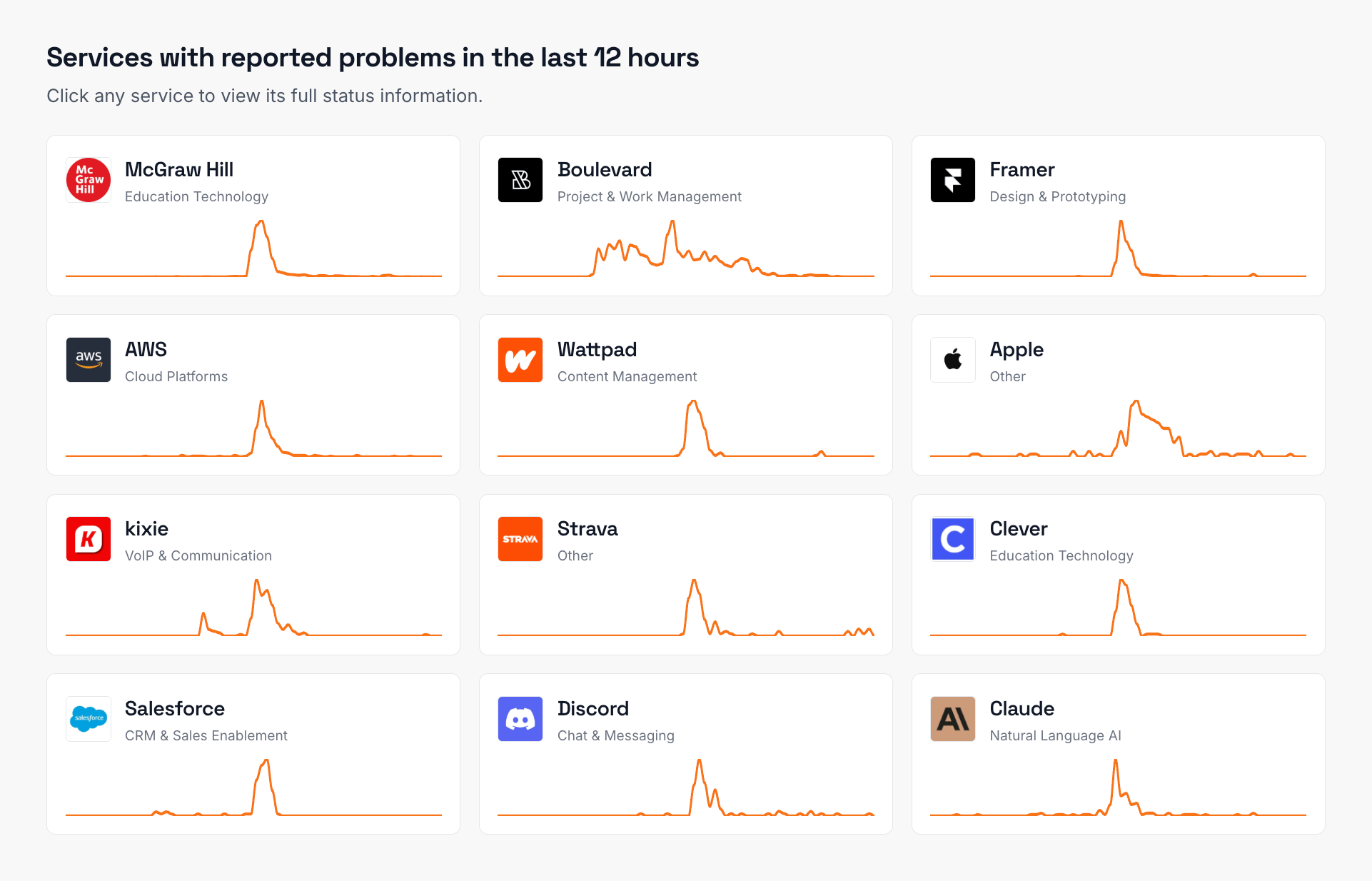

Synthetic monitoring continuously tests critical user journeys to detect problems before customers do. Pairing this with a status page aggregator gives you a unified view of external vendor incidents that might trigger cascading failures in your own services.
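To make the point about health checks concrete, here is a minimal sketch of a check that exercises dependencies instead of only confirming the process is alive. The function name `health_report` and the report format are assumptions. Each check is any callable that raises on failure, such as one that runs a trivial query against the database.

```python
def health_report(checks):
    """Run each named dependency check; a check passes if it returns without raising."""
    results = {}
    for name, check in checks.items():
        try:
            check()
            results[name] = "ok"
        except Exception as exc:
            results[name] = f"failed: {exc}"
    healthy = all(value == "ok" for value in results.values())
    return {"status": "ok" if healthy else "unhealthy", "checks": results}
```

One caution with deep checks: if every instance reports unhealthy because a shared dependency is down, a load balancer may remove all of them at once. Some teams therefore keep a shallow liveness check separate from a deeper readiness check like this one.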

Real-World Implementation Tips

Start with the highest-risk services - those with the most dependencies or highest traffic. For example, a payment gateway outage can immediately halt revenue, making payment processing one of the most critical areas to protect.

Test your resilience patterns regularly through chaos engineering. Randomly inject failures, latency, and resource constraints to verify your defenses work as intended.

Document your service dependencies and failure modes. Create runbooks for common cascade scenarios so your team can respond quickly during incidents.

Implement gradual rollouts for configuration changes. Cascading failures often start with a seemingly innocent timeout adjustment or retry policy change.

Building Long-Term Resilience

Preventing cascading failures requires ongoing effort beyond initial implementation:

Regular architecture reviews help identify new dependencies and failure modes as your system evolves. What worked with 10 microservices might fail with 100.

Capacity planning ensures services have headroom for traffic spikes and retry storms. Plan for 2-3x normal load during partial outages.

Incident learning treats every cascade event as a learning opportunity. Conduct blameless post-mortems to understand root causes and improve defenses.

Service level objectives define acceptable failure rates and recovery times. Use error budgets to balance reliability with development velocity.
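The error budget arithmetic is simple enough to show directly. The sketch below assumes a request-based SLO. The function name `error_budget` and the example figures are made up for illustration.

```python
def error_budget(slo_target, total_requests, failed_requests):
    """Return (allowed_failures, fraction_of_budget_consumed) for one SLO window."""
    # A 99.9% target leaves 0.1% of requests as the budget for failures.
    allowed = (1.0 - slo_target) * total_requests
    consumed = failed_requests / allowed if allowed else float("inf")
    return allowed, consumed


if __name__ == "__main__":
    # Hypothetical window: 1,000,000 requests against a 99.9% SLO, 250 failures.
    allowed, consumed = error_budget(0.999, 1_000_000, 250)
    print(f"allowed failures: {allowed:.0f}, budget consumed: {consumed:.0%}")
```

When the consumed fraction approaches 1, the usual response is to slow feature rollouts and prioritize reliability work until the budget recovers.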

Building resilient microservices architectures requires thoughtful design and continuous refinement. By implementing these patterns and maintaining strong observability, you can prevent small failures from becoming major outages. Remember that in distributed systems, failures are inevitable - but cascading failures are preventable with the right approach.

For teams managing complex microservice deployments with multiple external dependencies, having visibility into third-party service health becomes crucial. When your microservices depend on external APIs, payment gateways, or cloud services, their failures can trigger cascades just as easily as internal service failures.

Frequently Asked Questions

What causes microservices failures and cascading outages most often?

The most common causes of microservices failures and cascading outages include timeout misconfigurations between services, retry storms without proper backoff strategies, resource exhaustion on shared infrastructure, and cache failures that suddenly overwhelm backend databases. These issues often compound when services lack proper circuit breakers or bulkhead isolation.

How do you implement the bulkhead pattern in microservices?

Implement the bulkhead pattern by isolating resources such as thread pools, connection pools, and compute resources for different service calls. For example, allocate separate thread pools for critical vs non-critical service calls, deploy services across multiple availability zones, and set strict connection limits per downstream service to prevent any single failure from consuming all available resources.

What's the difference between fail fast and graceful degradation?

Fail fast means immediately returning an error when a service is unavailable rather than waiting for timeouts, which prevents resource exhaustion and allows quick recovery. Graceful degradation goes further by providing reduced functionality instead of complete failure - like showing cached data, disabling non-essential features, or returning simplified responses when dependent services are down.

How do you prevent retry storms in distributed systems?

Prevent retry storms by implementing exponential backoff with jitter, which spaces out retries over increasing time intervals with random delays. Set retry budgets to limit total retry attempts, use circuit breakers to stop retrying failing services, and ensure all services in your architecture follow consistent retry policies to avoid amplification effects.

What metrics should you monitor to detect potential cascading failures?

Monitor error rates between all service pairs, queue depths and saturation levels, latency percentiles (especially p95 and p99), circuit breaker state changes, retry rates and retry budget consumption, and resource utilization across nodes. Set alerts on unusual patterns like sudden spikes in error rates or latency that could indicate an emerging cascade.

How does asynchronous communication help prevent cascading outages?

Asynchronous communication using message queues or event streams helps prevent cascading outages by decoupling services temporally. When services communicate asynchronously, a slow or failing downstream service doesn't block the upstream service. Messages queue up and can be processed when the service recovers, preventing thread exhaustion and allowing graceful handling of traffic spikes.

Nuno Tomas

Founder of IsDown
