Monitoring Serverless Applications: Complete Guide 2025

Serverless computing has revolutionized how we build and deploy applications, but monitoring serverless applications presents unique challenges that traditional monitoring approaches can't fully address. Without proper observability into your serverless functions, you're flying blind when performance issues arise or cold starts impact user experience.

This comprehensive guide explores the essential strategies, tools, and best practices for effectively monitoring serverless applications across AWS Lambda, Azure Functions, and Google Cloud Functions. Whether you're dealing with distributed tracing challenges or struggling to optimize invocation patterns, you'll learn how to gain complete visibility into your serverless architecture.

Why Monitoring Serverless Applications Is Different

Serverless environments operate fundamentally differently from traditional server-based applications. Your serverless functions execute on-demand, scale automatically, and disappear when not in use. This ephemeral nature creates several monitoring challenges:

Limited execution context: Functions run for milliseconds to minutes, making it difficult to capture meaningful metrics and logs before the execution environment vanishes.

Distributed architecture: Serverless applications typically consist of dozens or hundreds of small functions, each handling specific tasks. Tracing requests across these distributed components requires sophisticated monitoring solutions.

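Cold start latency: When a function hasn't been invoked recently, or traffic outgrows the warm instances available, the platform has to initialize a new execution environment first. These cold starts can add noticeable latency, and monitoring that relies on averages often misses the sporadic spikes they cause.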

Vendor-specific constraints: Each cloud provider implements serverless differently, requiring platform-specific monitoring strategies for AWS Lambda, Azure Functions, or Google Cloud Functions.

In short, serverless functions leave little behind once they finish running, so the metrics, logs, and traces you collect are often the only record of what happened. Without them, issues that affect user experience can go unnoticed across your entire serverless infrastructure.

Core Metrics for Serverless Monitoring

To effectively monitor serverless applications, focus on these essential metrics that provide insight into function health and performance:

Invocation Metrics

Track the number of function invocations to understand usage patterns and identify potential issues:

Total invocations: Monitor overall function activity

Concurrent executions: Ensure you're not hitting concurrency limits

Throttled invocations: Identify when requests are being rejected

Failed invocations: Catch errors before they impact users
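
As a rough illustration of how these counts turn into rates you can chart or alert on, here is a minimal Python sketch. The `InvocationWindow` shape and the sample numbers are assumptions for the example, and it assumes throttled requests are reported separately from executed invocations, which you should confirm for your platform.

```python
from dataclasses import dataclass


@dataclass
class InvocationWindow:
    """Counts for one function over one time window (hypothetical shape)."""
    invocations: int  # requests that actually ran
    errors: int       # invocations that ended in an error
    throttles: int    # requests rejected before running


def error_rate(w: InvocationWindow) -> float:
    """Share of executed invocations that failed."""
    return w.errors / w.invocations if w.invocations else 0.0


def throttle_rate(w: InvocationWindow) -> float:
    """Share of all attempted requests that were rejected by throttling."""
    attempted = w.invocations + w.throttles
    return w.throttles / attempted if attempted else 0.0


window = InvocationWindow(invocations=950, errors=19, throttles=50)
print(f"error rate: {error_rate(window):.1%}")        # error rate: 2.0%
print(f"throttle rate: {throttle_rate(window):.1%}")  # throttle rate: 5.0%
```

Rates are usually more useful than raw counts here, because a count that looks alarming at low traffic can be normal at peak.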

Performance Metrics

Latency and duration metrics help optimize function performance:

Function duration: Track execution time to optimize code and reduce costs

Cold start frequency: Measure how often functions experience initialization delays

End-to-end latency: Monitor the complete request journey, not just function execution

Memory utilization: Right-size your functions for optimal performance

Cost Metrics

Serverless pricing models typically charge per invocation and for execution duration scaled by allocated memory, making cost monitoring critical:

Estimated charges: Track spending as close to real time as your provider's billing data allows

Cost per function: Identify expensive operations

Memory-duration product: Optimize the balance between memory allocation and execution time
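
To make the memory-duration product concrete, the sketch below works through the arithmetic in Python. The two price constants are placeholder values chosen for illustration, not a quote of any provider's current pricing, so substitute the rates from your own price list.

```python
def gb_seconds(memory_mb: float, avg_duration_ms: float, invocations: int) -> float:
    """Memory-duration product: allocated GB multiplied by total seconds executed."""
    return (memory_mb / 1024) * (avg_duration_ms / 1000) * invocations


def estimate_cost(memory_mb, avg_duration_ms, invocations,
                  price_per_gb_second, price_per_million_requests):
    """Compute charge plus request charge for one function over one period."""
    compute = gb_seconds(memory_mb, avg_duration_ms, invocations) * price_per_gb_second
    requests = (invocations / 1_000_000) * price_per_million_requests
    return compute + requests


# Placeholder prices for illustration only; check your provider's price list.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_MILLION_REQUESTS = 0.20

usage = gb_seconds(512, 200, 1_000_000)
cost = estimate_cost(512, 200, 1_000_000,
                     PRICE_PER_GB_SECOND, PRICE_PER_MILLION_REQUESTS)
print(f"{usage:,.0f} GB-seconds, estimated ${cost:.2f}")
# 100,000 GB-seconds, estimated $1.87
```

Note that doubling memory to 1024 MB costs the same in this model if it halves the average duration to 100 ms, which is why memory and duration are worth tuning together.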

Essential Serverless Monitoring Tools

Choosing the right serverless monitoring tool depends on your cloud provider, application complexity, and observability requirements. Here are the leading options:

Cloud-Native Solutions

AWS CloudWatch provides native monitoring for AWS Lambda with automatic log collection, custom metrics, and CloudWatch Logs Insights for log analysis. While it offers deep integration with AWS services, you'll need to add AWS X-Ray or a third-party tool for distributed tracing.

Azure Monitor delivers comprehensive monitoring for Azure Functions, including Application Insights for application performance monitoring. It excels at tracking dependencies and providing end-to-end transaction views.

Google Cloud Monitoring offers robust observability for Google Cloud Functions with automatic trace collection and integrated logging. Its strength lies in seamless integration with other Google Cloud services.

Third-Party Monitoring Solutions

Specialized serverless monitoring tools provide advanced features and bring data from multiple services into a single dashboard for unified visibility:

Datadog: Offers unified monitoring across multiple cloud providers with automatic instrumentation

New Relic: Provides deep insights into function performance with distributed tracing capabilities

Lumigo: Purpose-built for serverless with automatic distributed tracing and debugging features

Thundra: Focuses on application-level observability with advanced debugging capabilities

When evaluating tools, consider factors like automatic instrumentation, distributed tracing support, and integration with your existing monitoring infrastructure. For teams managing complex multi-cloud environments, building a multi-region monitoring strategy becomes essential for maintaining visibility across all serverless deployments.

Implementing Distributed Tracing

Distributed tracing is crucial for understanding how requests flow through your serverless architecture. AWS X-Ray, Azure Application Insights, and Google Cloud Trace provide native tracing capabilities, but implementing them effectively requires careful planning.

Setting Up Tracing

Start by instrumenting your functions to capture trace data:

Enable tracing in your function configuration

Add correlation IDs to track requests across services

Instrument external calls to databases, APIs, and other services

Capture custom segments for business-critical operations
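
The correlation ID step is the easiest one to get wrong, so here is a minimal Python sketch of one way to propagate an ID through a function. The header name `x-correlation-id`, the event shape with a `headers` dictionary, and the handler signature are assumptions for illustration; native tracing services carry their own trace headers, which this does not replace.

```python
import uuid

CORRELATION_HEADER = "x-correlation-id"  # hypothetical header name


def get_correlation_id(event: dict) -> str:
    """Reuse the caller's correlation ID, or start a new one at the edge."""
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    return headers.get(CORRELATION_HEADER) or str(uuid.uuid4())


def outgoing_headers(correlation_id: str) -> dict:
    """Headers to attach to every downstream call made by this function."""
    return {CORRELATION_HEADER: correlation_id}


def handler(event, context=None):
    correlation_id = get_correlation_id(event)
    # Pass outgoing_headers(correlation_id) to any HTTP client call or
    # queue message sent from here, and include the ID in every log line.
    return {"statusCode": 200, "headers": outgoing_headers(correlation_id)}
```

The important design choice is that only the first function in a request path generates an ID; every function after it reuses whatever it received.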

Analyzing Trace Data

Use trace data to identify performance bottlenecks:

Service maps: Visualize dependencies between functions and services

Latency analysis: Identify slow components in request paths

Error tracking: Pinpoint where failures occur in distributed transactions
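
If you export trace data for your own analysis, latency and error questions reduce to simple aggregation. The Python sketch below assumes a simplified span format (`service`, `name`, `duration_ms`, `error`) invented for this example; real tracing services return richer structures.

```python
from collections import defaultdict


def time_by_service(spans, top=3):
    """Total time spent per service in one trace, slowest first."""
    totals = defaultdict(float)
    for span in spans:
        totals[span["service"]] += span["duration_ms"]
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:top]


def failing_spans(spans):
    """Names of the spans where an error was recorded."""
    return [span["name"] for span in spans if span.get("error")]


# Hypothetical spans from a single request.
trace = [
    {"service": "api-gateway", "name": "POST /orders", "duration_ms": 12, "error": False},
    {"service": "order-function", "name": "validate", "duration_ms": 35, "error": False},
    {"service": "database", "name": "insert order", "duration_ms": 180, "error": False},
    {"service": "payment-api", "name": "charge card", "duration_ms": 420, "error": True},
    {"service": "database", "name": "update status", "duration_ms": 95, "error": False},
]

print(time_by_service(trace, top=2))  # [('payment-api', 420.0), ('database', 275.0)]
print(failing_spans(trace))           # ['charge card']
```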

Building Effective Dashboards

A well-designed dashboard provides instant visibility into your serverless environment. Structure your dashboards to support different use cases:

Operations Dashboard

Create a high-level view for monitoring overall system health:

Function invocation rates and error percentages

Latency percentiles (p50, p95, p99)

Concurrent execution metrics

Cost trends and projections
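
Percentiles matter on this dashboard because averages hide tail latency. The following Python sketch uses the nearest-rank method on a made-up set of durations; monitoring platforms may use interpolation or approximate sketches instead, so treat the exact values as illustrative.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least
    pct percent of all samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical durations in milliseconds; two requests hit cold starts.
durations_ms = [12, 15, 11, 14, 250, 13, 16, 12, 18, 900]

print("mean:", sum(durations_ms) / len(durations_ms))  # mean: 126.1
print("p50:", percentile(durations_ms, 50))             # p50: 14
print("p95:", percentile(durations_ms, 95))             # p95: 900
print("p99:", percentile(durations_ms, 99))             # p99: 900
```

Here the mean of 126.1 ms describes no real request: most finish in about 14 ms, while the slowest take 900 ms.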

Performance Dashboard

Focus on optimization opportunities:

Cold start frequency by function

Memory utilization patterns

Duration distribution histograms

Throttling and timeout incidents

Troubleshooting Dashboard

Enable rapid problem diagnosis:

Recent errors with stack traces

Anomaly detection alerts

Correlation between metrics and events

Log aggregation with search capabilities

Alerting Strategies for Serverless

Effective alerting helps you catch issues before they impact users. Design your alerts to be actionable and minimize false positives:

Critical Alerts

Set up immediate notifications for:

High error rates: When failure percentage exceeds thresholds

Throttling: When functions hit concurrency limits

Duration spikes: When execution time increases significantly

Cost anomalies: When spending exceeds budgets
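
The four conditions above can be expressed as plain threshold checks. This Python sketch is one possible shape, with hypothetical field names and limits; in practice you would configure equivalent rules in your monitoring platform rather than run this yourself.

```python
def critical_alerts(current: dict, limits: dict) -> list:
    """Return the names of critical conditions that are currently breached."""
    alerts = []
    invocations = current["invocations"]
    if invocations and current["errors"] / invocations > limits["max_error_rate"]:
        alerts.append("high_error_rate")
    if current["throttles"] > 0:
        alerts.append("throttling")
    if current["p95_duration_ms"] > limits["baseline_p95_ms"] * limits["duration_spike_factor"]:
        alerts.append("duration_spike")
    if current["spend_to_date"] > limits["budget"]:
        alerts.append("cost_anomaly")
    return alerts


# Hypothetical limits and a hypothetical five-minute window of metrics.
limits = {"max_error_rate": 0.05, "baseline_p95_ms": 400,
          "duration_spike_factor": 2.0, "budget": 500}
current = {"invocations": 2000, "errors": 120, "throttles": 0,
           "p95_duration_ms": 850, "spend_to_date": 310}

print(critical_alerts(current, limits))  # ['high_error_rate', 'duration_spike']
```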

Warning Alerts

Monitor trends that might indicate future problems:

Gradual latency increases

Rising cold start frequency

Memory pressure approaching limits

Unusual invocation patterns
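
Gradual changes are hard to catch with fixed thresholds, so one simple approach is to compare a recent window against the window before it. The Python sketch below does that for daily p95 latency; the three-day window, the 10% warning threshold, and the sample history are all assumptions to tune for your own traffic.

```python
from statistics import mean


def latency_drift(daily_p95_ms, window=3):
    """Ratio of the mean of the latest `window` values to the mean
    of the `window` values immediately before them."""
    if len(daily_p95_ms) < 2 * window:
        raise ValueError("need at least two full windows of data")
    recent = mean(daily_p95_ms[-window:])
    previous = mean(daily_p95_ms[-2 * window:-window])
    return recent / previous


history = [100, 102, 101, 110, 118, 126]  # hypothetical daily p95 in ms
drift = latency_drift(history)
print(f"drift: {drift:.2f}")  # drift: 1.17

WARN_ABOVE = 1.10  # assumed threshold: warn when latency rose more than 10%
if drift > WARN_ABOVE:
    print("warning: p95 latency is trending upward")
```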

Alert Routing

Automate alert delivery to the right teams:

Use severity levels to determine notification channels

Route alerts based on function ownership

Integrate with incident management platforms

Include runbook links in alert messages
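
These routing rules can be sketched as a pair of lookup tables. Everything named in this Python example (channels, team names, function names, and the runbook URL) is a placeholder; incident management platforms usually provide this mapping as configuration.

```python
# All names below are placeholders for illustration.
SEVERITY_CHANNELS = {
    "critical": ["pager", "chat"],
    "warning": ["chat"],
    "info": ["email"],
}
FUNCTION_OWNERS = {"checkout-handler": "payments", "image-resizer": "media"}
DEFAULT_TEAM = "platform"
RUNBOOK_BASE_URL = "https://runbooks.example.com"


def route_alert(alert: dict) -> dict:
    """Pick the owning team and channels, and attach a runbook link."""
    function = alert["function"]
    severity = alert["severity"]
    return {
        "team": FUNCTION_OWNERS.get(function, DEFAULT_TEAM),
        "channels": SEVERITY_CHANNELS.get(severity, ["email"]),
        "message": (f"[{severity.upper()}] {function}: {alert['summary']} "
                    f"(runbook: {RUNBOOK_BASE_URL}/{function})"),
    }


routed = route_alert({"function": "checkout-handler", "severity": "critical",
                      "summary": "error rate above 5%"})
print(routed["team"], routed["channels"])  # payments ['pager', 'chat']
print(routed["message"])
```

Falling back to a default team for unowned functions keeps alerts from being silently dropped when ownership data is incomplete.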

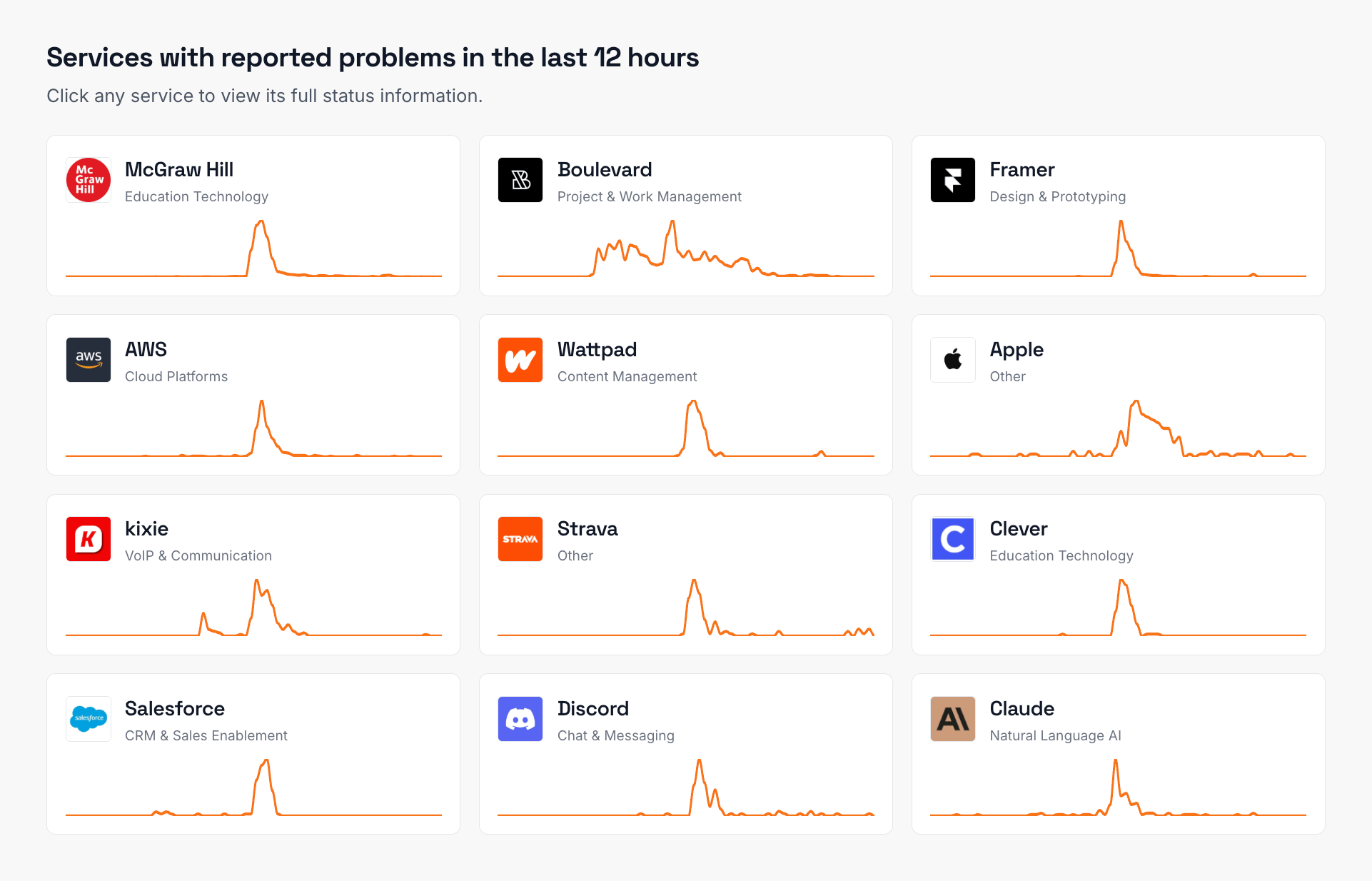

With well-structured alerts and thoughtful routing, serverless teams can stay ahead of problems, reduce mean time to resolution, and maintain reliable user experiences. Incorporating third-party outage monitoring adds another layer of resilience by covering the external services your applications depend on.

Optimizing Cold Starts

Cold starts remain one of the biggest challenges in serverless applications. Monitor and optimize them systematically:

Measuring Cold Start Impact

Track cold start metrics to understand their effect:

Frequency of cold starts per function

Additional latency introduced

Correlation with traffic patterns

Impact on user experience metrics
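
If your platform doesn't report cold starts directly, a common technique is to flag them yourself with a module-level variable, since module code runs once per execution environment. The Python sketch below assumes a generic `handler(event, context)` signature and prints a JSON line as a stand-in for whatever metric or log pipeline you use.

```python
import json
import time

# Module scope runs once per execution environment, so this flag is
# True only for the first invocation that environment handles.
_is_cold = True


def handler(event, context=None):
    global _is_cold
    cold_start, _is_cold = _is_cold, False

    started = time.monotonic()
    # ... business logic would run here ...
    duration_ms = (time.monotonic() - started) * 1000

    # Emit one structured line per invocation for the monitoring pipeline.
    print(json.dumps({
        "metric": "invocation",
        "cold_start": cold_start,
        "duration_ms": round(duration_ms, 3),
    }))
    return {"cold_start": cold_start}
```

This captures cold start frequency only. The initialization time itself happens before the handler runs, so measuring the added latency still depends on platform metrics or end-to-end timing.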

Reduction Strategies

Implement techniques to minimize cold starts:

Provisioned concurrency: Keep functions warm for critical paths

Scheduled warming: Use periodic invocations to prevent cooling

Code optimization: Reduce initialization time

Runtime selection: Choose faster-starting runtimes

Log Management Best Practices

Logs provide essential context for troubleshooting serverless applications. Implement structured logging to maximize their value:

Structured Logging

Adopt consistent log formats:

Use JSON for machine-readable logs

Include correlation IDs in every log entry

Add contextual metadata (function name, version, request ID)

Standardize error logging across functions
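
Here is a minimal sketch of these conventions using Python's standard `logging` module. The field names, the logger name, and the sample values are assumptions for illustration; many teams use an existing structured logging library instead of writing a formatter by hand.

```python
import json
import logging
from datetime import datetime, timezone

# Context fields we expect callers to pass through `extra` (assumed names).
CONTEXT_FIELDS = ("function_name", "function_version", "request_id", "correlation_id")


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Copy any context fields that were attached to this record.
        for field in CONTEXT_FIELDS:
            if hasattr(record, field):
                entry[field] = getattr(record, field)
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


logger = logging.getLogger("orders")  # hypothetical logger name
stream_handler = logging.StreamHandler()
stream_handler.setFormatter(JsonFormatter())
logger.addHandler(stream_handler)
logger.setLevel(logging.INFO)

logger.info("order created", extra={
    "function_name": "create-order",
    "function_version": "12",
    "request_id": "req-001",
    "correlation_id": "abc-123",
})
```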

Log Aggregation

Centralize logs for easier analysis:

Stream logs to a central platform

Set up log retention policies

Create indexes for common queries

Implement log sampling for high-volume functions
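
For log sampling, one option is to decide per request rather than per log line, so a sampled request keeps all of its entries. The Python sketch below hashes the request ID to make that decision deterministic; the function name, the 10% rate, and the rule that warnings and errors are always kept are assumptions for illustration.

```python
import hashlib

ALWAYS_KEEP = {"WARN", "WARNING", "ERROR"}


def should_log(request_id: str, sample_rate: float, level: str = "INFO") -> bool:
    """Keep every warning and error; keep other entries only for a
    stable fraction of requests, chosen by hashing the request ID."""
    if level.upper() in ALWAYS_KEEP:
        return True
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # value in [0, 1)
    return bucket < sample_rate


# Roughly 10% of these hypothetical request IDs keep their INFO logs.
kept = sum(should_log(f"req-{i}", 0.10) for i in range(10_000))
print(f"kept INFO logs for {kept} of 10000 requests")
```

Because the decision depends only on the request ID, every function that handles the same request makes the same choice, provided they share the ID and the sample rate.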

Cost Management

Control logging costs in high-scale environments:

Use log levels appropriately (ERROR, WARN, INFO, DEBUG)

Implement dynamic log level adjustment

Archive old logs to cheaper storage

Monitor log volume metrics
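
Dynamic log level adjustment can be as simple as resolving the level on each invocation instead of hard-coding it. This Python sketch reads a default from a `LOG_LEVEL` environment variable and allows a per-request override through an `x-log-level` header; both names are hypothetical, and an override like this should only be honored for trusted callers.

```python
import logging
import os

LEVELS = {
    "DEBUG": logging.DEBUG,
    "INFO": logging.INFO,
    "WARN": logging.WARNING,
    "WARNING": logging.WARNING,
    "ERROR": logging.ERROR,
}


def resolve_log_level(event: dict, default: str = "INFO") -> int:
    """Per-request override first, then the environment, then the default."""
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    requested = headers.get("x-log-level") or os.environ.get("LOG_LEVEL", default)
    return LEVELS.get(str(requested).upper(), logging.INFO)


def handler(event, context=None):
    logger = logging.getLogger("app")
    logger.setLevel(resolve_log_level(event))
    logger.debug("verbose details, emitted only when DEBUG is enabled")
    return {"log_level": logging.getLevelName(logger.level)}
```

This lets you keep production at INFO or higher and switch a single request to DEBUG while investigating an issue.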

Security Monitoring

Serverless applications require specialized security monitoring:

Access Monitoring

Track who's invoking your functions:

Monitor IAM role usage

Track API Gateway authentication

Alert on unauthorized access attempts

Audit function permission changes

Runtime Security

Detect potential security issues:

Monitor for unusual execution patterns

Track external network connections

Alert on privilege escalation attempts

Scan for vulnerable dependencies

Performance Optimization Through Monitoring

Use monitoring data to continuously improve performance:

Identify Optimization Opportunities

Analyze metrics to find improvement areas:

Functions with high execution time

Memory over-provisioning or under-provisioning

Inefficient database queries

Unnecessary external API calls
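
As an example of turning these metrics into a decision, the Python sketch below flags memory over-provisioning or under-provisioning from peak usage. The 40% and 90% thresholds and the sample values are assumptions. On platforms where CPU scales with allocated memory, such as AWS Lambda, lowering memory can also lengthen duration, so check execution time before acting on the result.

```python
def memory_recommendation(configured_mb, peak_used_mb_samples, low=0.40, high=0.90):
    """Classify a function by how much of its configured memory it uses at peak."""
    if not peak_used_mb_samples:
        raise ValueError("no samples")
    utilization = max(peak_used_mb_samples) / configured_mb
    if utilization > high:
        verdict = "under-provisioned"
    elif utilization < low:
        verdict = "over-provisioned"
    else:
        verdict = "ok"
    return verdict, round(utilization, 2)


# Hypothetical peak memory readings (MB) from recent invocations.
print(memory_recommendation(1024, [210, 230, 198]))  # ('over-provisioned', 0.22)
print(memory_recommendation(256, [240, 236, 251]))   # ('under-provisioned', 0.98)
print(memory_recommendation(512, [300, 320, 310]))   # ('ok', 0.62)
```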

Measure Optimization Impact

Track improvements after changes:

Compare before/after performance metrics

Monitor cost reduction

Verify error rates remain stable

Validate user experience improvements

Integrating with Existing Monitoring Infrastructure

Serverless monitoring shouldn't exist in isolation. For organizations already using monitoring platforms, automating triage using external monitoring signals can help correlate serverless issues with broader system problems.

Unified Observability

Create a holistic view of your system:

Correlate serverless metrics with traditional infrastructure

Link function performance to business KPIs

Integrate with existing alerting workflows

Maintain consistent monitoring standards

Future-Proofing Your Serverless Monitoring

As serverless platforms evolve, your monitoring strategy must adapt:

Emerging Trends

Stay ahead of monitoring challenges:

Edge computing: Monitor functions running at edge locations

Multi-cloud deployments: Unified monitoring across providers

AI-powered insights: Automated anomaly detection and root cause analysis

Cost optimization: Predictive cost modeling and optimization recommendations

Continuous Improvement

Regularly review and enhance your monitoring:

Audit dashboard effectiveness quarterly

Update alerts based on incident reviews

Evaluate new monitoring tools and features

Train teams on monitoring best practices

Frequently Asked Questions

What metrics are most important when monitoring serverless applications?

The most critical metrics for serverless monitoring include invocation count, error rate, duration, cold start frequency, and concurrent executions. These metrics provide insights into function health, performance issues, and potential bottlenecks. Cost-related metrics like estimated charges per function are also essential for budget management.

How do I effectively monitor AWS Lambda functions?

Start with AWS CloudWatch for basic metrics and logs, then add AWS X-Ray for distributed tracing. Configure custom metrics for business-specific KPIs and set up CloudWatch alarms for critical thresholds. Consider third-party tools like Datadog or New Relic for advanced features and cross-service correlation.

What's the best way to handle cold starts in serverless monitoring?

Monitor cold start frequency and duration using custom metrics or specialized monitoring tools. Implement provisioned concurrency for critical functions, use scheduled warming for predictable traffic patterns, and optimize your code to reduce initialization time. Track the correlation between cold starts and user experience metrics.

How can I reduce monitoring costs for high-volume serverless applications?

Implement log sampling for high-frequency functions, use appropriate log levels, and set retention policies based on data value. Aggregate metrics at the source before sending them to monitoring platforms, and compare the pricing of cloud-native monitoring solutions, which may be cheaper for serverless workloads.

Which serverless monitoring tool should I choose for multi-cloud environments?

For multi-cloud serverless deployments, consider vendor-agnostic tools like Datadog, New Relic, or Lumigo that provide unified monitoring across AWS, Azure, and Google Cloud. These tools offer standardized dashboards and alerting regardless of the underlying cloud provider.

How do I implement distributed tracing across serverless functions?

Use correlation IDs to track requests across functions, enable native tracing services (AWS X-Ray, Azure Application Insights, Google Cloud Trace), and instrument your code to capture custom trace segments. Ensure all functions in a request path are properly instrumented, and use trace analysis tools to visualize the complete request flow.

Nuno Tomas

Founder of IsDown
