Observability vs Monitoring vs Telemetry: Key Differences

Modern software systems are no longer built as a single unit. They are made up of many moving parts that run across multiple services, environments, and teams, which makes it harder to keep track of system behavior and detect issues quickly.

Traditional monitoring on its own is no longer enough. It tracks performance and alerts you when something breaks, but it doesn't explain why the problem happened or where it started. That's where telemetry and observability come in alongside monitoring. Together, these three concepts give better visibility into system health and performance. They help teams detect problems earlier, understand the impact faster, and resolve them more effectively.

In this article, we'll explore the differences between observability, monitoring, and telemetry. You'll learn how each one works, how they connect, and why using them together is key to managing today's complex systems.

What Is Telemetry?

Telemetry is the foundation that powers both monitoring and observability. Without it, there would be no data points to track, analyze, or troubleshoot. Telemetry is the automatic collection and transmission of data from systems, devices, or services to a central platform. It gives teams insight into system behavior and supports real-time visibility, which is especially important in distributed systems.

The Role of Telemetry in System Data Collection

Telemetry gathers data from various sources, including applications, servers, cloud platforms, and IoT devices. Because the process requires no manual input, it makes monitoring applications and large infrastructures more efficient. Commonly collected types of telemetry data include:

- Metrics, like CPU usage or memory load

- Logs, which record events and system activities

- Traces, which follow requests as they move through different services

This collected data powers monitoring tools to detect known issues and enables observability platforms to explore system problems in depth. In short, telemetry provides the raw data needed to track system health and performance.
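As a rough illustration of the three data types above, the following sketch models a metric, a log record, and a trace span as plain Python dataclasses and sends them to a "central platform". All names here (`Metric`, `LogRecord`, `Span`, `emit`, and the list used as a sink) are hypothetical and chosen for this example only; real telemetry libraries define their own types and transports.

```python
from dataclasses import dataclass, field
import time


@dataclass
class Metric:
    """A numeric measurement, such as CPU usage."""
    name: str
    value: float
    timestamp: float = field(default_factory=time.time)


@dataclass
class LogRecord:
    """A text record of an event or system activity."""
    message: str
    level: str = "INFO"
    timestamp: float = field(default_factory=time.time)


@dataclass
class Span:
    """One step of a request as it moves through a service."""
    trace_id: str
    name: str
    duration_ms: float


def emit(signal, sink):
    """Send a signal to the sink (a plain list standing in for a central platform)."""
    sink.append(signal)


sink = []
emit(Metric("cpu_usage_percent", 72.5), sink)
emit(LogRecord("user login succeeded"), sink)
emit(Span("trace-1", "GET /checkout", 120.0), sink)

for signal in sink:
    print(type(signal).__name__, signal)
```

In a real deployment the sink would be a network exporter rather than an in-memory list, but the shape of the data is similar.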

Examples of Telemetry in Modern Systems

Telemetry is used in both software and hardware environments. Here are some real-world examples:

- In cloud platforms, it tracks system performance and helps ensure service availability.

- In performance monitoring, telemetry identifies error rates and slow services.

- In remote equipment, such as satellites or industrial machines, telemetry sends data back to a central system.

- In containerized setups, telemetry tools like OpenTelemetry collect traces, metrics, and logs from applications in Kubernetes.

These examples show that telemetry is flexible. It works across simple setups and complex systems.

Advantages and Disadvantages of Telemetry

Advantages:

- Enables real-time data collection and quick issue detection

- Supports automation and reduces manual work

- Works well across distributed systems and complex environments

Disadvantages:

- Can lead to data overload without proper filtering

- May cause storage and processing challenges in high-volume systems

- Requires strong security measures, as telemetry may include sensitive data from multiple sources

Telemetry is essential for effective monitoring and gaining a full view of system health, but it must be managed wisely to avoid risks or inefficiencies.
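To make the data overload point more concrete, here is a minimal sketch of one possible filtering strategy: keep every error record and only a fixed fraction of everything else. The function name `reduce_volume`, the `keep_every` parameter, and the record layout are assumptions made for this example, not part of any specific tool.

```python
def reduce_volume(records, keep_every=10):
    """Keep every ERROR record, plus one in `keep_every` of the remaining records."""
    kept = []
    for index, record in enumerate(records):
        if record["level"] == "ERROR" or index % keep_every == 0:
            kept.append(record)
    return kept


# 100 sample records, one of which is an error.
records = [{"level": "INFO", "id": i} for i in range(100)]
records[42]["level"] = "ERROR"

kept = reduce_volume(records)
print(f"kept {len(kept)} of {len(records)} records")
```

The trade-off is that sampled-out records are gone for good, so the sampling rate should be chosen with later investigations in mind.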

What Is Monitoring?

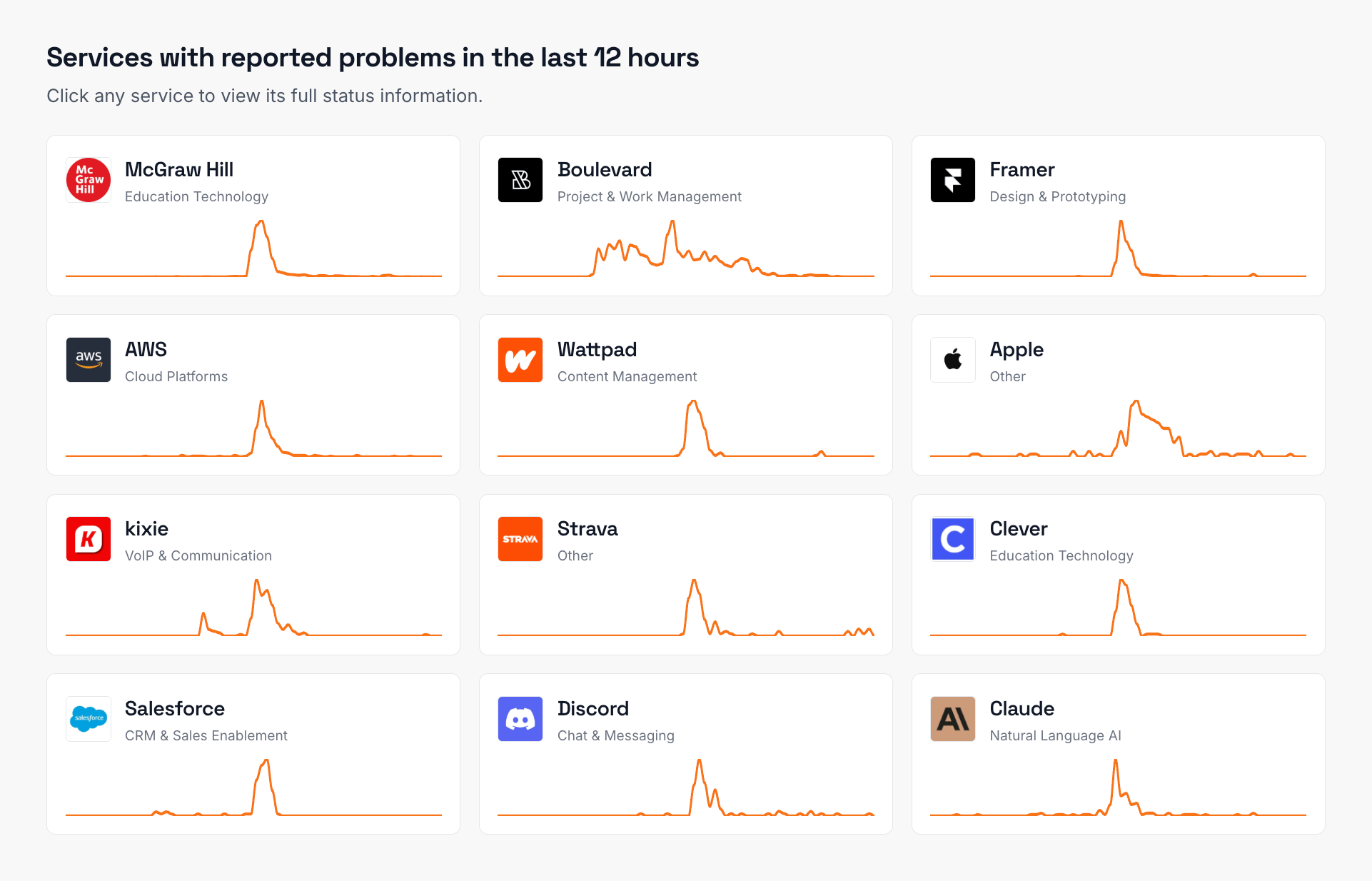

Monitoring is the process of collecting and analyzing data from operations and infrastructure. It helps teams track how systems are running and identify issues that need to be fixed. For example, monitoring the responsiveness of a service can involve measuring network factors like connectivity and latency. A status page can display the real-time performance of these services, keeping users informed about issues such as connectivity problems or latency spikes. This is a key part of network monitoring and application monitoring.

The value of monitoring comes from its ability to detect problems early and analyze long-term trends. By watching key metrics over time, teams can understand how their systems are performing, how demand is growing, and how resources are being used. This makes monitoring crucial for improving system reliability and planning ahead.

What Monitoring Tracks in a System

Monitoring focuses on tracking various metrics that show how well a system is working. These include:

- Latency (how long it takes to respond to a request)

- CPU and memory usage

- System uptime

- Error rates and response times

When a metric crosses a limit, the system notifies operators so they can take action. These alerts are often shown on dashboards for easy visualization. Monitoring works best when you're looking for known issues, such as performance drops, resource overuse, or availability problems. It helps teams maintain system stability and plan ahead by revealing usage trends and potential bottlenecks.
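The threshold behavior described above can be sketched in a few lines. This is a simplified illustration, not how any particular monitoring tool works; the metric names and limits are made-up sample values, and `check_thresholds` is a hypothetical helper.

```python
def check_thresholds(metrics, thresholds):
    """Return an alert message for every metric that is above its limit."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is not None and value > limit:
            alerts.append(f"{name}={value} exceeds limit {limit}")
    return alerts


# Sample readings and limits (assumed values for illustration).
current = {"cpu_percent": 93.0, "memory_percent": 61.0, "latency_ms": 480.0}
limits = {"cpu_percent": 90.0, "memory_percent": 85.0, "latency_ms": 500.0}

alerts = check_thresholds(current, limits)
for alert in alerts:
    print("ALERT:", alert)
```

Note that this only catches conditions someone thought to define a limit for, which is why monitoring is best suited to known issues.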

Advantages and Disadvantages of Monitoring

Advantages:

- Detects problems early through availability monitoring and alert thresholds, which reduces downtime.

- Supports performance tuning by helping teams find and fix performance issues, making the most of available resources.

- Contributes to stability by ensuring systems stay within safe limits, an essential part of effective monitoring.

Disadvantages:

- May not provide enough context to fully understand complex systems or the cause of a failure.

- Too many alerts can lead to alert fatigue, especially if they include false positives or non-critical issues.

- May lack support for newer environments, limiting insight into fast-changing systems.
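One common way to reduce alert fatigue from short-lived spikes is to alert only when a limit is breached several times in a row. The sketch below shows that idea under simple assumptions; `sustained_breach` and its `consecutive` parameter are hypothetical names for this example.

```python
def sustained_breach(values, limit, consecutive=3):
    """Return True only if the last `consecutive` samples are all above the limit."""
    if len(values) < consecutive:
        return False
    return all(v > limit for v in values[-consecutive:])


# A single spike does not trigger an alert...
print(sustained_breach([50, 95, 60, 70], limit=90))
# ...but three high readings in a row do.
print(sustained_breach([50, 91, 95, 99], limit=90))
```

The cost of this approach is slower detection, since a real problem is reported only after it has persisted for several samples.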

While monitoring offers strong support for stability and performance, it works best when paired with observability, which offers deeper insights into system behavior.

What Is Observability?

Observability is the ability to understand the internal state of a system by analyzing external outputs like logs, metrics, and traces. It goes beyond monitoring by helping teams not only detect a problem but also find out why it happened and how to fix it. This deeper view is essential in today's distributed systems, where apps are built from many moving parts across different environments and often depend on external services. Observability allows teams to identify issues proactively and get a full picture of how different components are connected. Instead of guessing, they can explore data in real time and trace problems to the root cause. This makes modern observability a powerful approach for maintaining performance, stability, and system reliability.

What Makes a System Observable

A system becomes observable when it produces enough useful output, such as logs, metrics, and traces, to reveal what's going on inside. These three data types are often called the three pillars of observability. Together, they help teams understand how services interact and how data flows between them. In microservices and distributed systems, direct inspection isn't always possible. That's why observability is important; it gives teams insights into system behavior without needing full access to every component. For instance, during an API outage, observability helps teams quickly identify and trace the problem, ensuring a faster resolution. The goal is to uncover problems early, diagnose them quickly, and prevent them from happening again.

Advantages and Disadvantages of Observability

Advantages:

- Provides deeper insights into how systems behave, making it easier to fix issues at their source.

- Supports faster decision-making and adaptive problem-solving.

- Works with a wide range of data from multiple sources, which leads to a more complete view of the system.

- Helps improve the end-user experience by resolving problems before users even notice them.

Disadvantages:

- Setting up a proper observability system can be challenging. It often requires custom instrumentation and careful planning.

- Collecting and processing data from different environments can become costly, especially at scale.

- Without the right observability tools, teams may struggle to turn raw data into clear insights.

Observability is not just about having more data. It's about having the right data and knowing how to use it.

The Three Pillars of Observability

To achieve proper observability, a system must collect and analyze three core types of data: metrics, logs, and traces. These are often referred to as the three pillars of observability. Each plays a different role in helping teams understand how a system performs, what went wrong, and why it happened. When used together, these data types give a complete view of system behavior and performance, especially in distributed systems.

Metrics: Measuring System Health Over Time

Metrics are numbers that track the health of a system. Common metrics include memory usage, CPU load, request count, and error rates. These data points help teams monitor performance changes over time. Monitoring tools use them to set alerts when performance goes above or below a safe threshold. Metrics are key for performance monitoring and long-term planning. Metrics are easy to store and visualize, making them one of the most used parts of any monitoring solution.
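As a small worked example, the following sketch derives three of the metrics mentioned above (request count, error rate, and average latency) from a handful of request records. The records are invented sample data, and treating status codes of 500 and above as errors is an assumption of this example.

```python
from statistics import mean

# Sample request records (made-up data for illustration).
requests = [
    {"status": 200, "latency_ms": 120},
    {"status": 500, "latency_ms": 900},
    {"status": 200, "latency_ms": 80},
    {"status": 200, "latency_ms": 100},
]

request_count = len(requests)
# Count server-side failures (status 500 and above) as errors.
error_rate = sum(1 for r in requests if r["status"] >= 500) / request_count
avg_latency_ms = mean(r["latency_ms"] for r in requests)

print(f"requests={request_count} error_rate={error_rate:.0%} avg_latency={avg_latency_ms}ms")
```

Because each metric is a single number per time window, it is cheap to store and easy to plot, which matches why metrics are so widely used.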

Logs: Recording What Happened and When

Logs are text-based records that describe events happening inside a system. They show when something occurred, what action was taken, and what the result was. Logs help teams understand the full story behind a problem. They provide insights by offering details that metrics alone can't show. Logs are especially useful for tracing user actions or reviewing errors. Collecting logs from various sources gives a deeper view of how systems behave during failures or unexpected changes in complex environments.
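To show what a log record with "when, what, and result" can look like in practice, here is a minimal sketch that uses Python's standard `logging` module to emit JSON-formatted entries. The `JsonFormatter` class, the `checkout` logger name, and the order ID are hypothetical; the in-memory stream stands in for wherever logs would actually be shipped.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON object."""

    def format(self, record):
        return json.dumps({
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


# An in-memory stream stands in for a real log destination.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("checkout")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # keep the example output out of the root logger

logger.error("payment failed for order %s", "A-1001")

entry = json.loads(stream.getvalue())
print(entry["level"], entry["message"])
```

Structured entries like this are easier to search and aggregate across many sources than free-form text.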

Traces: Understanding How Components Interact

Traces follow the path of a request as it moves through different services. They show which components were involved, how long each step took, and where delays or failures occurred. They're used in root cause analysis, helping teams pinpoint which part of the system caused a slowdown or error. Traces are also helpful for performance optimization, revealing bottlenecks and improving overall system flow.
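The idea of finding a bottleneck from a trace can be sketched with a simplified span model. This is an illustration only: the `Span` fields, the service names, and the durations are assumptions, and real tracing systems record considerably more detail.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    """One step of a request inside a single service."""
    name: str
    service: str
    duration_ms: float
    parent: Optional[str] = None  # name of the parent span, None for the root


# One request passing through three services (sample data).
trace = [
    Span("GET /checkout", "gateway", 450.0),
    Span("validate cart", "cart", 40.0, parent="GET /checkout"),
    Span("charge card", "payments", 380.0, parent="GET /checkout"),
]


def slowest_child(spans):
    """Return the non-root span that took the longest."""
    children = [s for s in spans if s.parent is not None]
    return max(children, key=lambda s: s.duration_ms)


bottleneck = slowest_child(trace)
print(f"slowest step: {bottleneck.name} in {bottleneck.service} ({bottleneck.duration_ms} ms)")
```

Here most of the 450 ms request is spent in the payments step, which is where an investigation would start.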

Observability vs Monitoring vs Telemetry: What's the Difference?

Though often used together, observability, monitoring, and telemetry serve different purposes in managing system health. Each plays a specific role and supports the others in forming a complete approach to system management. Understanding the differences between observability, monitoring, and telemetry helps teams choose the right tools and design better strategies for performance, stability, and issue resolution.

Key Differences in Purpose, Scope, and Proactivity

| Aspect | Telemetry | Monitoring | Observability |
|---|---|---|---|
| Purpose | Telemetry gathers data from various sources and sends it to a central system. | Monitoring focuses on tracking system performance using predefined metrics. | Observability provides deeper insights into system behavior and uncovers unknown issues. |
| Data Types | Metrics, logs, traces | Mostly metrics | Combines metrics, logs, and traces |
| Tools | Telemetry tools like OpenTelemetry, Fluentd | Monitoring tools like Prometheus, Zabbix | Observability platforms like Grafana, Honeycomb |
| Questions Answered | What data is being collected and from where? | What is happening in the system right now? | Why is the system behaving this way? |
| Reactive vs Proactive | Foundational | Mostly reactive | Highly proactive and diagnostic |

To simplify:

- Telemetry collects the raw data

- Monitoring uses that data to detect known problems

- Observability offers the tools to explore and understand the root cause

This layered approach is how teams achieve effective monitoring and true observability in modern, distributed systems.

Key Questions Each Concept Answers: How They Work Together

One of the easiest ways to understand observability, monitoring, and telemetry is by looking at the questions each concept helps answer. In modern IT environments, these questions guide teams in detecting, investigating, and solving system issues.

1) Telemetry: How Is the Data Gathered?

Telemetry involves the automatic collection and transmission of data from systems, applications, and devices to a central location. It works silently in the background, sending data from various sources such as servers, containers, and cloud services. This data includes metrics, logs, and traces. It gives developers the raw material needed for monitoring tools and observability platforms to work. Without telemetry, systems would have no way of sharing what's really happening under the hood.

2) Monitoring: What Is Happening Right Now?

Monitoring focuses on tracking real-time system metrics and alerts teams when something goes wrong. It answers questions like:

- Is the system up?

- Are response times slowing down?

- Is CPU or memory usage too high?

By analyzing telemetry data to track predefined thresholds, monitoring helps teams respond quickly to known issues and keep services stable. It's a key part of availability monitoring and maintaining the state of a system.

3) Observability: Why Is the System Behaving This Way?

While monitoring tells you that something is wrong, observability provides deeper insights into system behavior and performance. It helps answer questions like:

- Why did the system slow down?

- Where did the error originate?

- What chain of events caused the issue?

Observability is the ability to explore data from logs, metrics, and traces to find root causes, especially in distributed systems where failures aren't always obvious. It lets teams analyze how the system behaves and spot both recurring patterns and outliers. This makes observability a powerful tool for reducing downtime, improving decision-making, and enhancing system reliability.

Workflow of These Three: Telemetry, Monitoring, & Observability

Here's how these three work together in a simple flow:

1) Telemetry collects and sends data from various sources, like servers, applications, and cloud services.

2) Monitoring uses this data to track key metrics and alert teams when something goes wrong.

3) Observability provides deeper insights by analyzing logs, metrics, and traces to find the root cause of the problem.

This flow helps teams move from data collection to issue detection, and then to full diagnosis and resolution.
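The three-step flow can be sketched end to end as a toy pipeline. Everything below is a simplification under stated assumptions: the function names `collect`, `monitor`, and `investigate` are hypothetical, the telemetry is hard-coded sample data, and the 5% error-rate limit is an arbitrary example value.

```python
def collect():
    """Telemetry: gather raw signals (hard-coded sample data here)."""
    return {
        "metrics": {"error_rate": 0.12},
        "logs": [
            {"service": "payments", "level": "ERROR", "message": "card processor timeout"},
            {"service": "cart", "level": "INFO", "message": "cart loaded"},
        ],
    }


def monitor(telemetry, max_error_rate=0.05):
    """Monitoring: compare a known metric against a predefined threshold."""
    return telemetry["metrics"]["error_rate"] > max_error_rate


def investigate(telemetry):
    """Observability: explore the logs to narrow down where the errors come from."""
    return [log for log in telemetry["logs"] if log["level"] == "ERROR"]


data = collect()
suspects = investigate(data) if monitor(data) else []

for log in suspects:
    print(f"{log['service']}: {log['message']}")
```

Monitoring only reports that the error rate is too high; the investigation step is what points to the payments service as the likely source.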

Conclusion

The move toward observability shows how systems today are more complex than ever. It reflects a deeper need for smarter tools that can not only detect problems but help teams truly understand them. Telemetry, monitoring, and observability each play a part in this. Telemetry collects the raw data. Monitoring checks for issues using that data. Observability digs deeper to explain why the issue happened. Together, they give a complete picture of your system's health. If you're aiming for better visibility and faster response times, now is the right time to act. Contact us today and explore how IsDown's monitoring services can help your team stay informed, reduce downtime, and take control of incidents before they impact your users.

Frequently Asked Questions

What Is Observability in Simple Terms?

Observability is the ability to understand what's happening inside a system by analyzing data it produces, like logs, metrics, and traces. Instead of only knowing that something is wrong, observability helps you find out why it's happening. It goes beyond monitoring by offering a full view of how the system works, even in complex environments.

What Is the Difference Between Telemetry and Metrics?

Telemetry data refers to all types of information automatically collected and sent from a system, including metrics, logs, and traces. Metrics are just one type of telemetry, numbers that show system performance, like CPU usage or error rates. So, telemetry provides data, and metrics are one piece of that bigger picture.

What Is the Difference Between Observability and Telemetry?

Telemetry collects and transmits data from your systems, while observability is the ability to use that data to understand what's going wrong. Think of telemetry as the source of raw data, and observability as the process of analyzing that data to find deeper answers. So, telemetry provides data, and observability works to make sense of it.

What Is the Difference Between Observability and Monitoring?

Monitoring is the traditional approach that tracks known issues using alerts and dashboards. It tells you when something is wrong. Observability goes beyond monitoring by helping you understand why something is wrong, even if it's a new or unknown issue. Monitoring is more about detection; observability is about investigation.

Nuno Tomas

Founder of IsDown
