Is Coveralls Down?

Coveralls status updated a few minutes ago

What is the Coveralls status right now?

Coveralls is having a minor outage

Stop being the last to know when Coveralls goes down

14-day trial · No credit card required · 5-min setup

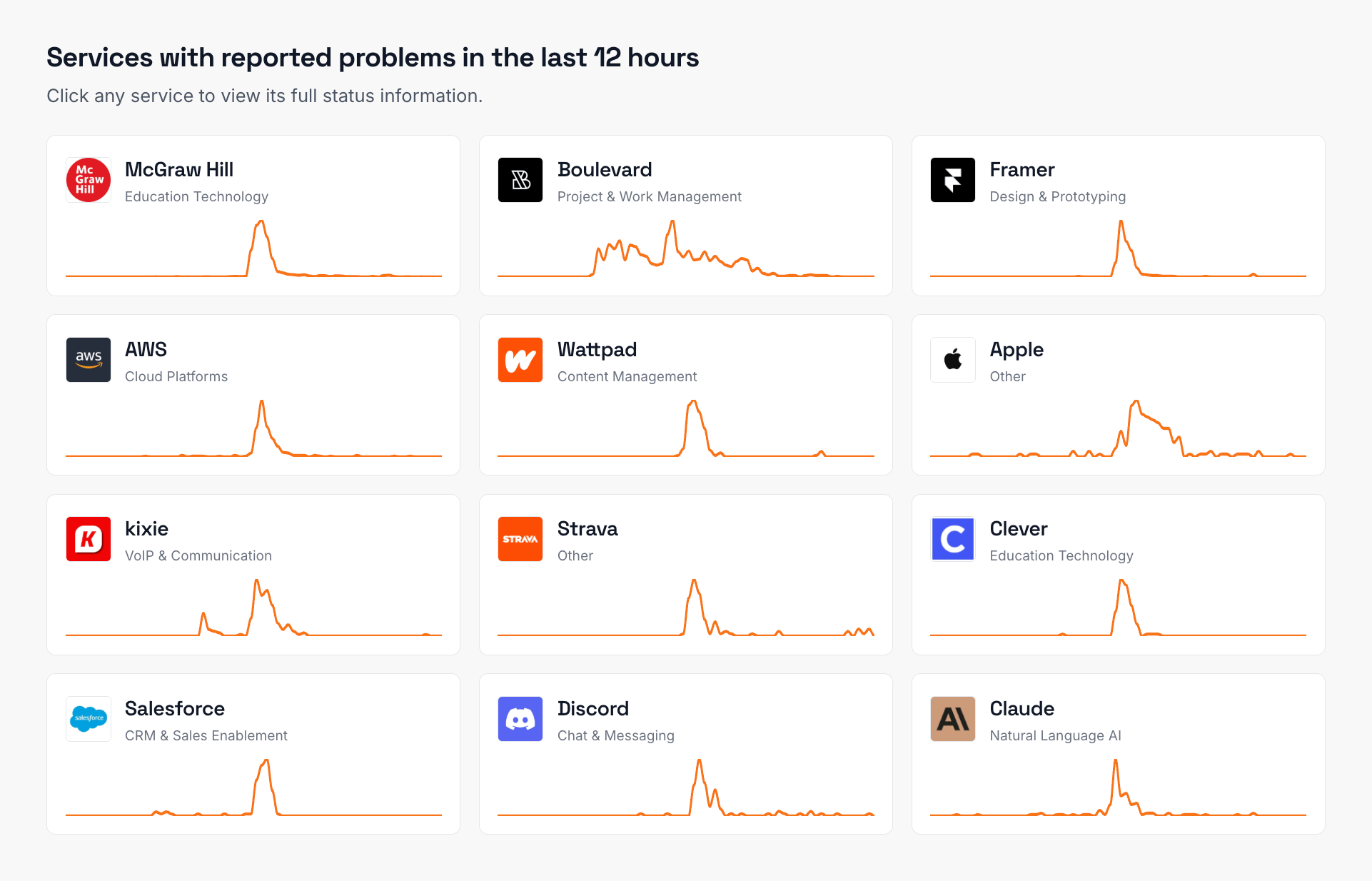

Coveralls service health over the last 24 hours

This chart shows the number of user-reported issues for Coveralls service health over the past 24 hours, grouped into 20-minute intervals. It's normal to see occasional reports, which may be due to individual user issues rather than a broader problem.
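As a rough illustration of the grouping described above, the sketch below counts report timestamps per 20-minute interval over a 24-hour window. The function names and the sample timestamps are hypothetical and only show the bucketing idea; this is not IsDown's actual implementation.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone

BUCKET_MINUTES = 20  # interval size used by the chart


def bucket_start(ts: datetime) -> datetime:
    """Round a timestamp down to the start of its 20-minute interval."""
    minute = ts.minute - ts.minute % BUCKET_MINUTES
    return ts.replace(minute=minute, second=0, microsecond=0)


def count_reports(reports: list[datetime], now: datetime) -> dict[datetime, int]:
    """Count reports per bucket, keeping only the 24 hours before `now`."""
    cutoff = now - timedelta(hours=24)
    counts = Counter(bucket_start(ts) for ts in reports if cutoff <= ts <= now)
    return dict(sorted(counts.items()))


# Hypothetical report timestamps, for illustration only.
now = datetime(2026, 2, 25, 12, 0, tzinfo=timezone.utc)
reports = [
    datetime(2026, 2, 25, 11, 5, tzinfo=timezone.utc),
    datetime(2026, 2, 25, 11, 19, tzinfo=timezone.utc),
    datetime(2026, 2, 25, 11, 41, tzinfo=timezone.utc),
    datetime(2026, 2, 23, 9, 0, tzinfo=timezone.utc),  # older than 24 hours, ignored
]
histogram = count_reports(reports, now)
```

With this sample data, the two reports at 11:05 and 11:19 fall into the 11:00 bucket and the 11:41 report falls into the 11:40 bucket.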

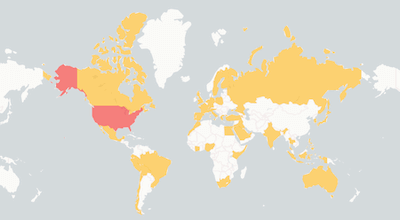

Coveralls Outage Map

See where users report Coveralls is down. The map shows recent Coveralls outages from around the world.

Monitor Coveralls status and outages

- Monitor all your external dependencies in one place

- Get instant alerts when outages are detected

- Be the first to know if service is down

- Show real-time status on a private or public status page

- Keep your team informed

Coveralls Downtime Health — Last 90 Days

In the last 90 days, Coveralls had 3 incidents with a median duration of 1 hour 42 minutes.

Incidents

Major Outages

Minor Incidents

Median Resolution

Recent Coveralls outages detected by IsDown

Full incident reports for recent Coveralls outages, including timelines, affected components, and resolution details.

| Title | Started At | Duration |
|---|---|---|
| We are currently investigating this issue. | Feb 24, 2026 at 10:52 PM UTC | Ongoing |
| We are continuing to experience elevated latency in APAC and EU. | Dec 19, 2025 at 02:34 PM UTC | about 1 hour |
| We had an incident overnight that entailed several server outages affecting APAC and EU users. Th... | Dec 18, 2025 at 02:51 PM UTC | about 2 hours |
| We received reports from EU customers of elevated latency and have resolved the cause. We will be... | Nov 17, 2025 at 04:35 PM UTC | about 4 hours |
| We are currently investigating this issue. | Nov 10, 2025 at 04:14 PM UTC | about 3 hours |
| We received reports of 504 Gateway Timeouts from our Web Servers (coverage report uploads) betwee... | Oct 24, 2025 at 06:35 PM UTC | about 3 hours |
| We have received some reports of 504 errors this morning. We are monitoring to avoid further occu... | Oct 13, 2025 at 06:02 PM UTC | about 6 hours |
| We've received results of 504 Timeout errors from 7am PDT through 8:15am PDT. We have addressed t... | Oct 10, 2025 at 03:23 PM UTC | about 4 hours |
| All systems operational. During our US overnight hours (PDT / GMT-7), we received additional rep... | Sep 26, 2025 at 03:28 PM UTC | 3 days |
| We’re currently seeing elevated reports of 504 Timeout errors affecting some customers on a subse... | Sep 03, 2025 at 06:00 PM UTC | 21 days |


Coveralls Components Status

Check if any Coveralls component is down. View the current status of 7 services and regions.

| Component | Status |
|---|---|
| Coveralls.io API | OK |
| Coveralls.io Web | OK |
| GitHub | OK |
| Pusher Presence channels | OK |
| Pusher WebSocket client API | OK |
| Stripe API | OK |
| Travis CI API | OK |

About Coveralls Status and Outage Monitoring

IsDown has monitored Coveralls continuously since April 2020, tracking this Code Quality & Testing service for 6 years. Over that time, we've documented 118 outages and incidents, averaging 1.7 per month. When Coveralls goes down, incidents typically resolve within 101 minutes, based on historical data.
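The monthly average quoted above can be checked with simple arithmetic. The sketch below assumes the count runs from April 2020 through February 2026 (the month of the most recent incident listed on this page); that end date is an assumption, not a figure stated by IsDown.

```python
from datetime import date


def months_between(start: date, end: date) -> int:
    """Number of whole calendar months from `start` to `end`."""
    return (end.year - start.year) * 12 + (end.month - start.month)


start = date(2020, 4, 1)   # "since April 2020"
as_of = date(2026, 2, 1)   # assumption: month of the latest incident listed
total_incidents = 118      # figure quoted on this page

months = months_between(start, as_of)      # 70 months
incidents_per_month = total_incidents / months
print(f"{incidents_per_month:.1f} incidents per month")  # prints "1.7 incidents per month"
```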

We monitor Coveralls's official status page across 7 components. IsDown interprets Coveralls statuses (operational, degraded performance, partial outage, and major outage) to deliver precise health metrics and filter alerts by the components you actually use.
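One way to picture how the four statuses could be normalized into a single health value is to rank them and take the worst one across components. The sketch below is illustrative only: the `Health` enum, the ranking order, and the sample component statuses are assumptions, not IsDown's actual data model.

```python
from enum import IntEnum


class Health(IntEnum):
    """Statuses named on this page, ranked from best to worst (assumed order)."""
    OPERATIONAL = 0
    DEGRADED_PERFORMANCE = 1
    PARTIAL_OUTAGE = 2
    MAJOR_OUTAGE = 3


def parse_status(raw: str) -> Health:
    """Map a status string such as 'partial outage' to a Health value."""
    return Health[raw.strip().upper().replace(" ", "_")]


def overall_health(component_statuses: dict[str, str]) -> Health:
    """Overall health is the worst status reported by any component."""
    return max(
        (parse_status(status) for status in component_statuses.values()),
        default=Health.OPERATIONAL,
    )


# Hypothetical statuses for two of the listed components.
sample = {
    "Coveralls.io API": "degraded performance",
    "Coveralls.io Web": "operational",
}
worst = overall_health(sample)
```

Ranking the statuses makes it straightforward to compare components and to apply a severity threshold to alerts.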

To check if Coveralls is down, IsDown combines official status data with user reports for early detection. Vendors often delay acknowledging problems, so user reports help us alert you before the official announcement.

Engineering and operations teams rely on IsDown to track Coveralls status and receive verified outage alerts through Slack, Teams, PagerDuty, or 20+ other integrations.

How IsDown Monitors Coveralls

IsDown checks Coveralls's status page every few minutes, across all 7 components. We combine official status data with user reports to detect when Coveralls is down, often before the vendor announces it.
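The idea of combining the two signals can be sketched as a small decision function: an official non-operational status counts as a confirmed incident, while a spike in user reports alone counts as a suspected one. The `Signal` class, the threshold value, and the labels below are hypothetical; the actual detection rules are not described on this page.

```python
from dataclasses import dataclass

REPORT_THRESHOLD = 10  # hypothetical number of recent reports that suggests a problem


@dataclass
class Signal:
    official_status: str       # e.g. "operational" or "partial outage"
    recent_user_reports: int   # reports received in the current window


def assess(signal: Signal, threshold: int = REPORT_THRESHOLD) -> str:
    """Return 'confirmed', 'suspected', or 'ok' for the given signals."""
    if signal.official_status != "operational":
        return "confirmed"   # the vendor has acknowledged a problem
    if signal.recent_user_reports >= threshold:
        return "suspected"   # users report issues before any official update
    return "ok"
```

For example, `assess(Signal("operational", 25))` returns `"suspected"`, which corresponds to the early-detection case where users notice a problem before the vendor announces it.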

When Coveralls status changes, IsDown sends alerts to your preferred channels. Filter by severity to skip noise and focus on outages that affect your business.

What you get when monitoring Coveralls with IsDown

Track Coveralls incidents and downtimes by severity

IsDown checks Coveralls's official status page for major and minor outages. A major outage is when Coveralls experiences a critical issue that severely affects one or more services or regions. A minor incident is when Coveralls experiences a small issue affecting a small percentage of its customers' applications, such as degraded performance of an application. The moment we detect a Coveralls outage, we send you an alert and update your dashboard and status page.

Get alerted as soon as users report problems with Coveralls

Coveralls and other vendors don't always report outages on time. IsDown collects user reports to provide early detection of outages. This way, even without an official status update, you can stay ahead of possible problems.

All the details of Coveralls outages and downtimes

IsDown collects the information published on Coveralls's status page to provide context for each outage. When available, we gather the title, description, time of the outage, status, and outage updates. We also record the affected services and regions, which we use to filter notifications down to the outages that impact your business.

Prepare for upcoming Coveralls maintenance events

Coveralls publishes scheduled maintenance events on its status page. IsDown collects the information for each event and creates a feed you can follow, so you are not surprised by planned downtime or related problems. We also include the feed in our weekly report, which lists the upcoming maintenance events.

Only get alerted on the Coveralls outages that impact your business

IsDown monitors Coveralls and all 7 of its components that can be affected by an outage. You can filter notifications and status page alerts based on the components you care about. For example, you can choose which components or regions affect your business and filter out all other outages. This helps your team avoid alert fatigue.
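Component-based filtering of this kind amounts to keeping only the incidents that touch at least one component you watch. The sketch below uses component names from the table on this page, but the `Incident` class and the sample incidents are hypothetical and only illustrate the filtering idea.

```python
from dataclasses import dataclass, field


@dataclass
class Incident:
    title: str
    components: set[str] = field(default_factory=set)


def relevant(incidents: list[Incident], watched: set[str]) -> list[Incident]:
    """Keep only incidents that affect at least one watched component."""
    return [incident for incident in incidents if incident.components & watched]


# Components this hypothetical team depends on.
watched = {"Coveralls.io API", "GitHub"}

# Hypothetical incidents, for illustration only.
incidents = [
    Incident("Hypothetical API latency", {"Coveralls.io API"}),
    Incident("Hypothetical billing issue", {"Stripe API"}),
]
to_alert = relevant(incidents, watched)
```

Here only the first incident would trigger an alert, because the second one affects a component outside the watched set.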

How IsDown Works

Get started in minutes. Monitor all your critical services from one place.

We monitor official status pages

IsDown checks the status of over 6,020 services every few minutes. We aggregate and normalize official status information, and we collect crowdsourced reports for early outage detection.

You get notified instantly

When we detect an incident or status change, you receive an alert immediately. Customize notifications by service, component, or severity to avoid alert fatigue.

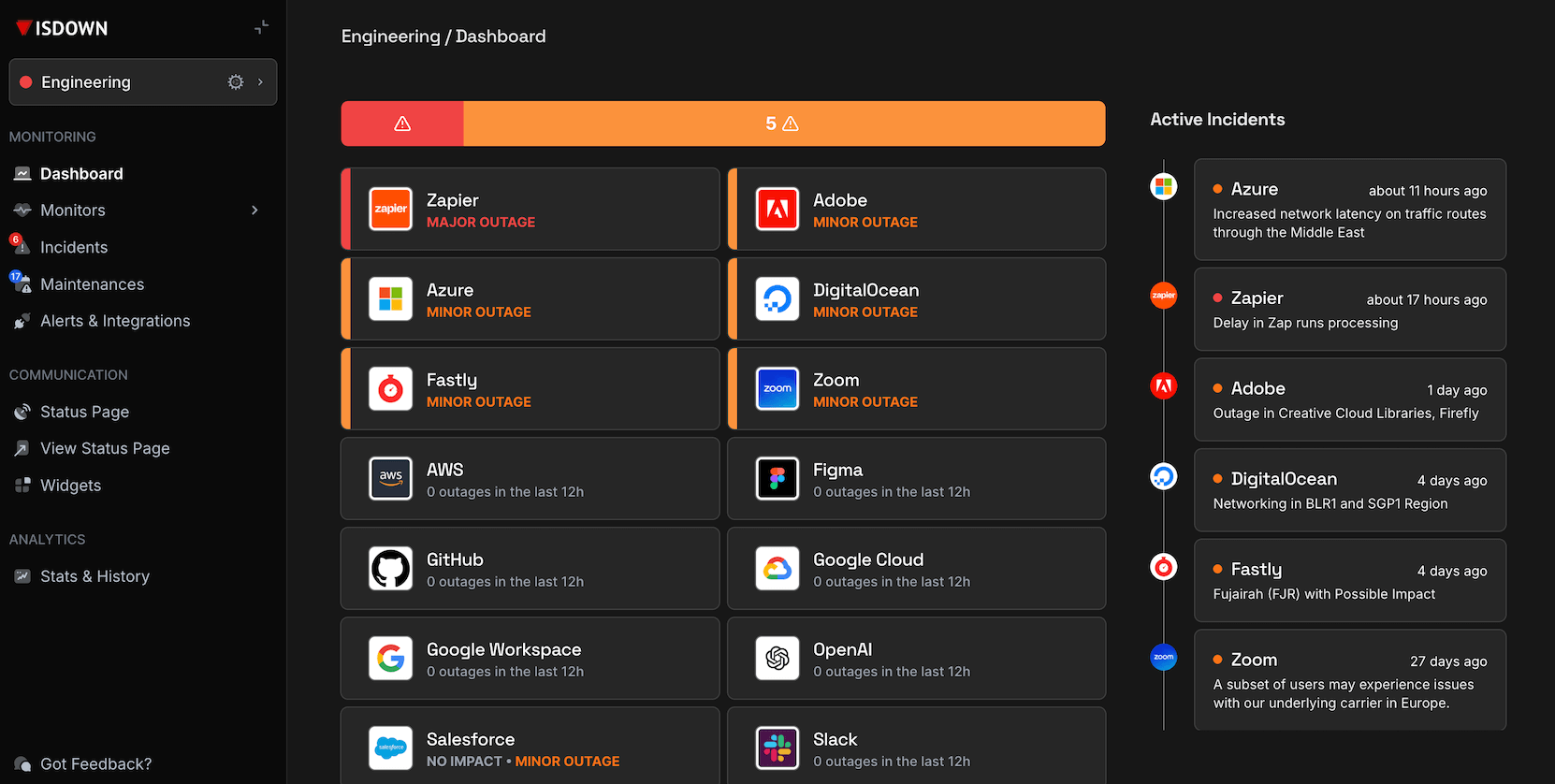

Everything in one dashboard

View all your services in a unified dashboard or on a public or private status page. Send alerts to Slack, Teams, PagerDuty, Datadog, and 20+ other tools your team already uses.


Coveralls Downdetector vs IsDown

Looking for Coveralls outage alerts like Downdetector? IsDown monitors Coveralls's official status page for verified incident data, not just user reports. Know exactly when Coveralls is down, which components are affected, and when service health is restored. Here's why official Coveralls status monitoring beats crowdsourced detection:

| Features | IsDown | Downdetector |
|---|---|---|
| Official Coveralls status page monitoring. Know when Coveralls is down with real-time incident details. | | |
| Monitor 6,020+ services including Coveralls in a single dashboard. | | |
| Instant Coveralls outage alerts sent to Slack, Teams, PagerDuty, and more. | | |
| Combined monitoring: Coveralls official status plus user reports for early outage detection. | | |
| Maintenance feed for Coveralls | | |
| Granular alert filtering by Coveralls components and regions. | | |


Frequently Asked Questions

Is Coveralls down today?

Coveralls isn't fully down, but it is currently reporting a minor outage. You can check the Coveralls status and incident details at the top of the page. IsDown checks the official Coveralls status page every few minutes. In the last 24 hours, there were 0 outages reported.

What is the current Coveralls status?

Coveralls is currently having a minor outage. You can check the Coveralls status and incident details at the top of the page. The status is updated in near real time, and you can see the latest outages and issues affecting customers.

Is there a Coveralls outage now?

Yes, there is an ongoing outage. You can check the details at the top of the page.

Is Coveralls down today or just slow?

Coveralls might be slow because there is an ongoing outage. You can check the details at the top of the page.

How are Coveralls outages detected?

IsDown checks the official Coveralls status page every few minutes. We also get reports from users like you. If there are enough reports about an outage, we'll show it at the top of the page.

Is Coveralls having an outage right now?

The most recent Coveralls outage started on February 24, 2026, with the title "Service outage (RESTORED, MONITORING)".

How often does Coveralls go down?

IsDown has tracked 118 Coveralls incidents since April 2020. When Coveralls goes down, incidents typically resolve within 101 minutes.

Is Coveralls down for everyone or just me?

Check the Coveralls status at the top of this page. IsDown combines official status page data with user reports to show whether Coveralls is down for everyone or if the issue is on your end.

What Coveralls components does IsDown monitor?

IsDown monitors 7 Coveralls components in real time, tracking the official status page for outages, degraded performance, and scheduled maintenance.

How can I check if Coveralls is down?

- Subscribe (if possible) to updates on the official status page.

- Create an IsDown account. Start monitoring Coveralls and get real-time alerts when Coveralls has outages.

Why use IsDown to monitor Coveralls instead of the official status page?

IsDown is a status page aggregator: we aggregate the status of multiple cloud services, so you can monitor Coveralls alongside all the other services that impact your business. Get a dashboard with the health of all services and status updates. Set up notifications via Slack, Datadog, PagerDuty, and more for when a service you monitor has issues or when maintenance is scheduled.

How does IsDown compare to Downdetector when monitoring Coveralls?

IsDown and Downdetector both help users determine if Coveralls is having problems. The big difference is that IsDown is a status page aggregator. IsDown monitors a service's official status page to give our customers a more reliable source of information than user reports alone. This integration allows us to provide more details about Coveralls outages, such as the incident title, description, updates, and the affected parts of the service. Additionally, users can create internal status pages and set up notifications for all their third-party services.


Monitor Coveralls status and get alerts when it's down

14-day free trial · No credit card required · No code required