AWS CloudFront Outage (Feb 2026): Timeline, Cascade, and Lessons

TL;DR: On February 10, 2026, AWS CloudFront experienced DNS resolution failures that cascaded across 8 AWS services and impacted 20+ downstream platforms — from Salesforce and Adobe to Discord and Claude. The acute DNS issue was resolved within about an hour; full recovery of change propagation took until ~4:00 AM UTC. IsDown users reported problems 23 minutes before AWS's first status update, giving teams using crowd-sourced monitoring a head start on their incident response.

What Happened

At approximately 9:15 PM UTC on February 10, 2026, Amazon CloudFront began returning NXDOMAIN responses for DNS queries against specific distributions. In practical terms: DNS was telling users that services behind those distributions simply didn't exist.

The root cause was a DNS resolution failure within CloudFront's infrastructure that quickly spread to eight interconnected AWS services:

| AWS Service | Impact |

|---|---|

| Amazon CloudFront | DNS failures (NXDOMAIN), followed by change propagation delays |

| Amazon Route 53 | DNS resolution failures |

| Amazon API Gateway | Increased error rates and latencies |

| AWS WAF | Errors due to CloudFront dependency |

| AWS AppSync | Increased error rates |

| Amazon Pinpoint | Delivery failures |

| AWS Transfer Family | Increased errors |

| Amazon VPC Lattice | Connectivity issues |

Credit to AWS: their team identified the root cause quickly, and DNS resolution was largely restored within an hour. The longer tail — change propagation delays affecting new distributions, DNS records, and TLS certificate provisioning — took until approximately 4:00 AM UTC on February 11 to fully clear. Existing distributions continued serving traffic normally during that recovery window.

The Timeline

Here's how it unfolded:

| Time (UTC) | What Happened |

|---|---|

| ~8:52 PM | IsDown users start reporting AWS problems — 23 minutes before the official status update |

| 9:15 PM | AWS acknowledges: "DNS resolution failures for some specific CloudFront distributions" |

| 9:40 PM | Root cause confirmed — NXDOMAIN responses, mitigation underway across the fleet |

| 9:46 PM | Early recovery signs observed |

| 9:58 PM | Significant DNS recovery, but change propagation still delayed |

| 10:34 PM | Full DNS recovery confirmed. Propagation delays persist. |

| 11:06 PM | Continued mitigation. Cache invalidation confirmed unaffected. |

| 12:02 AM | Parallel mitigation paths underway — 2-3 hour recovery estimate |

| 1:01 AM | Striped mitigation in progress. Revised 2-hour estimate. |

| 2:06 AM | Recovery taking longer than expected. Another 2-hour estimate. |

| 3:06 AM | Configuration updates successful. ~60 minutes to full recovery. |

| ~4:00 AM | Fully resolved. |

AWS provided regular updates throughout the incident — roughly every 30-60 minutes — which is better communication than many vendors offer during outages. The challenge for downstream teams wasn't a lack of information from AWS. It was the 23-minute gap between when the problem started and when the first update appeared.

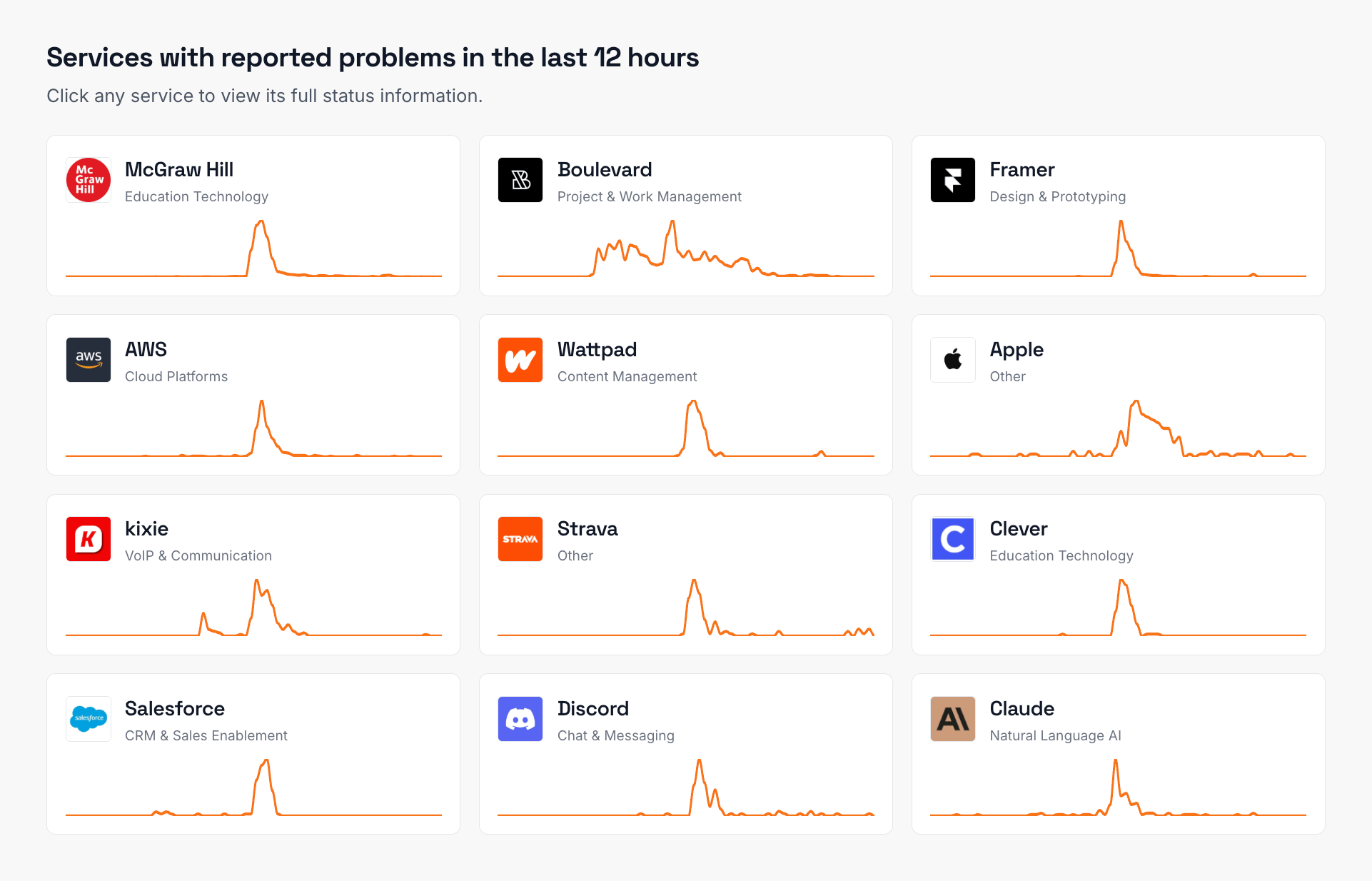

The Cascade: 20+ Services Impacted

This is what makes cloud infrastructure outages so tricky. AWS CloudFront isn't just a CDN — it's infrastructure that other infrastructure depends on. When CloudFront's DNS broke, services built on top of it felt the impact too.

IsDown tracked 20+ services that showed problems at the same time as the AWS incident:

| Category | Services With Concurrent Issues |

|---|---|

| Business & Enterprise | Salesforce, TechnologyOne, UKG, Kixie |

| Developer Tools | Framer, TestRail, Adobe |

| AI & Communication | Claude, Discord |

| Healthcare | DrFirst |

| Education | McGraw Hill, Clever, SchooLinks, Compass Education |

| Content & Social | Reddit, Wattpad |

| Other | Apple, Strava, Steam, Yoti |

Not all of these necessarily depend on CloudFront directly — some may rely on services that rely on CloudFront, creating a second or third layer of dependency. That's the nature of the modern cloud: cascading effects are the norm, not the exception. It's nobody's fault — it's the architecture.

Pro Tip: Categorize your vendor dependencies into tiers. Tier 1 (direct infrastructure: AWS, GCP, Cloudflare) should have the tightest monitoring — you want to know about issues within minutes, not when your customers start filing tickets.

Why 23 Minutes Matters

Twenty-three minutes doesn't sound like much. But in incident response, those are the most valuable minutes you have.

Here's what a typical response timeline looks like during a vendor outage:

- Detection — How quickly do you know there's a problem?

- Triage — Is it you or them?

- Communication — Do customers know what's happening?

- Mitigation — Can you fail over, cache, or degrade gracefully?

If your detection starts when a vendor publishes their first status update, you've lost time. Not because the vendor is slow — internal verification before public communication is responsible engineering. But your team doesn't need to wait for that verification.

With crowd-sourced detection — where thousands of users monitoring the same services surface anomalies collectively — your team gets a signal at 8:52 PM instead of 9:15 PM. That's 23 minutes to check your dashboards, confirm the impact on your services, and start communicating to your own customers before most teams are even aware something is off.

Key Insight: The fastest way to detect a vendor outage isn't to poll their status page — it's to listen to the collective signal from everyone else who depends on them too.

Lessons for Engineering Teams

1. Map Your CloudFront Dependencies

Many teams don't realize how much of their stack runs through CloudFront. If you serve assets, APIs, or entire applications via CloudFront distributions, a CloudFront DNS failure directly affects your availability.

Action item: Audit which of your services use CloudFront. Document them. Know what breaks when CloudFront has issues — before it happens.

2. Don't Monitor Through the Same Infrastructure You Depend On

This outage was a DNS failure — Route 53 and CloudFront DNS both had issues. If your monitoring also runs through Route 53 for DNS resolution, your monitoring may be blind to the very failure it should catch.

Action item: Use monitoring that checks from multiple providers and locations, not just the same infrastructure your services run on.

3. Plan for the Recovery Tail

The DNS issue resolved in about an hour. The change propagation recovery took several more hours. This is a common pattern — the acute issue resolves relatively fast, but full recovery takes longer as systems propagate changes and clear backlogs.

Action item: Your incident communication plan should account for extended recovery windows, not just the initial impact. Have templates ready for "the vendor has fixed the root cause but recovery is still in progress." Your customers will appreciate the transparency.

4. Monitor Your Dependencies, Not Just Your Infrastructure

If you're only monitoring your own servers, databases, and endpoints, you didn't know about this outage until it showed up in your error rates. Twenty services were affected because they all shared an upstream dependency.

IsDown monitors 5,850+ services and aggregates status from official pages and crowd-sourced reports. When AWS has issues, you know — often before the official status page is updated. You can route those alerts directly to Slack, PagerDuty, or your incident management tool.

5. Outages Happen — Preparation Beats Prevention

AWS runs some of the most reliable infrastructure on the planet, and they still have outages. Every cloud provider does. Every SaaS product does. The question isn't whether your vendors will have incidents — it's whether your team will know about it in minutes or in hours.

The teams that handled this outage well are the ones that had their dependency map documented, their runbooks ready, and their monitoring covering both their own infrastructure and their vendors.

The Bigger Picture: Cloud Concentration Risk

This outage illustrates cloud concentration risk in action. When a single provider's DNS layer has issues, the blast radius extends beyond their own services to every company built on top of them.

The services affected on February 10 span healthcare (DrFirst), education (McGraw Hill, Clever), HR (UKG), CRM (Salesforce), developer tools (TestRail, Adobe), and AI (Claude). One DNS failure in CloudFront, and they all felt it.

You don't need to go multi-cloud to manage this risk (that introduces its own complexity). But you do need visibility into your upstream dependencies so your team can respond quickly when — not if — the next incident happens.

Frequently Asked Questions

What caused the AWS outage on February 10, 2026?

A DNS resolution failure within Amazon CloudFront caused NXDOMAIN responses for specific CloudFront distributions. This propagated to 8 AWS services including Route 53, API Gateway, WAF, AppSync, Pinpoint, Transfer Family, and VPC Lattice. AWS identified the root cause quickly and restored DNS within about an hour. Change propagation delays took until approximately 4:00 AM UTC on February 11 to fully clear.

Which services were affected by the AWS CloudFront outage?

Beyond the 8 AWS services directly impacted, 20+ downstream platforms reported concurrent issues — including Salesforce, Discord, Claude, Adobe, McGraw Hill, UKG, and others across cloud, developer tools, education, healthcare, and communication categories.

How long did the AWS outage last?

The outage had two phases. The acute DNS resolution failures lasted approximately 1 hour (9:15 PM to ~10:34 PM UTC). The recovery phase for change propagation delays extended until approximately 4:00 AM UTC on February 11. During the recovery phase, existing distributions continued working — the delays affected new distributions, DNS changes, and TLS certificate provisioning.

How did IsDown detect the outage before AWS's status page?

IsDown aggregates data from official status pages and crowd-sourced user reports. User reports of AWS problems appeared on IsDown 23 minutes before AWS published their first official status update. This isn't a criticism of AWS — internal verification before public communication is responsible practice. Crowd-sourced monitoring simply provides an earlier signal by aggregating reports from thousands of users watching the same services.

How can I prepare my team for the next AWS outage?

Map your CloudFront and AWS dependencies, ensure your monitoring doesn't run solely through the infrastructure you're monitoring, prepare communication templates for multi-phase outages (acute incident + extended recovery), and set up monitoring that tracks your upstream vendors. Monitor your AWS dependencies with IsDown to get early alerts from both official status updates and crowd-sourced reports.

Is multi-cloud the solution to avoid AWS outage impact?

Multi-cloud can reduce single-provider risk, but it introduces significant complexity in operations, data consistency, and cost. For most teams, better monitoring and faster response is more practical than full multi-cloud redundancy. Know your dependencies, have a response plan, and make sure you hear about vendor issues as early as possible.

Nuno Tomas

Founder of IsDown

Nuno Tomas

Founder of IsDown

The Status Page Aggregator with Early Outage Detection

Unified vendor dashboard

Early Outage Detection

Stop the Support Flood

Related articles

Never again lose time looking in the wrong place

14-day free trial · No credit card required · No code required