Is Buttondown Down? Buttondown Status & Outages

Buttondown status updated based on recent crowdsourced reports

What is Buttondown's status right now?

Buttondown is working normally

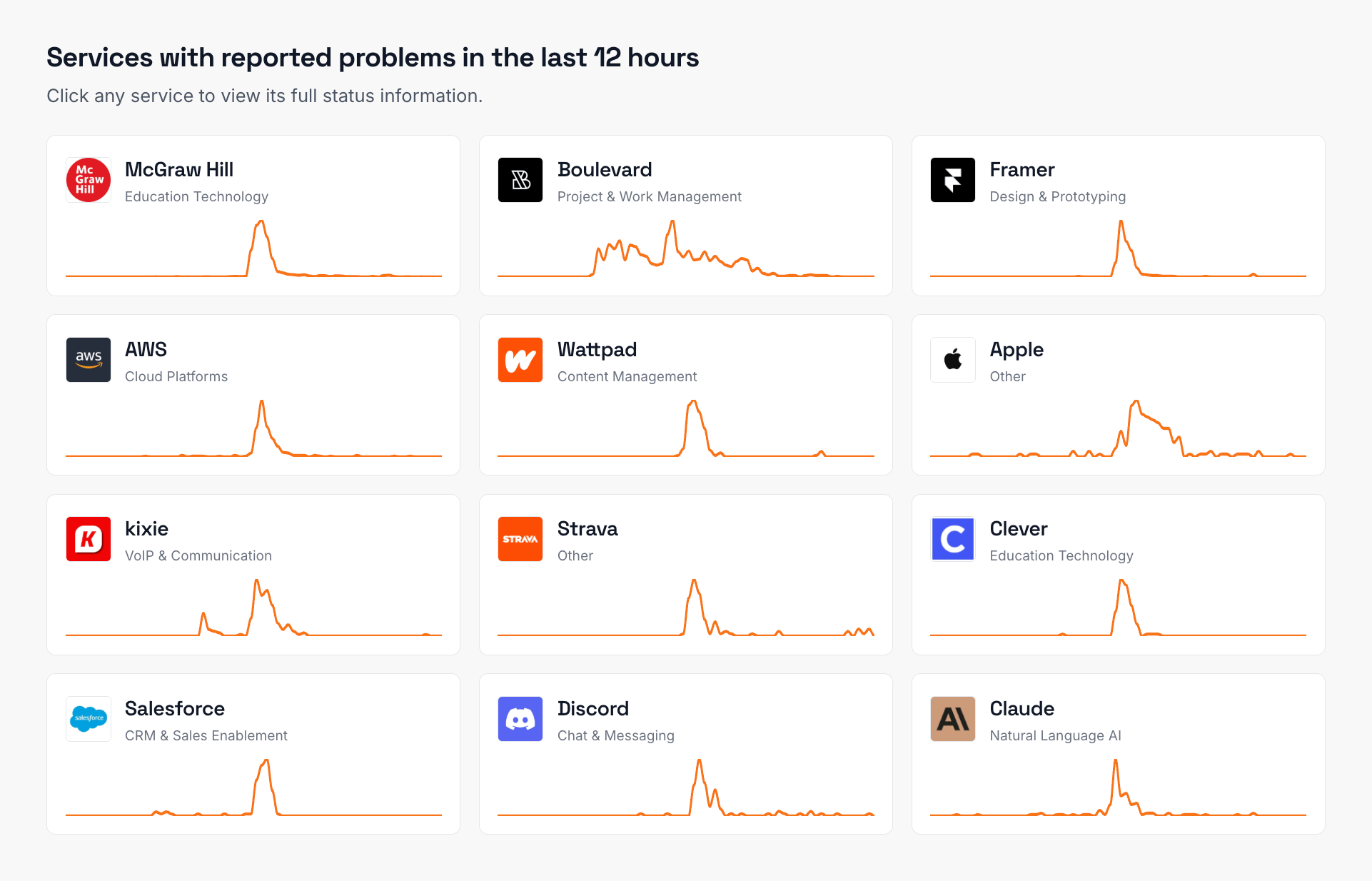

Buttondown service health over the last 24 hours

This chart shows the number of user-reported issues for Buttondown service health over the past 24 hours, grouped into 20-minute intervals. It's normal to see occasional reports, which may be due to individual user issues rather than a broader problem.
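As a rough illustration of the grouping described above, the sketch below counts report timestamps per 20-minute interval over a 24-hour window. The function name `bucket_reports` and the input format (a list of timezone-aware datetimes) are assumptions made for this example; this is not IsDown's actual implementation.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone

BUCKET = timedelta(minutes=20)      # interval size stated in the chart description
WINDOW = timedelta(hours=24)        # chart covers the past 24 hours
NUM_BUCKETS = WINDOW // BUCKET      # 72 intervals


def bucket_reports(report_times, now):
    """Count reports per 20-minute interval over the 24 hours ending at `now`.

    Hypothetical helper: `report_times` is any iterable of datetimes.
    """
    window_start = now - WINDOW
    counts = Counter()
    for t in report_times:
        if window_start <= t < now:
            # Integer index of the interval this report falls into (0..71).
            counts[(t - window_start) // BUCKET] += 1
    # Dense list so that quiet intervals appear as zero on a chart.
    return [counts.get(i, 0) for i in range(NUM_BUCKETS)]


now = datetime(2025, 7, 7, 12, 0, tzinfo=timezone.utc)
reports = [
    now - timedelta(minutes=5),
    now - timedelta(minutes=15),
    now - timedelta(hours=3),
]
series = bucket_reports(reports, now)
```

Here the two most recent reports land in the final interval and the report from three hours earlier lands in its own interval, which matches the idea that a few scattered reports do not necessarily indicate a broader problem.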

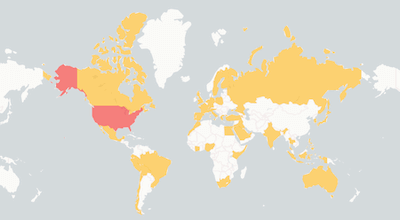

Outage Map

See where users report Buttondown is down. The map shows recent Buttondown outages from around the world.


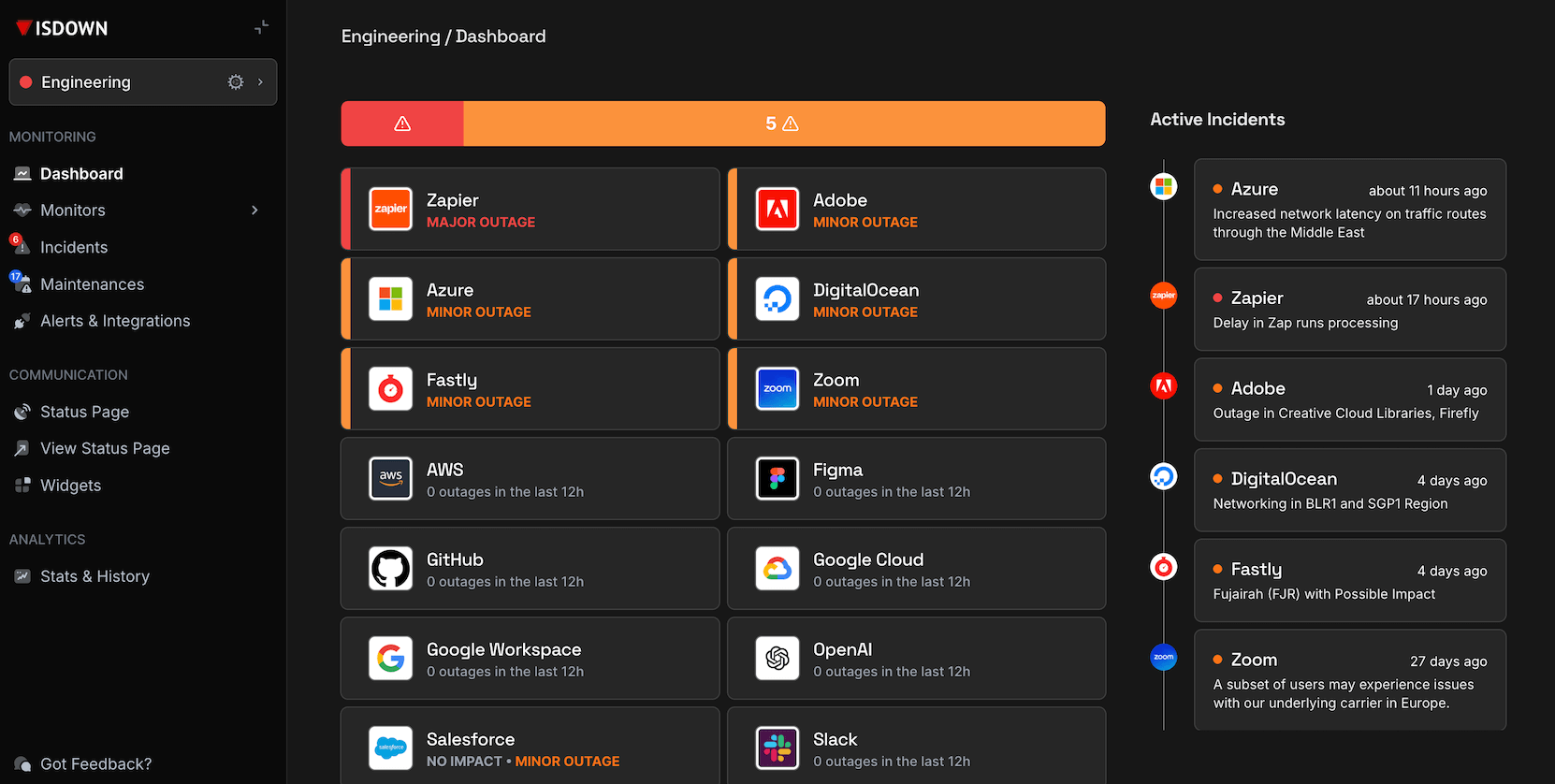

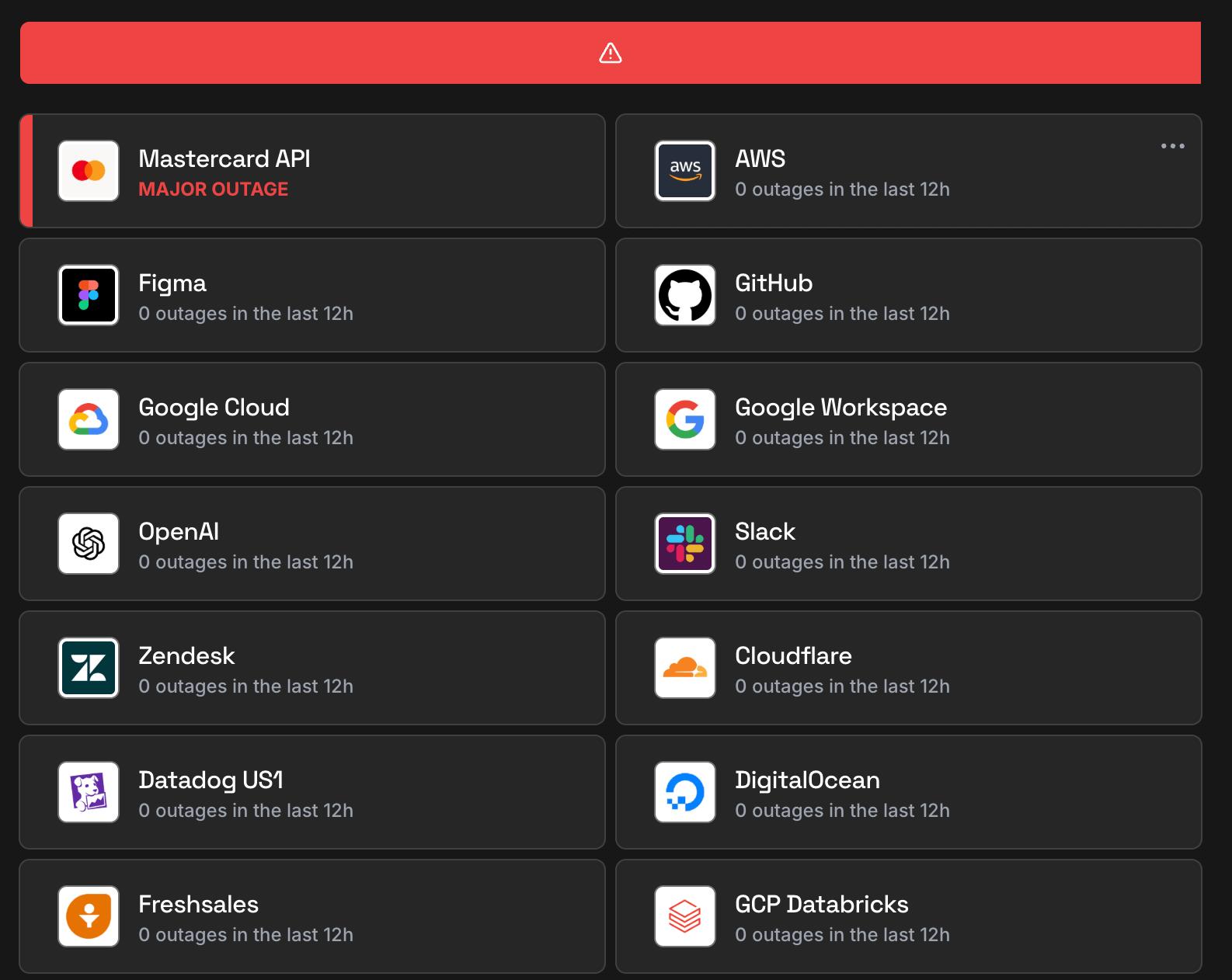

Recent Buttondown outages detected by IsDown

Full incident reports for recent Buttondown outages, including timelines, affected components, and resolution details.

| Title | Started At | Duration |
|---|---|---|
| | Jul 07, 2025 at 04:17 AM UTC | 8 minutes |
| | Jul 06, 2025 at 01:53 PM UTC | 40 minutes |
| | Jun 30, 2025 at 12:11 PM UTC | 8 minutes |
| We've identified that our hosting provider was incorrectly identifying incoming traffic as being ... | Jun 23, 2025 at 07:51 PM UTC | 6 days |
| | Jun 23, 2025 at 06:27 PM UTC | 20 minutes |
| | Jun 23, 2025 at 12:15 PM UTC | 12 minutes |
| Our upstream hosting provider, Heroku, is experiencing an ongoing outage. As a result: | Jun 10, 2025 at 02:36 PM UTC | 1 day |
| | Jun 09, 2025 at 04:00 PM UTC | 8 minutes |
| | May 30, 2025 at 10:57 AM UTC | 8 minutes |
| | May 24, 2025 at 11:03 AM UTC | 8 minutes |


Know the moment your vendors go down

Stop checking status pages manually. Get instant alerts to your team's tools.

14-day trial · No credit card · 5-min setup

About Buttondown Status and Outage Monitoring

IsDown has monitored Buttondown continuously since January 2021, tracking this email marketing service for 5 years. Over that time, we've documented 21 outages and incidents, averaging 0.3 per month.

To check if Buttondown is down, IsDown combines official status data with user reports for early detection. Vendors often delay acknowledging problems, so user reports help us alert you before the official announcement.

Engineering and operations teams rely on IsDown to track Buttondown status and receive verified outage alerts through Slack, Teams, PagerDuty, or 20+ other integrations.

How IsDown Monitors Buttondown

IsDown checks Buttondown's status page every few minutes, across all 9 components. We combine official status data with user reports to detect when Buttondown is down, often before the vendor announces it.
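The combination of official status data and user reports can be pictured with a small sketch. Everything named here is hypothetical: the `Signal` type, the status strings, and the default threshold of 10 reports are illustrative assumptions, since the page does not say how many reports count as "enough" or how the two signals are weighted.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    official_status: str  # assumed values: "operational", "degraded", "outage"
    user_reports: int     # number of user reports in the recent window


def assess(signal, report_threshold=10):
    """Return a coarse label from official status plus crowdsourced reports.

    `report_threshold` is an assumed value chosen for illustration only.
    """
    # An official non-operational status takes priority over user reports.
    if signal.official_status != "operational":
        return "official incident"
    # Many user reports with no official acknowledgement suggests early detection.
    if signal.user_reports >= report_threshold:
        return "possible outage (user reports)"
    return "operational"


label = assess(Signal(official_status="operational", user_reports=14))
```

In this sketch, a spike in user reports produces a "possible outage" label even while the official status still reads operational, which is the early-detection case the text describes.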

When Buttondown status changes, IsDown sends alerts to your preferred channels. Filter by severity to skip noise and focus on outages that affect your business.
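Severity filtering can be sketched as a simple threshold check. The severity names and their ordering below are assumptions for illustration; the page does not list the levels IsDown uses or how they are configured.

```python
# Hypothetical severity levels, ordered from least to most serious.
SEVERITY_ORDER = {"minor": 0, "major": 1, "critical": 2}


def should_alert(event_severity, minimum="major"):
    """Return True when an event is at or above the chosen minimum severity."""
    return SEVERITY_ORDER[event_severity] >= SEVERITY_ORDER[minimum]


events = [
    {"component": "API", "severity": "minor"},
    {"component": "Email delivery", "severity": "critical"},
]
# Keep only the events that meet the default "major" threshold.
to_send = [e for e in events if should_alert(e["severity"])]
```

With the default minimum of "major", only the critical event would be forwarded, so minor status changes do not generate notifications.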

How IsDown Works

Get started in minutes. Monitor all your critical services from one place.

We monitor official status pages

IsDown checks the status of over 6,020 services every few minutes. We aggregate and normalize official status information, and collect crowdsourced reports for early outage detection.

You get notified instantly

When we detect an incident or status change, you receive an alert immediately. Customize notifications by service, component, or severity to avoid alert fatigue.

Everything in one dashboard

View all your services in a unified dashboard or on a public or private status page. Send alerts to Slack, Teams, PagerDuty, Datadog, and 20+ other tools your team already uses.


Frequently Asked Questions

Is Buttondown down today?

Buttondown isn't down. You can check Buttondown's status and incident details at the top of the page. IsDown checks Buttondown's official status page every few minutes. In the last 24 hours, there were 0 outages reported.

What is the current Buttondown status?

Buttondown is currently operational. You can check Buttondown's status and incident details at the top of the page. The status is updated in near real time, and you can see the latest outages and issues affecting customers.

Is there a Buttondown outage now?

No, there is no ongoing official outage. Check the top of the page for any problems reported by other users.

Is Buttondown down today or just slow?

There are currently no reports of Buttondown being slow. Check the top of the page for any problems reported by other users.

How are Buttondown outages detected?

IsDown monitors the official Buttondown status page every few minutes. We also get reports from users like you. If there are enough reports about an outage, we'll show it at the top of the page.

Is Buttondown having an outage right now?

Buttondown's last outage was on July 07, 2025, with the title "Some services are down".

How often does Buttondown go down?

IsDown has tracked 21 Buttondown incidents since January 2021.

Is Buttondown down for everyone or just me?

Check the Buttondown status at the top of this page. IsDown combines official status page data with user reports to show whether Buttondown is down for everyone or if the issue is on your end.

What Buttondown components does IsDown monitor?

IsDown monitors 9 Buttondown components in real time, tracking the official status page for outages, degraded performance, and scheduled maintenance.

How does IsDown compare to DownDetector when monitoring Buttondown?

IsDown and DownDetector both help users determine whether Buttondown is having problems. The big difference is that IsDown is a status page aggregator. IsDown monitors a service's official status page to give our customers a more reliable source of information than user reports alone. This integration allows us to provide more details about Buttondown's outages, such as the incident title, description, updates, and the affected parts of the service. Additionally, users can create internal status pages and set up notifications for all their third-party services.
