Essential DevOps Metrics and KPIs for Team Success

DevOps metrics and KPIs serve as the compass for engineering teams navigating the complex landscape of modern software delivery. Without proper measurement, teams operate blindly, unable to identify bottlenecks, celebrate wins, or justify investments in process improvements. The right metrics provide objective data that transforms gut feelings into actionable insights.

The Four Key Metrics in DevOps

The DevOps Research and Assessment (DORA) team identified four key metrics that correlate strongly with high-performing technology organizations. These metrics have become the gold standard for measuring DevOps performance across industries.

1. Deployment Frequency

Deployment frequency measures how often your team successfully releases code to production. High-performing DevOps teams deploy multiple times per day, while lower performers might deploy monthly or even less frequently.

This metric reflects your team's ability to deliver value continuously. Frequent deployments indicate:

Smaller, more manageable changes

Reduced risk per deployment

Faster feedback loops

Higher confidence in the deployment process
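As a rough illustration, deployment frequency can be computed from a list of deployment timestamps. The sketch below assumes you can export successful production deployment times from your CI/CD tool; the function name, the trailing-window approach, and the sample data are all hypothetical.

```python
from datetime import datetime, timedelta


def deployments_per_day(deploy_times: list[datetime], window_days: int, now: datetime) -> float:
    """Average successful production deployments per day over a trailing window."""
    if window_days <= 0:
        raise ValueError("window_days must be positive")
    window_start = now - timedelta(days=window_days)
    # Count only deployments that fall inside the trailing window.
    recent = [t for t in deploy_times if window_start < t <= now]
    return len(recent) / window_days


# Hypothetical sample data: three deployments in the last 7 days, one older.
now = datetime(2024, 1, 8)
deploys = [
    datetime(2024, 1, 7, 9),
    datetime(2024, 1, 5, 14),
    datetime(2024, 1, 2, 11),
    datetime(2023, 12, 20, 10),
]
rate = deployments_per_day(deploys, window_days=7, now=now)
print(f"{rate:.2f} deployments per day")
```

A trailing window keeps the number responsive to recent changes; a longer window smooths out quiet weeks at the cost of reacting more slowly.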

2. Lead Time for Changes

Lead time measures the duration from code commit to code successfully running in production. Elite performers achieve lead times of less than one hour, while low performers might take months.

Shorter lead times enable:

Rapid response to market demands

Quick bug fixes and security patches

Improved developer satisfaction

Better competitive positioning
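One way to calculate lead time is to pair each change's commit timestamp with the time it reached production. The sketch below is a minimal example under that assumption; it reports the median rather than the mean so a single slow change does not dominate, which is a design choice rather than a requirement. The function name and sample data are hypothetical.

```python
from datetime import datetime, timedelta
from statistics import median


def median_lead_time(changes: list[tuple[datetime, datetime]]) -> timedelta:
    """Median of (deployed_at - committed_at) across a set of changes."""
    if not changes:
        raise ValueError("no changes to measure")
    seconds = [(deployed - committed).total_seconds() for committed, deployed in changes]
    return timedelta(seconds=median(seconds))


# Hypothetical (committed_at, deployed_at) pairs.
changes = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 9, 40)),    # 40 minutes
    (datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 12, 0)),   # 2 hours
    (datetime(2024, 1, 2, 8, 0), datetime(2024, 1, 2, 8, 50)),    # 50 minutes
]
lead = median_lead_time(changes)
print(lead)  # 0:50:00
```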

3. Change Failure Rate

Change failure rate is the percentage of deployments that degrade service and require immediate remediation, such as a hotfix, rollback, or patch. As a quality metric, it helps teams balance speed with stability.

Elite performers maintain failure rates below 15%, demonstrating that velocity doesn't have to compromise reliability. Tracking this metric helps teams:

Identify quality issues in the development process

Improve testing strategies

Refine deployment procedures

Build confidence in automation
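The calculation itself is a simple ratio. The sketch below assumes you already count total deployments and the subset that needed remediation; deciding what counts as a "failed" deployment is left to your team's definition, and the function name is hypothetical.

```python
def change_failure_rate(total_deployments: int, failed_deployments: int) -> float:
    """Percentage of deployments that required immediate remediation."""
    if total_deployments <= 0:
        raise ValueError("total_deployments must be positive")
    if not 0 <= failed_deployments <= total_deployments:
        raise ValueError("failed_deployments must be between 0 and total_deployments")
    return 100.0 * failed_deployments / total_deployments


# Hypothetical month: 40 deployments, 3 of which needed a rollback or hotfix.
cfr = change_failure_rate(total_deployments=40, failed_deployments=3)
print(f"{cfr}%")  # 7.5%
```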

4. Mean Time to Recovery (MTTR)

Mean time to recovery measures how quickly teams restore service after an incident. Elite performers recover in under an hour, while low performers might take days or weeks.

Fast recovery times indicate:

Effective incident response procedures

Good monitoring and alerting systems

Strong team collaboration

Well-documented systems

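Mean time to detect (MTTD) measures how long an incident goes unnoticed. Because recovery cannot begin until an incident is detected, understanding the relationship between MTTD and MTTR helps teams optimize their entire incident response lifecycle.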
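To make the relationship concrete, the sketch below computes mean time to detect (MTTD) and MTTR from the same incident records. It assumes each incident has a start, detection, and resolution timestamp, and that MTTR is measured from incident start, so it includes detection time. Definitions vary between teams, and the `Incident` class and sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Incident:
    started_at: datetime
    detected_at: datetime
    resolved_at: datetime


def mean_duration(durations: list[timedelta]) -> timedelta:
    if not durations:
        raise ValueError("no incidents to measure")
    return sum(durations, timedelta()) / len(durations)


def mttd(incidents: list[Incident]) -> timedelta:
    """Mean time from incident start to detection."""
    return mean_duration([i.detected_at - i.started_at for i in incidents])


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time from incident start to restored service (assumed definition)."""
    return mean_duration([i.resolved_at - i.started_at for i in incidents])


# Hypothetical incidents.
incidents = [
    Incident(datetime(2024, 1, 3, 10, 0), datetime(2024, 1, 3, 10, 10), datetime(2024, 1, 3, 10, 40)),
    Incident(datetime(2024, 1, 9, 14, 0), datetime(2024, 1, 9, 14, 20), datetime(2024, 1, 9, 15, 20)),
]
print(mttd(incidents))  # 0:15:00
print(mttr(incidents))  # 1:00:00
```

Under this definition, a quarter of the recovery time in the sample is spent before anyone knows there is a problem, which points at alerting rather than remediation as the first place to improve.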

Important DevOps Metrics Beyond DORA

While the four key performance indicators provide essential insights, additional metrics help teams measure DevOps success comprehensively.

Application Performance Metrics

Application performance directly impacts user experience and business outcomes. Key metrics include:

Response Time: How quickly your application responds to requests

Error Rate: Percentage of requests that result in errors

Throughput: Number of transactions processed per unit of time

Resource Utilization: CPU, memory, and storage consumption patterns
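The first three of these can be derived from request logs. The sketch below is a minimal example that assumes each log record carries a duration and an HTTP status code, treats 5xx responses as errors, and uses a nearest-rank percentile for response time. The `Request` class, the error definition, and the sample data are assumptions for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    duration_ms: float
    status: int


def error_rate(requests: list[Request]) -> float:
    """Percentage of requests that returned a 5xx status (assumed error definition)."""
    if not requests:
        raise ValueError("no requests to measure")
    errors = sum(1 for r in requests if r.status >= 500)
    return 100.0 * errors / len(requests)


def throughput(requests: list[Request], window_seconds: float) -> float:
    """Requests processed per second over the observation window."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return len(requests) / window_seconds


def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95 response time."""
    if not values or not 0 < pct <= 100:
        raise ValueError("need values and a pct in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical 10-second sample: 20 requests, one of which failed.
requests = [Request(duration_ms=10.0 * i, status=500 if i == 20 else 200) for i in range(1, 21)]
print(error_rate(requests))                                # 5.0
print(throughput(requests, window_seconds=10))             # 2.0
print(percentile([r.duration_ms for r in requests], 95))   # 190.0
```

In practice a monitoring tool usually computes these for you; the point of the sketch is only to show what each number means.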

DevOps Process Metrics

Process metrics provide insights into team efficiency and workflow health:

Code Review Time: Duration from pull request creation to merge

Build Success Rate: Percentage of builds that complete successfully

Test Coverage: Percentage of code covered by automated tests

Pipeline Duration: Total time from commit to production deployment

Team and Culture Metrics

DevOps success depends heavily on team dynamics and culture. Useful measures include:

Developer Satisfaction: Regular surveys measuring team morale

On-Call Burden: Distribution and frequency of after-hours incidents

Knowledge Sharing: Number of documentation updates and team learning sessions

Cross-Team Collaboration: Frequency of joint initiatives and shared responsibilities

How to Measure DevOps Metrics Effectively

Collecting metrics without proper context or action plans wastes valuable time. Effective measurement requires strategic planning and consistent execution.

Establish Baselines

Before implementing changes, document your current performance across all key metrics. This baseline enables you to:

Track improvement over time

Set realistic goals

Identify areas needing immediate attention

Celebrate meaningful progress
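A baseline is only useful if you compare against it later. The sketch below shows one simple way to do that, assuming you record each metric's value and whether higher or lower is better. The metric names and numbers are hypothetical.

```python
def percent_change(baseline: float, current: float) -> float:
    """Relative change from the baseline, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (current - baseline) / baseline


# Hypothetical baseline and current values.
baseline = {"deployments_per_week": 4.0, "lead_time_hours": 48.0}
current = {"deployments_per_week": 6.0, "lead_time_hours": 36.0}
# Desired direction for each metric: more deployments, shorter lead time.
higher_is_better = {"deployments_per_week": True, "lead_time_hours": False}


def improved(metric: str) -> bool:
    change = percent_change(baseline[metric], current[metric])
    return change > 0 if higher_is_better[metric] else change < 0


for name in baseline:
    change = percent_change(baseline[name], current[name])
    print(f"{name}: {change:+.1f}% ({'improved' if improved(name) else 'not improved'})")
```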

Automate Data Collection

Manual metric collection introduces errors and consumes valuable engineering time. Automation ensures:

Consistent, accurate data

Real-time visibility

Historical trend analysis

Reduced administrative overhead

Create Actionable Dashboards

Metrics provide value only when teams can easily access and understand them. Pairing dashboards with public and private status pages gives both users and stakeholders a clear view of ongoing incidents and system health. Effective dashboards:

Display metrics in context with targets and trends

Highlight anomalies requiring attention

Enable drill-down for detailed analysis

Support different stakeholder perspectives

Regular Review Cycles

Schedule regular metric reviews to maintain momentum:

Daily standups: Review deployment frequency and current incidents

Weekly retrospectives: Analyze change failure rates and recovery times

Monthly planning: Assess trends and adjust strategies

Quarterly reviews: Evaluate overall DevOps performance and set new goals

DevOps Best Practices for Metric Implementation

Successful metric programs follow proven patterns that balance measurement with action.

Start Small and Expand

Begin with the four key metrics before adding complexity. This approach:

Prevents analysis paralysis

Builds measurement habits

Demonstrates quick wins

Creates buy-in for expanded programs

Focus on Trends, Not Absolutes

Comparing your metrics to industry benchmarks provides context, but improvement trends matter more than absolute values. Your unique context influences what constitutes good performance.

Avoid Metric Gaming

When metrics become targets, teams might optimize for numbers rather than outcomes. Prevent gaming by:

Balancing competing metrics (speed vs. quality)

Focusing on customer value

Regularly reviewing metric relevance

Celebrating learning from failures

Connect Metrics to Business Outcomes

DevOps metrics help teams deliver business value. Connect technical metrics to business KPIs:

Deployment frequency → Feature delivery speed

Lead time → Time to market

Change failure rate → Customer satisfaction

MTTR → Revenue protection

Common Pitfalls in DevOps Metrics Tracking

Avoiding common mistakes accelerates your journey to data-driven DevOps excellence.

Measuring Everything

More metrics don't equal better insights. Too many metrics create:

Information overload

Unclear priorities

Wasted collection effort

Decision paralysis

Ignoring Context

Metrics without context mislead teams. Always consider:

Team size and structure

Application architecture

Industry requirements

Organizational maturity

Focusing Only on Speed

While deployment frequency and lead time matter, neglecting quality metrics creates technical debt and customer dissatisfaction. Balance is essential.

Neglecting Team Health

Pushing for metric improvements without considering team capacity leads to burnout. Sustainable improvement requires healthy, engaged teams.

Implementing DevOps Principles Through Metrics

Metrics serve as guideposts for implementing core DevOps principles effectively.

Continuous Improvement

Regular metric reviews identify improvement opportunities. Use data to:

Prioritize automation investments

Refine processes iteratively

Validate improvement hypotheses

Share successful practices

Shared Responsibility

Metrics visibility across teams breaks down silos. When everyone sees the same data:

Developers understand operational impact

Operations teams appreciate development challenges

Business stakeholders grasp technical constraints

Collaboration naturally increases

Feedback Loops

Metrics close feedback loops throughout the software development and delivery process. Faster feedback enables:

Quick course corrections

Reduced waste

Improved learning

Better decision-making

The Role of Monitoring in DevOps Success

Effective monitoring underpins successful DevOps metrics programs. Without proper monitoring:

Metrics lack accuracy

Incidents surprise teams

Recovery takes longer

Improvement stalls

Modern monitoring approaches include:

Synthetic monitoring: Proactive checks preventing user-facing issues

Real user monitoring: Actual user experience data

Infrastructure monitoring: System health and resource metrics

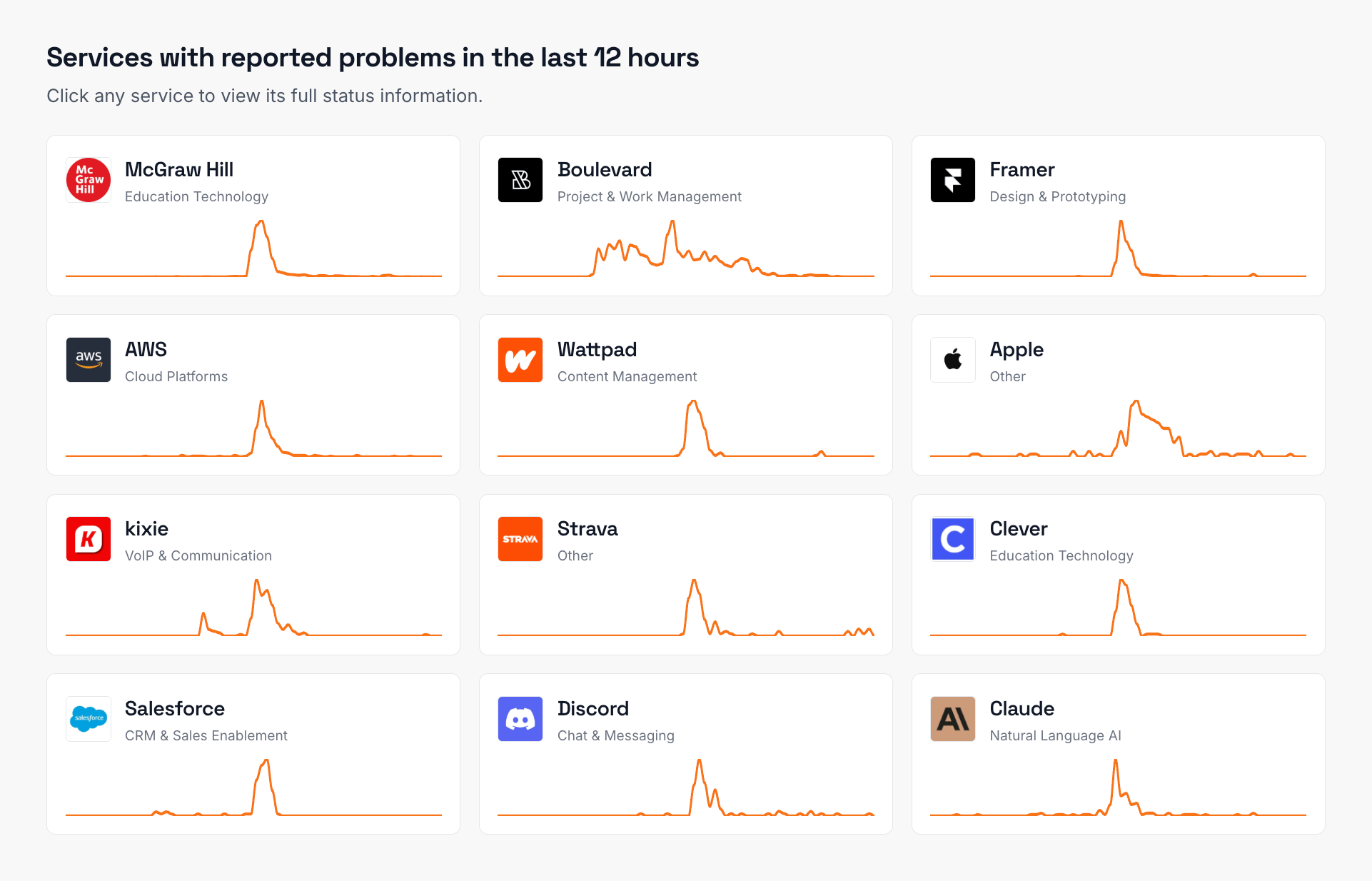

Third-party service monitoring: External dependency tracking

For teams relying on multiple external services, tools like IsDown aggregate status information from various providers, giving teams comprehensive visibility into their entire dependency chain. This visibility proves crucial for maintaining reliable services in today's interconnected environments.

Moving Forward with DevOps Metrics

Implementing effective DevOps metrics and KPIs requires commitment, patience, and continuous refinement. Start with the four key metrics, expand thoughtfully, and always connect measurements to meaningful outcomes.

Remember that metrics serve teams, not the reverse. Use data to inform decisions, celebrate progress, and identify opportunities. With the right approach, DevOps metrics transform from administrative burden to competitive advantage.

The journey toward data-driven DevOps excellence begins with a single metric. Choose one that matters to your team, start measuring today, and build from there. Your future self will thank you for the insights and improvements that follow.

Frequently Asked Questions

What are the most important DevOps metrics and KPIs to start tracking?

The four key metrics in DevOps you should start with are deployment frequency, lead time for changes, change failure rate, and mean time to recovery (MTTR). These DORA metrics provide a comprehensive view of your team's performance and are proven indicators of high-performing organizations. Once you master these, you can expand to include application performance metrics and team health indicators.

How often should we review our DevOps metrics?

Review cycles depend on the metric type and your team's maturity. Daily standups should cover deployment frequency and active incidents. Weekly retrospectives should analyze change failure rates and recovery times. Monthly reviews should assess trends and adjust strategies, while quarterly reviews evaluate overall DevOps performance against goals. The key is consistency and acting on insights rather than just collecting data.

What's the difference between DevOps metrics and traditional IT metrics?

DevOps metrics focus on flow, feedback, and continuous improvement across the entire software delivery lifecycle. Traditional IT metrics often emphasize stability and uptime in isolation. DevOps metrics like deployment frequency encourage frequent changes, while traditional metrics might discourage change to maintain stability. The DevOps approach balances speed with reliability through metrics that measure both aspects.

How do we prevent teams from gaming DevOps metrics?

Prevent metric gaming by balancing competing metrics (speed vs. quality), focusing on customer value over numbers, and celebrating learning from failures. Use metrics like change failure rate alongside deployment frequency to ensure teams don't sacrifice quality for speed. Regularly reviewing metric relevance and connecting technical metrics to business outcomes also help maintain focus on genuine improvement.

Which tools help automate DevOps metrics collection?

Modern DevOps platforms like GitLab, GitHub Actions, and Azure DevOps provide built-in metrics tracking. Specialized tools like Datadog, New Relic, and Prometheus offer comprehensive monitoring and metric collection. For tracking external dependencies that impact your metrics, status page aggregators consolidate information from multiple providers. Choose tools that integrate with your existing workflow to minimize friction.

How do we measure DevOps success in a microservices architecture?

Microservices architectures require service-level metrics in addition to system-wide measurements. Track the four key metrics for each service independently, then aggregate for overall performance. Pay special attention to inter-service dependencies, API performance, and distributed tracing. Consider implementing service level objectives (SLOs) with error budgets to balance innovation with reliability across your microservices ecosystem.
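An error budget is the amount of unreliability an SLO permits. The sketch below shows the basic arithmetic for a request-based SLO on a single service, assuming you can count total and failed requests over the SLO period. The function name and sample numbers are hypothetical.

```python
def error_budget_remaining(slo_target: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of the error budget still unspent; negative means the budget is overspent."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be between 0 and 1, e.g. 0.999")
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    # The budget is the number of failures the SLO allows over this period.
    allowed_failures = (1 - slo_target) * total_requests
    return 1 - failed_requests / allowed_failures


# Hypothetical service: 99.9% SLO, 1,000,000 requests, 250 failures.
remaining = error_budget_remaining(0.999, total_requests=1_000_000, failed_requests=250)
print(f"{remaining:.0%} of the error budget remains")  # roughly 75%
```

A service with most of its budget left has room to ship riskier changes; one that has overspent is a candidate for slowing down and investing in reliability.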

Nuno Tomas

Founder of IsDown
